Value alignment

AI should be designed with the norms and values of your user group in mind.

AI works alongside diverse human interests. People make decisions based on any number of contextual factors, including their experiences, memories, upbringing, and cultural norms. These factors give us a fundamental understanding of “right and wrong” in a wide range of contexts: at home, in the office, or elsewhere. This is second nature for humans, as we have a wealth of experiences to draw upon.

Today’s AI systems do not have these types of experiences to draw upon, so designers and developers must collaborate to ensure that existing values are considered. Care is required to ensure sensitivity to a wide range of cultural norms and values. As daunting as it may seem to take value systems into account, universal principles share a common core: they are a cooperative phenomenon. Successful teams already understand that cooperation and collaboration lead to the best outcomes.

01

Consider the culture that establishes the value systems you’re designing within. Whenever possible, bring in policymakers and academics who can help your team articulate relevant perspectives.

02

Work with design researchers to understand and reflect your users’ values. You can find out more about this process here.

03

Consider using an Ethics Canvas to map out your understanding of your users’ values and align the AI’s actions accordingly. Values will be specific to certain use cases and affected communities. Alignment will allow users to better understand your AI’s actions and intents.

“If machines engage in human communities as autonomous agents, then those agents will be expected to follow the community’s social and moral norms. A necessary step in enabling machines to do so is to identify these norms. But whose norms?”

- The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

To consider

- If you need somewhere to start, consider IBM’s Standards of Corporate Responsibility or use your company’s standards documentation.

- Values are subjective and differ globally. Global companies must take into account language barriers and cultural differences.

- Well-meaning values can create unintended consequences. For example, a tailored political newsfeed provides users with news that aligns with their beliefs but does not give them a complete picture of events.

Questions for your team

- Which group’s values are expressed by our AI and why?

- How do we agree on which values to consider as a team? (For more reading on moral alignment, check here.)

- How do we change or adjust the values reflected by our AI as our values evolve over time?

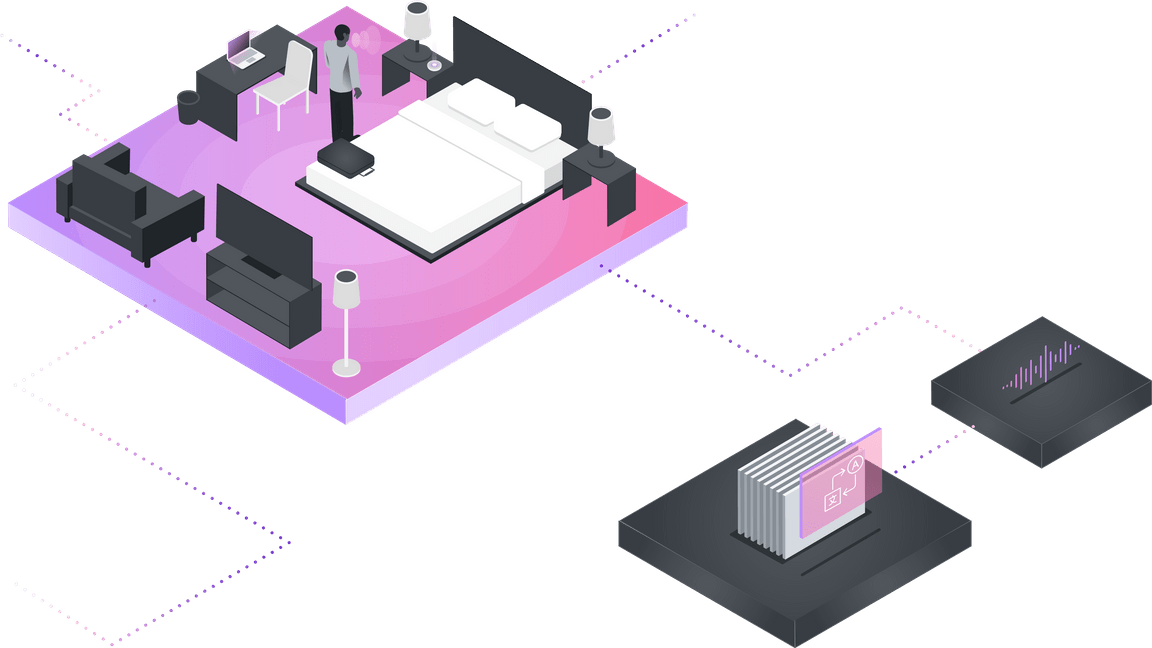

Value alignment example

- The team understands that for a voice-activated assistant to work properly, it must be “always listening” for a wake word. The team makes it clear to guests that the AI hotel assistant is designed not to keep any data or monitor guests without their knowledge, even while it is listening for a wake word.

- The audio collected while listening for a wake word is auto-deleted every 5 seconds. Even if a guest opts in, the AI does not actively listen in on guests unless it is called upon.

- The team knows that this agent will be used in hotels across the world, which will require different languages and customs. They consult with linguists to ensure the AI will be able to speak in guests’ respective languages and respect applicable customs.
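
As a rough illustration of the audio retention rule in the example above, the sketch below keeps only the most recent 5 seconds of audio in memory. It assumes that "auto-deleted every 5 seconds" means a rolling window, which is one possible reading. `WakeWordBuffer`, its methods, and the injectable `clock` are hypothetical names chosen for this illustration and do not describe any real assistant.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class WakeWordBuffer:
    """Hypothetical rolling buffer that retains audio for a short window only."""

    def __init__(
        self,
        retention_seconds: float = 5.0,  # the 5-second limit from the example
        clock: Callable[[], float] = time.monotonic,  # injectable for testing
    ) -> None:
        self.retention_seconds = retention_seconds
        self._clock = clock
        # Each entry is (time the chunk was received, raw audio bytes).
        self._chunks: Deque[Tuple[float, bytes]] = deque()

    def add(self, chunk: bytes) -> None:
        """Store a new audio chunk and drop anything past the retention window."""
        now = self._clock()
        self._chunks.append((now, chunk))
        self._purge(now)

    def snapshot(self) -> bytes:
        """Return the audio still inside the window, purging expired chunks first."""
        self._purge(self._clock())
        return b"".join(chunk for _, chunk in self._chunks)

    def _purge(self, now: float) -> None:
        # Chunks are stored oldest first, so expired ones are always on the left.
        while self._chunks and now - self._chunks[0][0] >= self.retention_seconds:
            self._chunks.popleft()


# Usage: feed microphone chunks as they arrive; a wake-word detector
# (not shown here) would only ever see what snapshot() returns.
buffer = WakeWordBuffer()
buffer.add(b"\x00\x01")
recent_audio = buffer.snapshot()
```

Keeping the retention limit in the data structure itself, rather than in a separate cleanup job, means there is no code path through which older audio could be read, which makes the promise to guests easier to verify.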