Explainability

AI should be designed for humans to easily perceive, detect, and understand its decision process.

In general, we don’t blindly trust those who can’t explain their reasoning. The same goes for AI, perhaps even more so. As an AI increases in capabilities and achieves a greater range of impact, its decision-making process should be explainable in terms people can understand. Explainability is key for users interacting with AI to understand the AI’s conclusions and recommendations. Your users should always be aware that they are interacting with an AI. Good design does not sacrifice transparency in creating a seamless experience. Imperceptible AI is not ethical AI.

01

Allow for questions. A user should be able to ask why an AI is doing what it’s doing on an ongoing basis. This should be clear and up front in the user interface at all times.

02

Decision-making processes must be reviewable, especially if the AI is working with highly sensitive personal data such as personally identifiable information, protected health information, and/or biometric data.

03

When an AI is assisting users with making any highly sensitive decisions, the AI must be able to provide them with a sufficient explanation of recommendations, the data used, and the reasoning behind the recommendations.

04

Teams should have and maintain access to a record of an AI’s decision processes and be amenable to verification of those decision processes.
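One way a team might act on the recommendations above is to store, for every recommendation, the data used and the reasoning behind it, so the record can be reviewed later and turned into an answer when a user asks "why?". The following is a minimal sketch, not a prescribed implementation: `DecisionRecord`, `DecisionLog`, and all field names are hypothetical, and a real system would persist records rather than keep them in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class DecisionRecord:
    """One reviewable entry: what was recommended, from which data, and why."""
    decision_id: str
    recommendation: str
    data_used: List[str]
    reasoning: str
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class DecisionLog:
    """Append-only, in-memory record of decisions (hypothetical helper)."""

    def __init__(self) -> None:
        self._records: Dict[str, DecisionRecord] = {}

    def record(self, decision_id: str, recommendation: str,
               data_used: List[str], reasoning: str) -> DecisionRecord:
        # Refuse to overwrite an entry so the history stays verifiable.
        if decision_id in self._records:
            raise ValueError(f"decision {decision_id!r} is already recorded")
        entry = DecisionRecord(decision_id, recommendation,
                               list(data_used), reasoning)
        self._records[decision_id] = entry
        return entry

    def explain(self, decision_id: str) -> str:
        """Answer a user's 'why?' in plain language from the stored record."""
        entry = self._records[decision_id]
        sources = ", ".join(entry.data_used) or "no user data"
        return (f"Recommended {entry.recommendation} because "
                f"{entry.reasoning} (data used: {sources}).")

    def all_records(self) -> List[DecisionRecord]:
        """Give reviewers the full history, in insertion order."""
        return list(self._records.values())
```

In this sketch the same record serves both audiences: `explain` produces the user-facing answer, while `all_records` gives the team the history it needs for review and verification.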

“IBM supports transparency and data governance policies that will ensure people understand how an AI system came to a given conclusion or recommendation. Companies must be able to explain what went into their algorithm’s recommendations. If they can’t, then their systems shouldn’t be on the market.”

- Data Responsibility at IBM

To consider

- Explainability is needed to build public confidence in disruptive technology, to promote safer practices, and to facilitate broader societal adoption.

- There are situations where users may not have access to the full decision process that an AI might go through, e.g. financial investment algorithms.

- Ensure an AI system’s level of transparency is clear. Users should stay generally informed on the AI’s intent even when they can’t access a breakdown of the AI’s process.

Questions for your team

- How do we build explainability into our experience without detracting from user experience or distracting from the task at hand?

- Do certain processes or pieces of information need to be hidden from users for security or IP reasons? How is this explained to users?

- Which segments of our AI decision processes can be articulated for users in an easily digestible and explainable fashion?

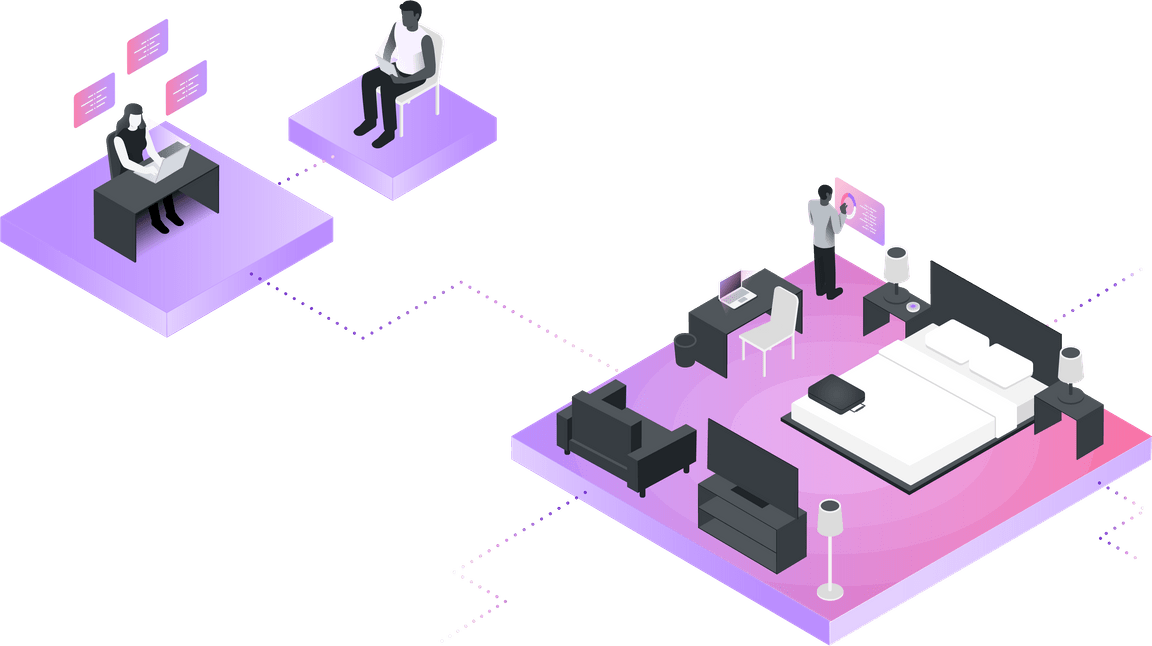

Explainability example

- Per GDPR (General Data Protection Regulation), a guest must explicitly opt in to use the hotel room assistant. Additionally, the guest will be provided with a transparent UI that shows how the AI makes its recommendations and suggestions.

- A researcher on the team learns, through interviews with hotel guests, that the guests want a way to opt into having their personal information stored. The team enables the AI to present guests (through voice or graphic UI) with options, so that the system gathers pieces of information only with their consent.

- With permission, the AI offers recommendations for places to visit during their stay. Guests can ask why these recommendations are made and which set of data is being utilized to make them.
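The hotel scenario above can be sketched in code. The sketch below is illustrative only and makes several assumptions: `Guest`, `Recommendation`, `recommend`, and the `PLACES` catalogue are hypothetical names, a single `"interest"` field stands in for whatever information a guest agrees to share, and nothing here is a statement of what GDPR compliance requires. It shows three ideas from the example: opt-in is off by default, data is stored only after consent, and every recommendation carries its reason and the data it used.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Guest:
    opted_in: bool = False  # explicit opt-in, off by default
    consented_fields: Set[str] = field(default_factory=set)
    data: Dict[str, str] = field(default_factory=dict)

    def grant(self, field_name: str, value: str) -> None:
        """Store one piece of information, only after the guest has opted in."""
        if not self.opted_in:
            raise PermissionError("guest has not opted in")
        self.consented_fields.add(field_name)
        self.data[field_name] = value


@dataclass
class Recommendation:
    place: str
    why: str              # plain-language answer to "why this?"
    data_used: List[str]  # which consented fields informed it


# Hypothetical catalogue: place name -> interest it matches.
PLACES = {"City Art Museum": "art", "Riverside Trail": "hiking"}


def recommend(guest: Guest) -> List[Recommendation]:
    """Recommend places using only data the guest agreed to share."""
    if not guest.opted_in or "interest" not in guest.consented_fields:
        return []
    interest = guest.data["interest"]
    return [
        Recommendation(
            place=place,
            why=f"you told us you are interested in {tag}",
            data_used=["interest"],
        )
        for place, tag in PLACES.items()
        if tag == interest
    ]
```

Because each `Recommendation` keeps its own `why` and `data_used`, the voice or graphic UI can answer a guest's question about a specific suggestion without re-running the recommender.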