How To

Summary

This document describes the requirements for creating a vNIC on IBM Power Systems and the different scenarios that can be implemented.

Objective

Steps

1.1 vNIC Introduction

The SR-IOV implementation on Power servers has an additional feature called vNIC (virtual Network Interface Controller).

A vNIC is a type of virtual Ethernet adapter that is configured on the client LPAR.

Each vNIC is backed by an SR-IOV logical port (LP) that is available on the VIO server.

The key advantage of placing the SR-IOV logical port on the VIOS is that the client LPAR remains eligible for Live Partition Mobility (LPM).

Although the backing device resides remotely, a technology called LRDMA (Logical Redirected DMA) allows the vNIC to map its transmit and receive buffers directly to the remote SR-IOV logical port.

The vNIC configuration happens on the HMC in a single step (in the GUI, this is available only in the Enhanced GUI). When you add a vNIC adapter to the LPAR, the HMC automatically creates all the necessary adapters on the VIOS (SR-IOV logical port, vNIC server adapter) and on the LPAR (vNIC client adapter). From the user's perspective, no additional configuration is needed on the VIOS side.

1.2 vNIC considerations

Adding a vNIC is really just one step in the Enhanced GUI:

Choose LPAR --> Virtual NICs --> Add Virtual NIC (choose a VIO server, capacity)

In addition, a Port VLAN ID (PVID) may be configured for an SR-IOV logical port to provide VLAN tagging and untagging.

Note: Labeling the SR-IOV physical ports is always recommended. Labels ease vNIC operations and avoid confusion during vNIC creation, when you select the physical port whose logical port will be the backing device on the VIOS.

You can add, view, change, and remove the virtual NICs of a partition using the Hardware Management Console (HMC).

Before you add a virtual NIC, ensure that your system meets the following prerequisites:

-> If the client partition is running:

-- The VIOS that hosts the virtual NIC is running with an active RMC connection.

-- The client partition has an active RMC connection.

-> If the client partition is shut down:

-- The VIOS that hosts the virtual NIC is running with an active RMC connection.

NOTE:

HMC automated management of the backing devices requires an RMC connection to the hosting VIOS; that is, the RMC connection must be working between the VIO server(s) and the HMC.

1.3 vNIC theoretical procedures

>> To add virtual NICs using the HMC, complete the following steps:

a. In the Properties pane, click Virtual NICs. The Virtual NIC page opens.

b. Click Add Virtual NIC. The Add Virtual NIC - Dedicated page opens with the SR-IOV physical ports listed in a table.

c. Click Add Entry or Remove Entry to add or to remove backing devices for the Virtual NIC.

>> To view virtual NICs using the HMC, complete the following steps:

a. In the Properties pane, click Virtual NICs. The Virtual NICs page opens with the virtual NIC adapters listed in a table.

b. Select the virtual NIC from the list for which you want to view the properties.

c. Click Action > View. The View Virtual NIC page opens.

d. View the properties of the virtual NIC backing device, the MAC address settings, and the VLAN ID settings for the virtual NIC.

e. Click Close.

>> To change virtual NICs using the HMC, complete the following steps:

a. In the Properties pane, click Virtual NICs. The Virtual NICs page opens with the virtual NIC adapters listed in a table.

b. Select the virtual NIC from the list for which you want to change the properties.

c. Click Action > Modify. The Modify Virtual NIC page opens.

d. View the properties of the backing device, the MAC address settings, and the VLAN ID settings for the virtual NIC.

e. You can change the Port VLAN ID and PVID priority for the selected virtual NIC.

f. Click Close.

>> To remove virtual NICs using the HMC, complete the following steps:

a. In the Properties pane, click Virtual NICs. The Virtual NICs page opens with the virtual NIC adapters listed in a table.

b. Select the virtual NIC that you want to remove.

c. Click Action > Remove. When the delete confirmation message appears, click OK to remove the vNIC.

1.4 vNIC procedures from HMC enhanced GUI

To add a new vNIC adapter to a partition, open Partition Properties and select the Virtual NICs task from the Menu Pod, under the Virtual I/O section.

Use the following procedure to add a virtual network interface controller (NIC) to the client partition.

Before you create a virtual NIC, ensure that you meet the following prerequisites if the client partition is running:

• The Virtual I/O Server (VIOS) that hosts the virtual NIC is running with an active Resource Monitoring and Control (RMC) connection.

• The client partition has an active RMC connection.

Ensure that you meet the following prerequisite if the client partition is shut down:

• The Virtual I/O Server (VIOS) that hosts the virtual NIC is running with an active RMC connection.

When you add the virtual NIC, you can configure the backing device, VIOS that hosts the virtual NIC, and virtual NIC capacity.

The SR-IOV physical port table lists all the physical ports that you can select to configure a backing device for the virtual NIC. The SR-IOV physical port must be on a running SR-IOV adapter in shared mode and must not have a diagnostic logical port configured. The SR-IOV physical port must have enough available capacity to create an Ethernet logical port with the minimum capacity, and it must not have reached its Ethernet logical port limit.

You can choose the VIOS that hosts the backing device for the virtual NIC. Only Virtual I/O Servers that meet the following requirements are displayed in the VIOS list:

• The VIOS version must be 2.2.4.0 or later.

• The VIOS is running with an active RMC connection to the management console.

In addition, if the client partition is shut down, Virtual I/O Servers that are shut down are also displayed in the VIOS list.

You can specify the capacity of the virtual NIC as a percentage of the total capacity of the SR-IOV physical port. You can also configure the advanced settings, such as allowed MAC addresses and VLAN IDs.

When you add a virtual NIC to a running partition, the Device Number (vNIC adapter ID) is shown as unavailable. Run cfgmgr on AIX and then refresh the Virtual NICs page on the HMC to update the device name.

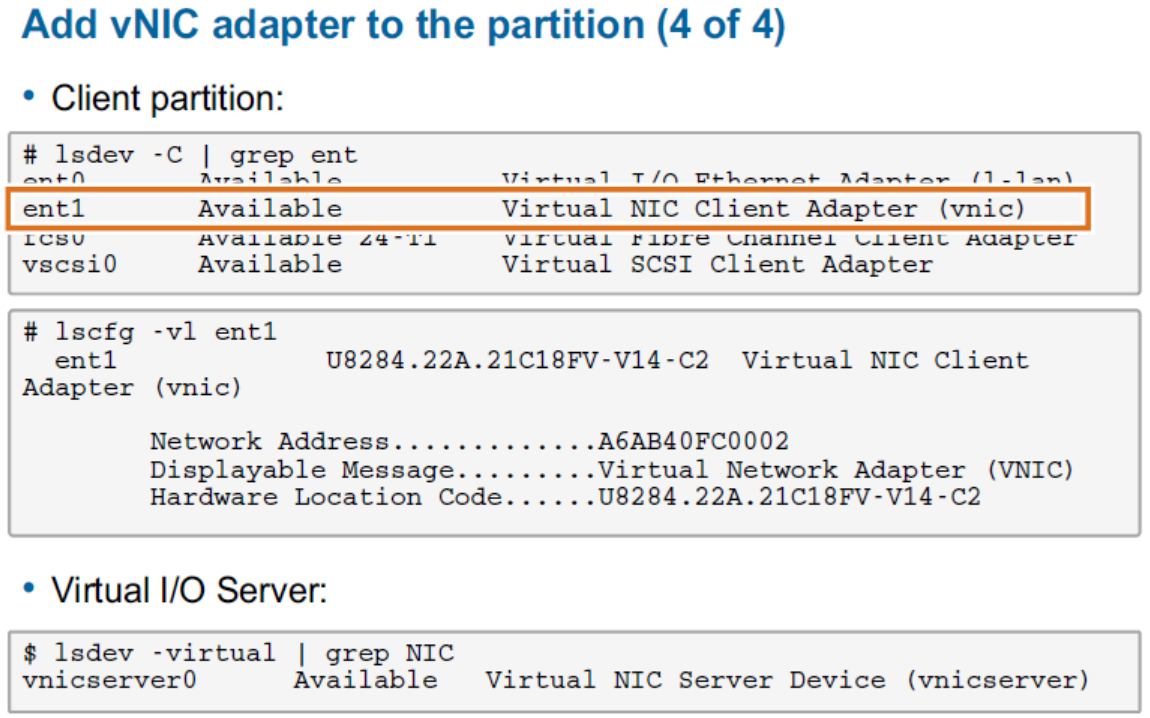

AIX device management commands (for example, lsdev -Cc adapter) can be used to list the available Virtual NIC Client Adapters on the client partition. When a vNIC is added to a client partition, a Server Virtual NIC Adapter is created automatically on the Virtual I/O Server.

During LPM or Remote Restart operations, the HMC creates the vNIC server and backing devices on the target system and cleans up the devices on the source system when LPM completes. The HMC also provides auto-mapping of devices, namely selecting a suitable VIOS and SR-IOV adapter port to back each vNIC device. The SR-IOV port label, available capacity, and VIOS redundancy are among the criteria the HMC uses for auto-mapping. Optionally, users can specify their own mapping manually.

1.5 vNIC procedures from HMC command line

Many tasks can also be done from the HMC command line interface, which can save a lot of time and effort, especially for repetitive create, add, and remove operations on your HMC.

1.5.1 vNIC dynamic setup by HMC command line

Just as you can create a vNIC adapter for an LPAR dynamically from the HMC Enhanced GUI, you can use the chhwres command to add it dynamically, specifying the backing SR-IOV adapter ID, phys_port ID, capacity, hosting VIOS, failover priority, and so on.

First, make sure the SR-IOV adapters are in shared mode (change them from dedicated mode to shared mode from the Hardware Virtualized I/O tab).

Then identify the available SR-IOV ports whose link is up (state=1) by running the following on the HMC:

$ lshwres -m SYS_NAME -r sriov --rsubtype physport --level ethc -F state phys_port_loc adapter_id phys_port_id --header | sort -n | egrep '^1|state'

state  phys_port_loc                  adapter_id  phys_port_id
1      U78D5.ND1.CSS36CA-P1-C1-C1-T1  1           0
1      U78D5.ND1.CSS36CA-P1-C4-C1-T2  2           1
1      U78D5.ND2.CSS3677-P1-C1-C1-T1  3           0
1      U78D5.ND2.CSS3677-P1-C4-C1-T2  4           1
1      U78D5.ND3.CSS35CA-P1-C1-C1-T1  6           0
1      U78D5.ND3.CSS35CA-P1-C4-C1-T2  7           1
1      U78D5.ND4.CSS36CB-P1-C1-C1-T1  9           0
1      U78D5.ND4.CSS36CB-P1-C4-C1-T2  10          1
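Because the backing_devices string used below refers to ports by adapter_id and phys_port_id, it can help to reduce this listing to those two numbers. A minimal sketch, assuming the output was captured with the -F column order shown above and is processed on a workstation with a POSIX shell and awk; `linkup_ports` is a hypothetical helper name, not an HMC command, and the ssh usage line is an assumption about your environment:

```shell
#!/bin/sh
# Hypothetical helper (not an HMC command). It reads lshwres output produced with
#   -F state phys_port_loc adapter_id phys_port_id
# on stdin and prints "adapter_id/phys_port_id" for each port whose link is up.
linkup_ports() {
    # The header row has "state" in column 1, so only data rows with state 1 match.
    awk '$1 == "1" { print $3 "/" $4 }'
}

# Example (assumes ssh access to an HMC named "myhmc" as user hscroot):
#   ssh hscroot@myhmc "lshwres -m SYS_NAME -r sriov --rsubtype physport --level ethc \
#     -F state phys_port_loc adapter_id phys_port_id" | linkup_ports
```

For the listing above, this would print 1/0, 2/1, 3/0 and so on, one pair per line.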

For example, assume the LPAR name is mash_lpar, the hosting VIOS is VIOS1, and the backing device is SR-IOV adapter ID 1, phys_port ID 0. The command is:

chhwres -r virtualio -m SYS_NAME -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/VIOS1//1/0/20/\""

The backing_devices value uses the following format:

sriov/VIOS_LPAR_NAME/VIOS_LPAR_ID/sriov-adapter-ID/sriov-phys_port-ID/[capacity][/[failover-priority]]

The leading word sriov is just a keyword that identifies the backing device type. The VIOS can be identified by name or by partition ID; the examples in this document use the name and leave the ID field empty, which is why two slashes follow the VIOS name.

The previous command dynamically creates a vNIC adapter for mash_lpar, hosted by VIOS1 on SR-IOV adapter ID 1, phys_port ID 0, with a capacity of 20%.

The failover priority is not set here, so the HMC sets it to its default value of 50. If you specify a value, it must be from 1 to 100, with 1 being the highest priority.

If you need to specify it, add it as the last field of the backing device. The following example uses the set operation (-o s) on the existing vNIC in virtual slot 35 (-s 35) and adds (+=) a backing device with an explicit priority:

$ chhwres -m MY_SYS -r virtualio --rsubtype vnic -p mash_lpar -s 35 -o s -a "\"backing_devices+=sriov/VIOS1//1/0/20/1\""

Here 20 is the capacity and 1 is the failover priority. The priority matters only when a vNIC has more than one backing device, so it is not important when only one hosting VIOS is used.

Likewise, if you do not set the capacity, the HMC sets it to the minimum (2%).
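To avoid typing mistakes in the slash-separated format, an entry can be assembled by a small function. This is a sketch under stated assumptions: `backing_dev` is a hypothetical name, the VIOS is always given by name (ID field left empty), and the only check performed is the 1 to 100 priority range described above; capacity limits are left to the HMC to enforce.

```shell
#!/bin/sh
# Hypothetical helper: prints one backing-device entry in the format
#   sriov/VIOS_NAME//ADAPTER_ID/PHYS_PORT_ID/[capacity][/[failover-priority]]
# The VIOS partition ID field is left empty because the VIOS is given by name.
# Arguments: VIOS name, adapter ID, physical port ID, capacity (%), optional priority.
backing_dev() {
    vios=$1 adapter=$2 port=$3 capacity=$4 priority=$5
    if [ -z "$priority" ]; then
        # No priority given: the HMC applies its default (50).
        printf '%s\n' "sriov/$vios//$adapter/$port/$capacity"
        return 0
    fi
    case $priority in
        *[!0-9]*) echo "backing_dev: priority must be a number" >&2; return 1 ;;
    esac
    # Valid failover priorities are 1 (highest) to 100.
    if [ "$priority" -lt 1 ] || [ "$priority" -gt 100 ]; then
        echo "backing_dev: priority must be between 1 and 100" >&2
        return 1
    fi
    printf '%s\n' "sriov/$vios//$adapter/$port/$capacity/$priority"
}

# Example: the entry used in the single-VIOS command above, with priority 1.
#   backing_dev VIOS1 1 0 20 1     # prints sriov/VIOS1//1/0/20/1
```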

To create the vNIC with two VIOS in a vNIC failover setup, run the same chhwres command with two backing devices:

$ chhwres -r virtualio -m S2-9080-M9S-SN78410E8 -o a -p test-lpar --rsubtype vnic -a "\"backing_devices=sriov/VIOS1//1/0/20/1,sriov/VIOS2//7/1/20/50\""

The above command creates a vNIC backed by adapter ID 1, phys_port 0 (hosted by VIOS1) with priority 1, and by adapter ID 7, phys_port 1 (hosted by VIOS2) with priority 50.
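When several vNICs must be added, it can be convenient to generate the chhwres line rather than type it. The sketch below (`vnic_add_cmd` is a hypothetical helper, not an HMC command) only prints the command, reproducing the quoting used in the examples in this document, so it can be reviewed before being pasted into the HMC shell:

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints (does not run) a chhwres command that adds
# one vNIC to partition $2 on managed system $1, backed by the remaining
# arguments (backing-device entries such as sriov/VIOS1//1/0/20/1).
vnic_add_cmd() {
    sys=$1 lpar=$2
    shift 2
    # Join the backing-device entries with commas.
    list=$(IFS=,; printf '%s' "$*")
    # \\\" yields a literal \" so the output matches the quoting used in this document.
    printf '%s\n' "chhwres -r virtualio -m $sys -o a -p $lpar --rsubtype vnic -a \"\\\"backing_devices=$list\\\"\""
}

# Example: reproduces the dual-VIOS command above.
#   vnic_add_cmd S2-9080-M9S-SN78410E8 test-lpar sriov/VIOS1//1/0/20/1 sriov/VIOS2//7/1/20/50
```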

A real example: creating 8 vNIC adapters for mash_lpar, each with failover across both VIO servers, backed by SR-IOV ports 1/0, 4/0, 8/1, and 11/1 (adapter_id/phys_port_id) from the list below. The hosting VIO servers must have an active RMC connection.

lshwres -m MY_SYS -r sriov --rsubtype physport --level ethc -F state phys_port_loc adapter_id phys_port_id phys_port_label phys_port_sub_label --header | sort -n | egrep '^1|state'

state  phys_port_loc                  adapter_id  phys_port_id  phys_port_label  phys_port_sub_label
1      U78D5.ND1.CSS363A-P1-C1-C1-T1  1           0             ND1C1T1          V1
1      U78D5.ND1.CSS363A-P1-C4-C1-T2  2           1             ND1C4T2          V1
1      U78D5.ND2.CSS368E-P1-C1-C1-T1  4           0             ND2C1T1          V2
1      U78D5.ND2.CSS368E-P1-C4-C1-T2  5           1             ND2C4T2          V2
1      U78D5.ND3.CSS3428-P1-C1-C1-T1  7           0             ND3C1T1          V1
1      U78D5.ND3.CSS3428-P1-C4-C1-T2  8           1             ND3C4T2          V1
1      U78D5.ND4.CSS36BF-P1-C1-C1-T1  12          0             ND4C1T1          V2
1      U78D5.ND4.CSS36BF-P1-C4-C1-T2  11          1             ND4C4T2          V2

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS1//1/0/30/1,sriov/S1-VIOS2//11/1/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS2//4/0/30/1,sriov/S1-VIOS1//8/1/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS2//4/0/30/1,sriov/S1-VIOS1//8/1/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS1//1/0/30/1,sriov/S1-VIOS2//11/1/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS1//8/1/30/1,sriov/S1-VIOS2//4/0/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS2//11/1/30/1,sriov/S1-VIOS1//1/0/30/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS2//11/1/10/1,sriov/S1-VIOS1//1/0/10/20\""

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS1//8/1/10/1,sriov/S1-VIOS2//4/0/10/20\""
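Each backing device is its own SR-IOV logical port, so the capacities requested on one physical port add up and cannot exceed 100% of that port. A quick way to check a planned set of commands is to total the capacity per adapter/port. A hedged sketch with a hypothetical `sum_port_capacity` helper, assuming standby backing devices reserve capacity just like active ones:

```shell
#!/bin/sh
# Hypothetical planning check: reads backing-device entries, one per line, in the
# form sriov/VIOS//ADAPTER/PORT/CAPACITY[/PRIORITY] and prints the total capacity
# requested on each ADAPTER/PORT, marking totals above 100%.
sum_port_capacity() {
    awk -F/ '
        # Field 4 = adapter ID, 5 = physical port ID, 6 = capacity.
        NF >= 6 { cap[$4 "/" $5] += $6 }
        END {
            for (p in cap) {
                flag = (cap[p] > 100) ? "  OVER 100%" : ""
                printf "%s %d%s\n", p, cap[p], flag
            }
        }' | sort -t/ -k1,1n -k2,2n
}

# Example with the entries of the first two commands above:
#   printf '%s\n' sriov/S1-VIOS1//1/0/30/1 sriov/S1-VIOS2//11/1/30/20 \
#                 sriov/S1-VIOS2//4/0/30/1 sriov/S1-VIOS1//8/1/30/20 | sum_port_capacity
```

Fed all sixteen backing-device entries from the eight commands above, the sketch reports 100 for each of 1/0, 4/0, 8/1, and 11/1, so that plan allocates each of the four ports fully.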

It is then strongly recommended to save the current configuration to the LPAR profile:

$ mksyscfg -r prof -m MY_SYS -o save -p mash_lpar -n default_lpar --force

Alternatively, set sync_curr_profile for any LPAR or VIOS so that every dynamic change is synchronized to its current profile and used at the next activation:

$ chsyscfg -r lpar -m SYS_NAME -i "name=LPAR_NAME,sync_curr_profile=1"
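Enabling sync_curr_profile on many partitions is a typical repetitive task. A dry-run sketch that prints one chsyscfg command per partition; `sync_profile_cmds` is a hypothetical name and the partition names in the usage line are placeholders:

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints (does not run) one chsyscfg command per
# partition that enables sync_curr_profile on managed system $1.
sync_profile_cmds() {
    sys=$1
    shift
    for lpar in "$@"; do
        printf '%s\n' "chsyscfg -r lpar -m $sys -i \"name=$lpar,sync_curr_profile=1\""
    done
}

# Example with placeholder names:
#   sync_profile_cmds MY_SYS mash_lpar S1-VIOS1 S1-VIOS2
```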

Bear in mind that VLAN IDs can also be added after you create the vNIC adapter; the vNIC adapter properties are easy to modify from the LPAR's page in the HMC Enhanced GUI.

You can also set the PVID while creating the vNIC adapter from the HMC CLI, selecting either a single VIO server or two for failover:

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "port_vlan_id=3,\"backing_devices=sriov/vios01//1/0/30/1,sriov/vios02//11/1/30/20\""
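When the PVID is part of the -a value, the backing_devices list must stay inside its own quotes because it contains commas. A sketch of a hypothetical `vnic_attrs` helper that builds that value and rejects PVIDs outside 1 to 4094 (the IEEE 802.1Q range for usable VLAN IDs); passing an empty PVID omits the attribute:

```shell
#!/bin/sh
# Hypothetical helper: prints the -a value for "chhwres ... --rsubtype vnic",
# i.e. port_vlan_id=N,"backing_devices=ENTRY1,ENTRY2,..."
# $1 = PVID (empty to omit), remaining arguments = backing-device entries.
vnic_attrs() {
    pvid=$1
    shift
    # Backing-device entries are joined with commas inside one quoted value.
    list=$(IFS=,; printf '%s' "$*")
    if [ -z "$pvid" ]; then
        printf '%s\n' "\"backing_devices=$list\""
        return 0
    fi
    case $pvid in
        *[!0-9]*) echo "vnic_attrs: PVID must be numeric" >&2; return 1 ;;
    esac
    if [ "$pvid" -lt 1 ] || [ "$pvid" -gt 4094 ]; then
        echo "vnic_attrs: PVID must be between 1 and 4094" >&2
        return 1
    fi
    printf '%s\n' "port_vlan_id=$pvid,\"backing_devices=$list\""
}

# Example: the value used by the dual-VIOS command above
#   chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic \
#     -a "$(vnic_attrs 3 sriov/vios01//1/0/30/1 sriov/vios02//11/1/30/20)"
```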

1.5.2 vNIC manual setup by HMC command line

You can also create the vNIC adapter for an LPAR manually, backed by either a single VIOS or dual VIOS, by adding the vNIC specification directly to the LPAR profile.

Although chsyscfg supports this for both single and dual VIOS hosting, the profile itself offers no option to create or delete a vNIC, and this method is not recommended: once you create it and activate the LPAR, activation can get stuck with an error message saying that the HMC cannot find the backing device because there is no RMC connection between the VIOS(s) and the HMC.

The chsyscfg command to create the vNIC in the profile is simple:

$ chsyscfg -r prof -m MY_SYS -i "name=default_profile,lpar_name=mash_lpar,\"vnic_adapters+=\"\"slot_num=35:backing_devices=sriov/VIOS1//1/0/20\""

This creates a vNIC adapter for the mash_lpar partition in virtual slot number 35. The backing device is hosted by VIOS1 on adapter ID 1, phys_port 0, with a capacity of 20%. The failover priority defaults to 50.

Similarly, you can manually create the vNIC from both VIOS (vNIC failover) using the same chsyscfg command:

$ chsyscfg -r prof -m MY_SYS -i "name=default_profile,lpar_name=mash_lpar,\"vnic_adapters+=\"\"slot_num=35:backing_devices=sriov/VIOS1//1/0/20/1,sriov/VIOS2//7/1/20/2\""

1.6 vNIC different scenarios and setup methods

You can choose between two vNIC redundancy setups: vNIC with NIB, or vNIC failover.

1.6.1 vNIC setup with NIB

If two vNICs are created, each coming from a different VIOS, high availability can be achieved by creating an EtherChannel in NIB mode on top of them (one adapter is active, the other is the backup):

# lsdev -Cc adapter

ent0 Available Virtual I/O Ethernet Adapter (l-lan)

ent1 Available Virtual NIC Client Adapter (vnic) <-- coming from VIOS1

ent2 Available Virtual NIC Client Adapter (vnic) <-- coming from VIOS2

After creating the NIB (Network Interface Backup) configuration with `smitty etherchannel` and assigning an IP address, you can check the status of the vNIC adapters with the AIX kdb command:

# echo "vnic" | kdb

|------------------+--------+------+-------|
| pACS             | Device | Link | State |
|------------------+--------+------+-------|
| F1000A00328C0000 | ent1   | Up   | Open  |
|------------------+--------+------+-------|
| F1000A00328E0000 | ent2   | Up   | Open  |
|------------------+--------+------+-------|

If VIOS1 goes down, errpt shows the following entry:

59224136 0320144317 P H ent3 ETHERCHANNEL FAILOVER

The kdb status then shows the vNIC from VIOS1 as down:

# echo "vnic" | kdb

|------------------+--------+------+---------|
| pACS             | Device | Link | State   |
|------------------+--------+------+---------|
| F1000A00328C0000 | ent1   | Down | Unknown |
|------------------+--------+------+---------|
| F1000A00328E0000 | ent2   | Up   | Open    |
|------------------+--------+------+---------|
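When checking many partitions, the Link column of this table can be filtered automatically. A sketch, assuming the pipe-delimited layout shown above (the exact kdb formatting may differ between AIX levels); `vnic_down_links` is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: reads the output of  echo "vnic" | kdb  on stdin and
# prints the device name of every vNIC whose Link column is "Down".
# Assumes rows of the form  | pACS | Device | Link | State |
vnic_down_links() {
    awk -F'|' 'NF >= 6 {
        dev = $3; link = $4
        gsub(/[[:space:]]/, "", dev)    # strip padding around the cell text
        gsub(/[[:space:]]/, "", link)
        if (link == "Down") print dev
    }'
}

# Example on the client LPAR:
#   echo "vnic" | kdb | vnic_down_links
```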

1.6.2 vNIC failover setup

vNIC failover provides a high availability solution at the LPAR level. In this configuration, a vNIC client adapter can be backed by multiple logical ports to avoid a single point of failure.

At any time, only one logical port is connected to the vNIC client. This is similar to a NIB configuration, but only one adapter exists on the LPAR. If the active connection fails, the hypervisor selects a new backing device.

Minimum prerequisites for vNIC failover:

- VIOS Version 2.2.5

- System Firmware Release 860.10

- HMC Release 8 Version 8.6.0

- AIX 7.1 TL4 or AIX 7.2

Creating vNIC failover configuration is simple:

Choose LPAR --> Virtual NICs --> Add Virtual NIC

The existing vNIC adapters (without failover) do not need to be removed; they can be modified online.

With "Add Entry" you can add new rows for backing devices (each with a priority value).

Auto Priority Failover: when enabled and the active backing device is not the one with the highest priority (for example, because a higher-priority device comes online), the hypervisor automatically fails over to the higher-priority device.

For failover priority, a smaller number means a higher priority.
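The selection rule can be modeled in a few lines. This is a conceptual sketch only, not the hypervisor's implementation: it assumes each backing device is described as `name priority status` and that, with Auto Priority Failover enabled, the active device is the operational one with the smallest priority number (ties go to the first one listed, an arbitrary choice of this sketch). `pick_active` is a hypothetical name.

```shell
#!/bin/sh
# Conceptual model (hypothetical helper, not hypervisor code).
# Input lines: "<name> <failover-priority> <up|down>"
# Output: the name of the operational backing device with the lowest priority number.
pick_active() {
    awk '$3 == "up" && (best == "" || $2 + 0 < bestp) {
             best = $1
             bestp = $2 + 0    # force numeric comparison (so 20 < 100)
         }
         END { if (best != "") print best }'
}

# Example: the priority-1 device is down, so the priority-20 device is chosen.
#   printf '%s\n' "VIOS1:8/1 1 down" "VIOS2:4/0 20 up" | pick_active
```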

The entstat command in AIX shows, among many other details, which VIOS is currently used for the network:

# entstat -d entX | tail

Server Information:

LPAR ID: 1

LPAR Name: VIOS1

VNIC Server: vnicserver1

Backing Device: ent17

Backing Device Location: U98D8.001.SRV3242-P1-C9-T2-S4
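To record which VIOS and backing device currently serve a vNIC, for example before and after a failover test, the Server Information section can be reduced to one line. A sketch with a hypothetical `vnic_server_info` helper, assuming the field labels shown above; if a label appears more than once, the last occurrence wins:

```shell
#!/bin/sh
# Hypothetical helper: reads "entstat -d entX" output on stdin and prints
# "<hosting VIOS> <backing device>" from the Server Information section.
vnic_server_info() {
    awk -F': *' '
        /^[[:space:]]*LPAR Name:/      { vios = $2 }
        /^[[:space:]]*Backing Device:/ { dev = $2 }   # does not match "Backing Device Location:"
        END { print vios, dev }'
}

# Example on the client LPAR:
#   entstat -d ent1 | vnic_server_info
```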

You can also use the command line, see the example:

$ chhwres -r virtualio -m MY_SYS -o a -p mash_lpar --rsubtype vnic -a "\"backing_devices=sriov/S1-VIOS1//8/1/10/1,sriov/S1-VIOS2//4/0/10/20\""

1.6.3 vNIC failover setup with NIB

If you want even more redundancy, you can combine vNIC failover and NIB in one setup.

All you need is the minimum prerequisites for vNIC failover:

- VIOS Version 2.2.5

- System Firmware Release 860.10

- HMC Release 8 Version 8.6.0

- AIX 7.1 TL4 or AIX 7.2

Then proceed with the vNIC failover setup (using either the HMC Enhanced GUI or the chhwres command line), specifying both hosting VIO servers, the capacity percentages, the backing devices, and the failover priorities.

Once that is done and AIX is running on the LPAR, create an EtherChannel (NIB) on top of the vNIC adapters you created.

1.7 Pulling vNIC configuration

After creating the vNIC, you can display its configuration:

-> From VIOS:

$ lsmap -all -vnic

Name          Physloc                       ClntID ClntName    ClntOS
------------- ----------------------------- ------ ----------- ------
vnicserver0   U8608.66E.21ABC7W-V1-C32897   3      vnic_lpar   AIX

Backing device:ent14
Status:Available
Physloc:U76D7.001.KIC9871-P1-C9-T2-S6
Client device name:ent1
Client device physloc:U8608.66E.21ABC7W-V3-C3
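On a VIOS hosting many vNIC servers, this listing can be condensed to one line per server. A sketch with a hypothetical `vnic_map_summary` helper that reads saved lsmap output on stdin; it assumes the layout shown above (server row first, all columns populated, then the Backing device and Client device name lines) and would need adapting if the layout differs on your VIOS level:

```shell
#!/bin/sh
# Hypothetical helper: reads "lsmap -all -vnic" output on stdin and prints
#   <vNIC server> <client LPAR name> <backing device> <client device>
vnic_map_summary() {
    awk '
        # Server row columns: Name Physloc ClntID ClntName ClntOS
        /^vnicserver/ { srv = $1; client = $4 }
        /^Backing device:/ { sub(/^Backing device:/, ""); bdev = $0 }
        /^Client device name:/ {
            sub(/^Client device name:/, "")
            print srv, client, bdev, $0
        }'
}
```

For the output above, it would print a single line: vnicserver0 vnic_lpar ent14 ent1.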

-> From client LPAR:

# lsdev -Cc adapter

ent0 Available Virtual I/O Ethernet Adapter (l-lan)

ent1 Available Virtual NIC Client Adapter (vnic)

Thank you very much for taking the time to read through this guide.

I hope it has been not only helpful but an easy read. If you have questions or you feel you found any inconsistencies, please don’t hesitate to contact me at:

ahdmashr@eg.ibm.com

Ahmed (Mash) Mashhour

Document Location

Worldwide


Document Information

Modified date:

01 November 2020

UID

ibm16357843