How To

Summary

Lots of information to help you make the move to VIOS 3.1 quickly and accurately including hints and tips.

Objective

Helping everyone to upgrade their VIOS in function, performance and reliability.

Environment

All Power servers running PowerVM and Virtual I/O Servers

Steps

If you don't know "VIOS" is short for Virtual I/O Server, then you are probably on the wrong page.

REALLY NEW NEWS - May 2023

- Latest versions are

- VIOS 3.1.3

- VIOS 2.2.6.61 - The latest VIOS 2.2.6 version is always the recommended version to upgrade to get to 3.1.

This version is referred to in this article as 2.2.6.<latest-version>.

- VIOS Supported Software that you are allowed to install and not invalidate your IBM VIOS Support are here

- Web page like: VIOS Supported Applications (link fixed)

Damien Ferrand (IT Consultant and VIOS administrator) points out

- If upgrading from 2.2.6.41 to 3.1.0.10, the VIOS viosupgrade command does not work. Upgrade to 3.1.0.21 (the current latest VIOS 3 release). The problem is that the newer VIOS 2.2.6.41 has some package versions higher than the older 3.1.0.10.

- My rule-of-thumb is: Always upgrade straight to the latest VIOS 3.1

- Thanks to Damien for the excellent feedback

SDDPCM is removed during the migration to VIOS 3.1. It is a good opportunity to remove this old driver.

- Prerequisite OS Levels

- AIX 7.1 TL3

- AIX 7.2 any TL

- VIOS 2.2.4.0

- Comment

- The AIXPCM driver improvements include all significant functionality provided by SDDPCM.

- The AIXPCM driver has the advantage over SDDPCM of not requiring specific maintenance.

- All updates are applied as part of the Operating System Maintenance.

- More information:

- On the recommended Multi-path Driver to use on IBM AIX and VIOS When Attached to SVC and Storwize storage

- https://www-01.ibm.com/support/docview.wss?uid=ssg1S1010218

- Latest Multipath Subsystem Device Driver User's Guide:

Note: The term ![]() is used in this article because using the "m" word by itself triggers the IBM "big brother" thought police alarms!

VIOS 3.1 - New Functions

- See Bob G Kovacs session on VIOS 3.1

- Slide deck of 35 slides and Replay of 90 minutes

- POWER/AIX Virtual User Group on 25 October 2018

- http://ibm.biz/powersystemsvug

- The big item is that the VIOS moves to AIX 7.2 TL3; smaller features include:

- The AIX update means the latest kernel and device drivers and so better performance

- iSCSI disks are supported and presented to client LPARs as vSCSI disks

- General clean up by removing unused packages for a smaller foot print and faster upgrades

- Shared Storage Pool further RAS improvements like repository disk auto replace

Upgrading to VIOS 3.1

- See Nigel Griffiths session on Upgrading to VIOS 3.1

- Slide deck of 100 slides and Replay of 90+ minutes from YouTube

- Power Virtual User Group on 21 November 2018

- http://tinyurl.com/PowerVUG

A summary of the key charts from the Upgrading to VIOS 3.1 Webinar is at the end of this article in the Questions and Answers section.

Upgrading strategies 1 - Don't Upgrade at All.

- New POWER9 server?

- Start on VIOS 3.1 & set up as normal

- Zero risk upgrade plan

- Evacuate the Server with Live Partition Mobility (LPM) -> no LPARs on the Server = no risk

- Install a fresh VIOS 3.1 then set up as normal

- Then, use LPM to move all the LPARs back to the original server

- Shared Storage Pool (SSP)

- Use LPM on the SSP LPARs from the server

- Remove this server's VIOS from SSP (cluster -rmnode)

- Perform a fresh installation of VIOS 3.1 then set up the configuration as normal

- Add server back into the SSP (cluster -addnode)

- Next, use LPM to move the LPARs back

- TESTED AND GOOD TO KNOW: A newly installed VIOS 3.1 can join a Shared Storage Pool of VIOS running 2.2.6.LATEST

VIOS 3.1 Pre-req

- POWER9, POWER8, and POWER7+ (known as the D models) all fully supported

- POWER7, POWER5, and older servers are not supported (it probably works fine, as there is no code to block that working) and are not tested by IBM

- VIOS 2.2.5 - one more year of support

- VIOS 2.2.4 - support ends Q4 2018 - update/upgrade "As soon as possible" and upgrade to VIOS 3.1 while you have one of your dual VIOS down

- VIOS 2.2.3 or earlier - a dumb idea

Terminology

- “Update” is a minor improvement 2.2.5 to 2.2.6

- “Upgrade” is a major improvement 2.2.6 to 3.1

- VIOS 2.x.x.x based on AIX 6.1. For example, VIOS 2.2.6.LATEST = AIX 6.1 TL5 sp9 + VIOS packages

- VIOS 3.1 based on AIX 7.2 TL3 sp2

- An AIX 6.1 to AIX 7.2 upgrade requires a complete disk reformat and overwrite installation.

- The AIX upgrade is similar to upgrading from VIOS 2 to VIOS 3

“Traditional” Upgrade - the high-level process

All VIOS upgrades (VIOS 2 to VIOS 3) involve

- Back up the VIOS metadata and configuration

- Save the backup remotely

- Back up any non-VIOS application data files - save to a remote place

- Are the LPAR disk files in the rootvg file system?

- Warning: it is easy to forget the vDVD Optical Library and any LPAR virtual disks that are based on a rootvg LV or are file-backed

- Install from VIOS 3.1 mksysb? This operation rebuilds the rootvg and file systems

- Possibly on an alternative disk

- Get the VIOS backup & restore the metadata and configuration

- Reinstall any non-VIOS application code and data

- If the VIOS is using the SSP, then extra steps are required

Note: various VIOS or NIM commands automate some parts of the process.

NIM and new upgrade commands

- Preparation: NIM server must be at AIX 7.2 TL3 sp2+

- Two New commands to help us upgrade

- The command viosupgrade. There are two commands with the same name!

- On NIM server (when you update to AIX 7.2 TL3 sp1+)

- On VIOS (when you update to VIOS 2.2.6.51+)

- Each has a different syntax.
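Because the VIOS-side viosupgrade command only arrives with a recent 2.2.6 update, a quick level check before you start can save a failed attempt. The following is a minimal sketch, not an IBM tool: the function name `level_at_least` is made up for this illustration, the 2.2.6.51 threshold is just the level quoted above, and it assumes purely numeric dotted levels such as the ones the ioslevel command prints.

```shell
# Hypothetical helper: succeed if dotted level $1 is >= dotted level $2.
# Fields are compared numerically, left to right, so 2.2.6.9 < 2.2.6.41.
level_at_least() {
    have=$1
    need=$2
    while [ -n "$have" ] || [ -n "$need" ]; do
        h=${have%%.*}
        n=${need%%.*}
        # A missing trailing field counts as 0 (3.1 equals 3.1.0.0)
        [ -n "$h" ] || h=0
        [ -n "$n" ] || n=0
        if [ "$h" -gt "$n" ]; then return 0; fi
        if [ "$h" -lt "$n" ]; then return 1; fi
        # Drop the field that was just compared
        case $have in *.*) have=${have#*.} ;; *) have= ;; esac
        case $need in *.*) need=${need#*.} ;; *) need= ;; esac
    done
    return 0
}

# Example (on the VIOS):
# level_at_least "$(ioslevel)" 2.2.6.51 || echo "Update the VIOS first"
```

The comparison is done field by field because a plain string compare would rank 2.2.6.9 above 2.2.6.41.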

IBM Docs Manual pages

- Top Level of the IBM Docs page for VIOS migration:

https://www.ibm.com/docs/en/power9?topic=migrating-virtual-io-server-by-using-viosupgrade-command

- Vague Introduction to the installation methods

https://www.ibm.com/docs/en/power9?topic=command-methods-upgrading-virtual-io-server

- Non-SSP (Shared Storage Pool)

https://www.ibm.com/docs/en/power9?topic=command-upgrading-virtual-io-server-non-ssp-cluster

- SSP (Shared Storage Pool)

https://www.ibm.com/docs/en/power9?topic=command-upgrading-virtual-io-server-ssp-cluster

- Migration levels

https://www.ibm.com/docs/en/power9?topic=command-supported-virtual-io-server-upgrade-levels

- Miscellaneous

https://www.ibm.com/docs/en/power9/9040-MR9?topic=command-miscellaneous-information-about-upgrading-virtual-io-server

- Unsupported

https://www.ibm.com/docs/en/power9/9080-M9S?topic=command-unsupported-upgrade-scenarios-vios-upgrade-tool

- What's new in Virtual I/O Server commands

https://www.ibm.com/docs/en/power9?topic=server-whats-new-in-virtual-io-commands

- Virtual I/O Server release notes – including installation from USB Memory device or Flash device key

https://www.ibm.com/docs/en/power9?topic=POWER9/p9eeo/p9eeo_ipeeo_main.htm

- USB Memory key or Flash key installation

- Duff minimum size for a VIOS

- VIOS viosupgrade command in VIOS 2.2.6.LATEST

- NIM viosupgrade command on the NIM AIX 7.2 TL3 + sp

- https://www.ibm.com/docs/en/aix/7.2?topic=v-viosupgrade-command

- That one is hard to find – it is in the commands reference for AIX 7.2

iSCSI starter pack

- iSCSI intro manual pages

- https://www.ibm.com/docs/en/aix/7.2?topic=protocol-iscsi-software-initiator-software-target

- Configuring iSCSI software initiator

- Configuring the iSCSI software target

- iSCSI software initiator considerations

- iSCSI software target considerations

- iSCSI hints look at these manual pages

- lsiscsi

- mkiscsi

- chiscsi

- rmiscsi

Download the VIOS 3.1 installation software

- Upgrade (Install) images come from ESS & you have to prove entitlement = PowerVM license/support

- ESS = Entitled Systems Support at IBM ESS at https://www.ibm.com/servers/eserver/ess/index.wss

Preparing for NIM

- Getting hold of the mksysb file is a pain!

- You need to extract the mksysb image from the .iso

- Just to make life interesting it is in 2 parts one on each of the DVDs

- Use the cat command to join them together

- End up with 3.1.0.0 then have to manually add the service pack 3.1.0.10

- Flash image is already at level 3.1.0.10 (note level 3.1.0.10 includes the first service pack)

- I could not extract the mksysb by loopmounting the .iso on AIX

- Errors: “can’t open the readable file!”

- But I could extract on a Linux loop-mount (Ubuntu 18.04 on Power)

- UPDATE: The flash .iso is udfs format (for USB memory key or thumb drive) and not the older CD/DVD cdrfs format.

Thanks to Marc-Eric Kahle/Germany/IBM and FrankKruse

New note: this udfs option is a new-ish AIX feature. My AIX 7.1 TL2 can't mount the udfs format but my AIX 7.2 TL3 can:

loopmount -i VIOS_31010_Flash.iso -m /mnt -o "-V udfs -o ro"

Script to extract the mksysb from the single Flash .iso (at your own risk = don't blindly run this command)

    # On AIX 7.2 TL3 or above (definitely fails on AIX 7.1 TL2)
    # Note: udfs for a Flash image
    loopmount -i flash.iso -o "-V udfs -o ro" -m /mnt
    DIR=/usr/sys/inst.images
    ls -l /mnt/$DIR/mksysb_image    # display the file
    cp /mnt/$DIR/mksysb_image VIOS31_mksysb_image

Script to extract the two DVDs .iso to make a single mksysb (at your own risk = don't blindly run this command)

    # Assuming the DVDs are in this directory and with these names
    DVD1=/tmp/dvdimage.v1.iso
    DVD2=/tmp/dvdimage.v2.iso
    mkdir /mnt1
    mkdir /mnt2
    # Watch out for those double quotes and "-" characters. Note: cdrfs for a DVD
    loopmount -i $DVD1 -o "-V cdrfs -o ro" -m /mnt1
    loopmount -i $DVD2 -o "-V cdrfs -o ro" -m /mnt2
    df    # to show they got mounted OK
    DIR=/usr/sys/inst.images
    ls -l /mnt1/$DIR/mksysb* /mnt2/$DIR/mksysb*    # display the files
    cat /mnt1/$DIR/mksysb* /mnt2/$DIR/mksysb* >VIOS31_mksysb_image
    umount /mnt1
    umount /mnt2
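After joining the two DVD parts with cat, it is worth confirming that the result was not cut short, for example by a full file system. The following is a minimal sketch under stated assumptions: the function name `join_mksysb_parts` is hypothetical, and the check simply compares the cksum of the parts streamed in order with the cksum of the joined file.

```shell
# Hypothetical helper: join mksysb parts in order and verify the result.
# Usage: join_mksysb_parts OUTPUT PART1 PART2 ...
join_mksysb_parts() {
    out=$1
    shift
    # Refuse to start if any part is missing or unreadable
    for part in "$@"; do
        [ -r "$part" ] || { echo "cannot read $part" >&2; return 1; }
    done
    cat "$@" > "$out" || return 1
    # cksum prints "checksum bytecount" for data read from stdin
    expected=$(cat "$@" | cksum)
    actual=$(cksum < "$out")
    if [ "$actual" != "$expected" ]; then
        echo "checksum mismatch for $out" >&2
        return 1
    fi
    echo "$out OK (cksum and size: $actual)"
}

# Example, with the mount points used in the script above:
# join_mksysb_parts VIOS31_mksysb_image /mnt1/$DIR/mksysb* /mnt2/$DIR/mksysb*
```

The parts are read a second time for the check, which costs a few minutes on a multi-gigabyte image but avoids shell arithmetic on large byte counts.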

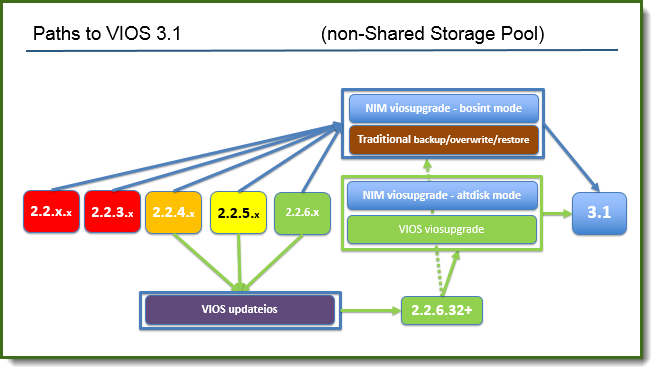

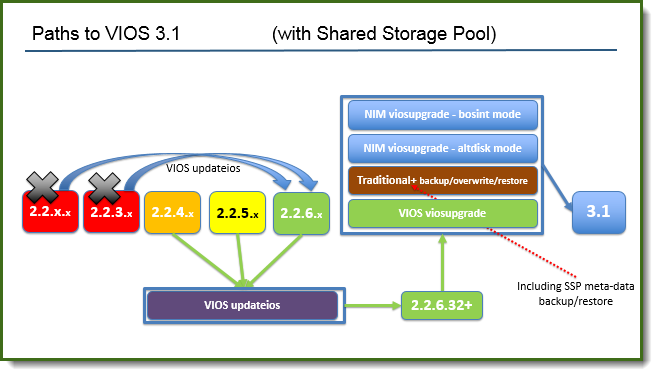

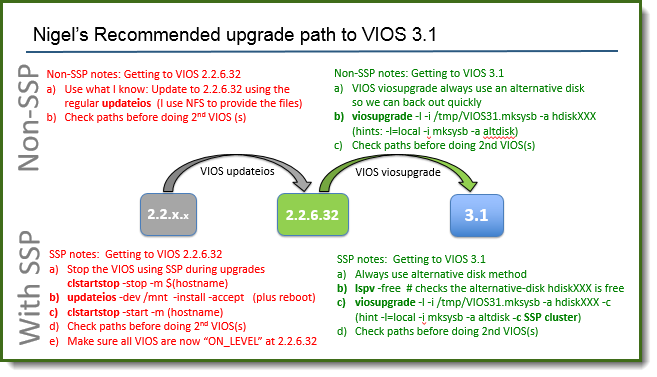

Pathways to VIOS 3.1

VIOS viosupgrade command

- Read the manual page on IBM Docs

- Mandatory: an unused disk and VIOS 2.2.6.LATEST

$ viosupgrade -l -i image_file -a mksysb_install_disk [ -c ] [-g filename ]

First parameter is a lowercase L for “local”

For humans:

- Non-SSP:

viosupgrade -l -i /tmp/vios31.mksysb -a hdisk33 -g /tmp/filelist

- With SSP:

viosupgrade -l -i /tmp/vios31.mksysb -a hdisk33 -g /tmp/filelist -c

- -g file of file names that get copied for you to the new disk = neat!

- -c = cluster = Shared Storage Pool

- List the state of the upgrade with:

viosupgrade -l -q

For altinst_rootvg "already exists" errors

These commands are useful:

# alt_rootvg_op -X altinst_rootvg

Bootlist is set to the boot disk: hdisk0 blv=hd5

Or

$ exportvg altinst_rootvg

$ importvg -vg rootvgcopy hdisk1

For disk busy or in use errors

Be careful - it might be true!

These commands are useful.

List all the disks and repository in use by a Shared Storage Pool.

Do not fiddle or use these disks.

$ lscluster -d

List the disks that are definitely not in use.

$ lspv -free

ODM could be tracking their previous use or the disk header is indicating its use

If you have POWER9 NVME, try

$ lspv -unused

One option (as the root user) is to zap the front of the disk with cleandisk, which is safer than the dd command.

# cleandisk -?

Read and you decide how to screw up your disks!

Also, worth knowing about

# chpv -C hdisk3

Key:

- -C option: Clears the owning volume manager from a disk. This flag is only valid for the root user. This command could fail to clear LVM as the owning volume manager if the disk is part of an imported LVM volume group.

Regression Test – for the virtual networks & virtual disks

This section generates the details that you need to check.

The initial list is:

- Virtual disks:

lsmap -all

- Virtual networks:

lsdev | grep SEA ; lsdev -attr -dev <your-SEA's>

- That the VIOS is on the network, and its IP settings (as root):

ifconfig -a

- Useful scripts that the system admin team use (like my 'n tools' for SSP)

- Set up the automatic viosbr backup, sending the backups to a remote server

- Note the padmin users and password.

Get the important files moved over for you

The VIOS viosupgrade command has a nice -g filename option. The file contains the full file names of the files that you would like it to copy to the alternative disk once it is installed with VIOS 3.1. This saves time later.

The files get copied to /home/padmin/backup_files/<then the full file name you requested>

Here are my suggestions

- /etc/motd

- /etc/environment

- /etc/netsvc.conf

- /etc/resolv.conf

- /etc/hosts

- /etc/inittab

- /etc/ntp.conf

- /etc/group

- /etc/passwd

- /etc/security/passwd
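The list for the -g option can be built by a small script so that a typo or a file that does not exist on this VIOS is spotted before the upgrade. This is only a sketch: the function name `build_filelist` is invented here, and it assumes that the file list is plain text with one full path per line.

```shell
# Hypothetical helper: write the existing files among the candidates to OUT,
# one full path per line (assumed format for the -g file list).
# Usage: build_filelist OUT FILE...
build_filelist() {
    out=$1
    shift
    : > "$out" || return 1
    for f in "$@"; do
        case $f in
            /*) ;;    # full path, as the option expects
            *)  echo "skipping relative path: $f" >&2
                continue ;;
        esac
        if [ -f "$f" ]; then
            echo "$f" >> "$out"
        else
            echo "skipping missing file: $f" >&2
        fi
    done
}

# Example with some of the suggestions above (run as root):
# build_filelist /tmp/filelist /etc/motd /etc/environment /etc/netsvc.conf \
#     /etc/resolv.conf /etc/hosts /etc/inittab /etc/ntp.conf
```

Skipped names go to standard error, so the file list itself stays clean and can be handed straight to viosupgrade -g.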

Output of the following commands:

For padmin user and for the root user:

crontab -l

lsmap -all lsdev lsdev -dev

    for i in $(lsdev -dev hdisk* -field name)    # disks and attributes
    do
        echo ========== $i
        lsdev -dev $i -attr
    done
    for i in $(lsdev -dev en* -field name)    # networks and attributes
    do
        echo ========== $i
        lsdev -dev $i -attr
    done
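To make the later comparison easy, each command's output can be kept in its own file. The following is a minimal sketch: the function name `save_output` and the directory names are made up for illustration, and it assumes that you run it as root (after oem_setup_env), because it uses output redirection, which the restricted padmin shell does not allow.

```shell
# Hypothetical helper: run a command and keep its output for later comparison.
# Usage: save_output DIR LABEL COMMAND [ARGS...]
save_output() {
    dir=$1
    label=$2
    shift 2
    mkdir -p "$dir" || return 1
    # First line records the exact command, the rest is its output
    {
        echo "# $*"
        "$@" 2>&1
    } > "$dir/$label.out"
}

# Example (assumption: as root, padmin commands are run through ioscli):
# save_output /tmp/pre_upgrade lsmap /usr/ios/cli/ioscli lsmap -all
# save_output /tmp/pre_upgrade ifconfig ifconfig -a
```

Copy the resulting directory off the VIOS before the upgrade, the same as the viosbr backup.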

If you have Nigel's SSP tools:

- /home/padmin/ncluster

- /home/padmin/nlu

- /home/padmin/nmap

- /home/padmin/npool

- /home/padmin/nslim

Save any nmon or topas output files to compare later for a performance check

tar cvf /tmp/var_perf_daily.tar /var/perf/daily/*

Double check for any local useful script in:

- /home/padmin

- /usr/lbin

Not for use but for reference:

- /etc/tunables/nextboot

- /etc/tunables/lastboot

With an SSP

Make sure you have a running SSP cluster to join back to after you run the VIOS viosupgrade command on each VIOS.

NIM viosupgrade

To perform the bosinst type of upgrade operation, use the following syntax:

viosupgrade -t bosinst -n hostname -m ios_mksysbname -p spotname {-a RootVGCloneddisk: ... | -r RootVGInstallDisk: ...| -s} [-b BackupFileResource] [-c] [-e resources: ...] [-v]

To perform the altdisk type of upgrade operation, type the following command:

viosupgrade -t altdisk -n hostname -m ios_mksysbname -a rootvgcloneddisk [-b BackupFileResource] [-c] [-e resources: ...] [-v]

KEY

-t bosinst (overwrites the rootvg)

-t altdisk (makes the rootvg on a new alternative disk and leaves the original rootvg untouched and called old_rootvg)

-n redvios2 -m VIOS31_mksysb -p VIOS31_spot {-a RootVGCloneddisk: ... | -r RootVGInstallDisk: ...| -s} [-b BackupFileResource] [-c] [-e resources: ...] [-v]

-c This is an SSP VIOS

-v Validate the install parameters and VIOS state

On the NIM server

viosupgrade -v

Key: v = validate

Nice feature to confirm that you have everything set up right before the upgrade.

It makes a dozen checks or so and the details are saved in a log file.

    # viosupgrade -v -t altdisk -n redvios2 -m vios31010_mksysb -a hdisk3 -c
    Welcome to viosupgrade tool.
    Triggered validation..
    Check log files for more information,
    Log file for 'redvios2' is: '/var/adm/ras/ioslogs/redvios2_10289506_Fri_Nov_16_10:29:13_2018.log'.
    Please wait for completion..
    -----------------------------------
    Validation successful for VIO Servers:
    redvios2
    -----------------------------------
    # viosupgrade -v -t bosinst -n redvios2 -m vios31010_mksysb -p vios31010_spot -a hdisk3 -c
    Welcome to viosupgrade tool.
    Triggered validation..
    Check log files for more information,
    Log file for 'redvios2' is: '/var/adm/ras/ioslogs/redvios2_10289514_Fri_Nov_16_10:41:06_2018.log'.
    Please wait for completion..
    -----------------------------------
    Validation successful for VIO Servers:
    redvios2
    -----------------------------------
    #

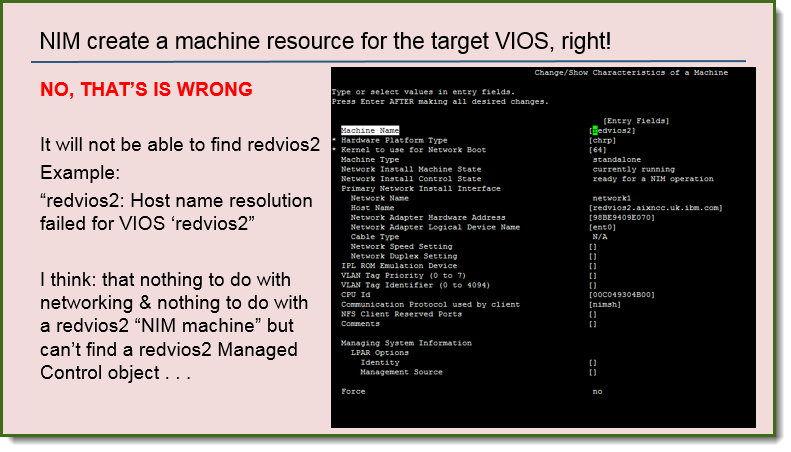

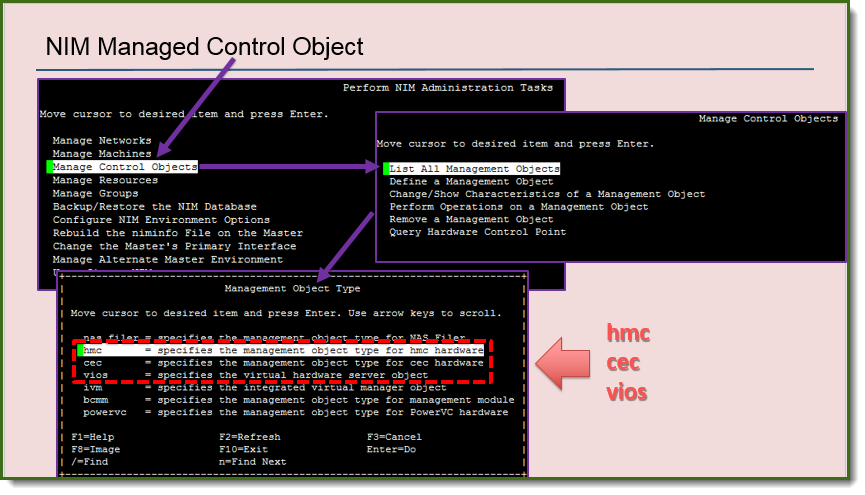

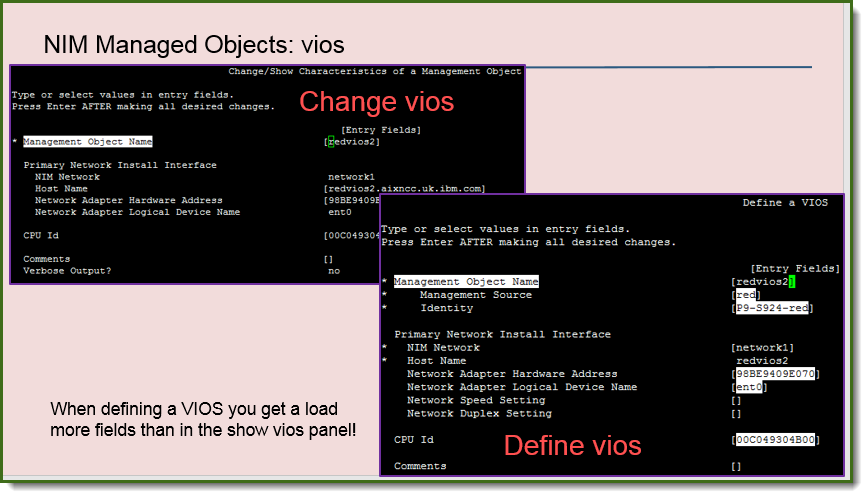

NIM Resources Required for the VIOS

On a regular NIM install of a new VIOS, you create a NIM machine for the VIOS. That does not work for a VIOS upgrade.

You need to define managed resources:

- HMC,

- CEC (server) and

- The VIOS as a VIOS (not a NIM machine resource)

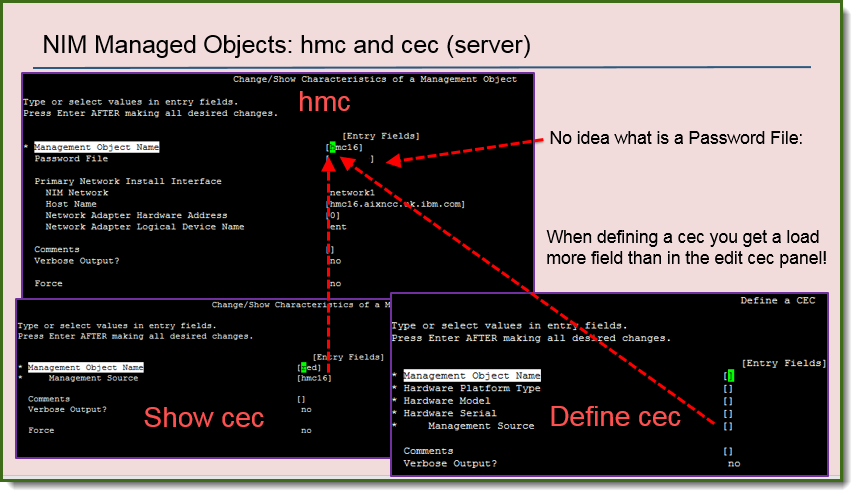

UPDATE: Comment on the following statement: "No idea what a Password file is?"

- Well, I have a friend who does, called Marc-Eric Kahle in Germany

- This Password file is a NIM feature to allow remotely getting a console from the NIM server. Not tried this method myself yet.

- You need to install dsm.core on the NIM server and run:

# dpasswd -f /export/nim/passwd/7063CR1hmc_passwd -U hscroot -P abc1234

Password file is /export/nim/passwd/7063CR1hmc_passwd

Password file created.

- Download dsm.core?

See this link for details: http://www-01.ibm.com/support/docview.wss?uid=isg1fileset337201615

To get a VIOS adopted by a NIM server, on the VIOS as padmin run

remote_management [ -interface Interface ] NIMmaster

remote_management -disable

- To enable remote_management by the NIM server (here called nim32), type:

$ remote_management -interface en0 nim32

nimsh:2:wait:/usr/bin/startsrc -g nimclient >/dev/console 2>&1

0513-059 The nimsh Subsystem has been started. Subsystem PID is 11337892.

- To disable remote_management, type:

remote_management -disable

The NIM viosupgrade command can then find the named VIOS as a NIM resource and not a NIM machine.

Log files - just in case

- NIM viosupgrade details the log file that it is using

- This command outputs the current or final state:

viosupgrade -l -q

- Log files for debug purposes:

- On the NIM server (viosupgrade command from NIM):

- viosupgrade command logs: /var/adm/ras/ioslogs/*

- NIM command logs: /var/adm/ras/nim*

- On the VIOS (viosupgrade):

- viosupgrade command logs: /var/adm/ras/ioslogs/*

- viosupgrade restore logs: /home/ios/logs/viosup_restore.log

- viosupgrade restore logs: /home/ios/logs/viosupg_status.log

- viosbr backup logs: /home/ios/logs/backup_trace*

- viosbr restore logs: /home/ios/logs/restore_trace*

Fifth method: Disruptive VIOS 3.1 upgrade with SSP

The alternative Whole SSP down method Highly Disruptive! Not for Production

- Upgrade to VIOS 2.2.6.<latest-version>

- On each VIOS: viosbr -backup - Save the files off the VIOS

- Stop ALL LPARs

- Stop ALL VIOS in the entire pool

- Then, for each SSP VIOS in turn

- Upgrade VIOS with complete overwrite

- viosbr -recover -> including SSP backup

Nigel’s Ultra blunt opinion about Production Upgrades

- In my humble opinion:

- Fresh installation VIOS 3.1 for new servers – Do not upgrade your Production to VIOS 3.1 until mid Q1 2019 to allow any bug + fixes to arrive.

- Why?

- Messing up your Production VIOS & its LPARs is painful

- In the meantime run tests on:

- The upgrade process

- Prepare to save and later reinstall your non-VIOS applications & data

- New features of VIOS 3.1 – in particular the new iSCSI features (if you like iSCSI)

- I am sure once at VIOS 3.1 that it all works fine

VIOS Tuning Options

- Any AIX 6.1 tuning option disappears during the installation and upgrade

- So all the old mistakes are wiped out = a good thing!

- AIX development decided to reset the tuning defaults in AIX 7.1 & 7.2 for best performance

- AIX 7.2 tuning options are different and more of them

- AIX6.1 tuning does NOT apply to AIX 7.2

- Do not apply your old VIOS 2 tune-up script on VIOS 3

- You may:

- Monitor performance for a week to double check

- Run the VIOS advisor: “part command” to see what it suggests

- If you have a problem: Raise a PMR - have a VIOS snap and perfPMR ready

- Don’t start randomly adding AIX 6 tuning

Summary: VIOS 3.1 Upgrade in practice – in general

- New Features: AIX 7.2, RAS, performance + iSCSI LUN to vSCSI

- Nice to have but not massive functional differences 2.2.6.<latest-version> to 3.1

- Everything works the same as before

- Clean slate: fresh overwrite installation VIOS flushes out the “crufty”

- It is your job to handle the extra applications (code & data)

- You run Dual VIOS for RAS & to make upgrades simple

- Don’t upgrade at peak loads

- Think about a simple regression test before you start.

- "Backup, backup, and backup"

- At least two of the following methods: disk clone, mksysb of the whole VIOS, viosbr, alternative disk installation

- Upgrading any production server - always practice before you start.

Summary: VIOS 3.1 Upgrade in practice – on the day

- Don’t do it – fresh installation a new server or evacuate old servers first

- Regardless of the method: viosbr -backup & save the file off the VIOS

- Always install to an alternate disk

- Don’t forget the non-VIOS apps (code and data) and rootvg virtual disk or virtual DVD

- Preferred methods [[in Nigel’s opinion]]

- For SSP users, always use method b)

- updateios to version 2.2.6.<latest-version>, then NFS the VIOS 3.1 mksysb to the target VIOS & run the VIOS viosupgrade command

- Traditional Manual is still a good method: back up, then scratch installation of the VIOS by using DVD, USB flash drive, HMC, or NIM, and recover the metadata

- NIM viosupgrade -t bosinst (for SSP get to 2.2.6.<latest-version> first)

- NIM viosupgrade -t altdisk - Note: not tested successfully by Nigel yet (out of time)

- Run the regression test commands & compare with the saved output
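Comparing the regression test output by eye gets tedious with more than a couple of commands. The following is a minimal sketch, assuming the output was saved with one file per command in one directory before the upgrade and in another directory afterwards; the function name `compare_outputs` and that directory layout are assumptions for this example.

```shell
# Hypothetical helper: compare saved command output from before and after.
# Prints one line per file and returns non-zero if anything differs.
# Usage: compare_outputs BEFORE_DIR AFTER_DIR
compare_outputs() {
    before=$1
    after=$2
    rc=0
    for f in "$before"/*; do
        [ -f "$f" ] || continue
        name=${f##*/}
        if [ ! -f "$after/$name" ]; then
            echo "MISSING  $name"
            rc=1
        elif cmp -s "$f" "$after/$name"; then
            echo "SAME     $name"
        else
            echo "CHANGED  $name"
            rc=1
        fi
    done
    return $rc
}

# Example:
# compare_outputs /tmp/pre_upgrade /tmp/post_upgrade
```

A CHANGED line is a prompt to look closer with diff, not proof of a problem: hdisk numbers, for example, can legitimately change across the upgrade.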

Post upgrade Checks - NEW SECTION

On the VIOS - Blue parts are new and particularly for SSP users.

- Check the VIOS time zone with cfgassist. If you set it, you have to reboot.

- Check the VIOS date - set it with cfgassist

- Set up any network time protocol server (ntp) service.

- Set up /etc/netsvc.conf especially.

- When using SSP, a missing DNS can stop SSP communication. To search locally for the hostname before DNS, for example:

hosts = local, bind

- Set up /etc/hosts. When using SSP, include all the VIOS members of the SSP.

- Check the new VIOS level:

ioslevel

- Check the paging space size and layout (as the root user):

lsps -a

- Check file system sizes - some, like /tmp and /home, can be back to the default size:

df -g

- Check users (as the root user):

lsuser ALL

- Your original Virtual Optical Library is gone - you need to re-create it and get the .iso images restored

- Check the tuning options (as the root user):

ioo -L

lvmo -L

nfso -L

no -L

raso -L

schedo -L

vmo -L
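The name resolution order is easy to forget after a fresh installation, so it can be worth scripting the check. This is a sketch only: the function name `hosts_local_first` is invented, and it assumes the simple "hosts = ..." line format shown in the example above.

```shell
# Hypothetical check: succeed if FILE has an active "hosts" line whose first
# entry is "local" (that is, /etc/hosts is searched before DNS).
# Usage: hosts_local_first FILE
hosts_local_first() {
    awk '
        /^[ \t]*#/ { next }                  # ignore comment lines
        /^[ \t]*hosts[ \t]*=/ {
            sub(/^[^=]*=[ \t]*/, "")         # keep only the value
            sub(/[ \t]+$/, "")               # drop trailing blanks
            split($0, order, /[ \t]*,[ \t]*/)
            if (order[1] == "local") found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$1"
}

# Example:
# hosts_local_first /etc/netsvc.conf || echo "Check /etc/netsvc.conf"
```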

On your VIOS client LPARs (virtual machines):

- Check your disk paths:

lspath

- Important to do this BEFORE upgrading your second VIOS of a pair

- If not enabled, enable your paths as the root user

lspath | awk '{print "chpath -s enable -l " $2 " -p " $3 }' | ksh
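The one-liner above issues a chpath for every path, including those that are already enabled. The following is a slightly more cautious sketch that only generates commands for paths not shown as Enabled, so you can review the list before running it. The function name `gen_chpath_cmds` is made up for this example, and it assumes the default three-column lspath output (status, device, parent).

```shell
# Hypothetical filter: read lspath output (status name parent) on stdin and
# print a chpath command for every path that is not already Enabled.
gen_chpath_cmds() {
    awk '$1 != "Enabled" && NF >= 3 {
        print "chpath -s enable -l " $2 " -p " $3
    }'
}

# Review first, then execute:
# lspath | gen_chpath_cmds
# lspath | gen_chpath_cmds | ksh
```

Paths in other states, such as Missing or Defined, may need more than chpath. That is outside this sketch.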

New section: Hard-won experience

Disk Renumbering

- The hdisk order in AIX and VIOS is determined by the order of discovery at the initial installation time, and disks added later get higher hdisk numbers

- VIOS 3.1 is a fresh installation

- So if you added disks after the initial VIOS 2 installation time - expect the hdisks to be reordered during the upgrade

- The viosupgrade command knows this and deals with the reorder issue but you could have a shock like you asked the command to use alternative disk hdisk38 but it becomes hdisk6

- If you (for example) run viosupgrade -l -a hdisk2 . . .

- Once you boot the VIOS 3.1, the old hdisk2 could be still hdisk 2, or maybe a different hdisk number - this behavior is expected.

- Unless you have a simple low number of internal disks from the original install and no update history - expect a different hdisk number.
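One way to keep track of renumbered disks is to match them by PVID instead of by hdisk name. The following is a minimal sketch, assuming you saved the lspv output to a file before the upgrade and again afterwards, and that the disks you care about have a PVID. The function name `show_renumbered` and the file names are hypothetical.

```shell
# Hypothetical helper: given two saved lspv listings (name, PVID, ...),
# print "PVID old_name -> new_name" for every disk whose name changed.
# Usage: show_renumbered BEFORE_FILE AFTER_FILE
show_renumbered() {
    awk '
        $2 == "none" || $2 == "PVID" { next }   # no PVID, or a header line
        NR == FNR { old[$2] = $1; next }        # first file: remember names
        ($2 in old) && old[$2] != $1 {
            print $2, old[$2], "->", $1
        }
    ' "$1" "$2"
}

# Example:
# show_renumbered /tmp/lspv.before /tmp/lspv.after
```

Disks without a PVID cannot be matched this way. For those, compare other attributes by hand.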

VIOS mirror break, upgrade, and remirror

- Many of my VIOS 2 had rootvg mirrored. Breaking the mirror gives you an alternative disk to install on to

- VIOS unmirrorios hdiskX

- Note the named hdiskX is the disk, which remains in use

- Removed the empty disk from rootvg (cfgassist) and then upgraded to VIOS 3.1 naming the unused disk as the alternative disk for the VIOS 3.1 installation

- When happy that VIOS 3.1 is working OK, remirror by using the mirrorios command

- I have many problems as I get "that disk is in use"

- I tried cleandisk, chpv -C hdiskX, creating a VG on it and removing the VG, and so on, but it is not working. If you hit this, create a PMR with IBM Support

VIOS with SSP pairs

- If you have one VIOS of a pair running SSP currently being upgraded and then run the VIOS viosupgrade -l . . . on the second VIOS, you get "Cluster state in not correct" (including the typographical error).

- The translation is: You can't seriously want both VIO Servers of this pair to be down at the same time!

- It is a good check but if you evacuated the server to upgrade the VIOSs, it means you can't do both at the same time.

- As the viosupgrade takes about 10 to 15 minutes on fast disks (SSD or NVMe), it is not too bad. If the first upgrade fails for some reason, at least you get to fix it and learn before you fail the 2nd VIOS.

- Wait until the first VIOS is upgraded, rebooted, and operating in the SSP cluster again.

When you get to VIOS 3.1, I fully expect it to be Rock Solid as normal for new VIOS releases

Questions and Answers - During the Upgrading to VIOS 3.1 Webinar

- This section: not officially reviewed.

- What about application certifications? Is VIOS 3.1 certified by SAP HANA?

- Ask SAP or the application vendor about their certification.

- It is not IBM's responsibility and IBM does not make statements on their behalf.

- Is it still possible to back up VIOS 3.1 using backupios and mksysb and then restore with the NIM (I assume NIM on AIX 7.2 is required)?

- Yes.

- On VIOS 2.2, because it is based on AIX 6.1 it uses SMT-4 (if available on your hardware). Is it beneficial for VIOS 3.1 to enable SMT 4 or even 8 on POWER9?

- Yes.

- The SMT number is set automatically. There is no need to fiddle.

- With VIOS 3.1+ on POWER9, it uses SMT=8.

- Why was IVM removed?

- There is little remaining use of IVM with clients.

- Sure there are some users that liked IVM (for example in the POWER5 days like the p505 1U server).

- IBM can't justify the development and testing costs.

- Some people think IBM is a bottomless pit of money and people time - it is not true.

- We understand IVM users, love it and it is fast.

- Upgrade to the latest VIOS 2.2.6.<latest-version>. Wait 3 years (with support) and then power off the server. It is probably 8 years old by then and overdue for retirement and running unsupported everything.

- Do I need any special license to use VIOS 3.1 on servers where I am currently using VIOS 2.x?

- Your PowerVM license covers the Hypervisor and VIOS and is not version-specific, so you are entitled.

- Is VIOS IFL compatible for POWER 980?

- VIOS is AIX so it does not use IFLs.

- As far as I know, you can have a 100% IFL enabled server and run Linux LPARs as well as VIOS LPARs on it.

- The VIOS, though based on AIX, are not considered AIX LPARs for IFL usage. The VIOSs are in their separate own category.

- I have dual VIOS server (lets say A and B). I want to avoid upgrading (as it is problematic) but I have no LPM nor possibility to create move VIOS partition (due to hardware). I would like to do a fresh installation of VIOS 3.1 on VIOS B. Is it possible to set up SEA failover/NPIV/maybe SSP as on the previous installation so there would be mixed VIOS versions (A - 2.X and B - 3.1) for some time for testing purposes? Is it possible from support and technical point of view?

- Yes, that all works fine.

- Does VIOS 3.1 support vNIC?

- Yes.

- Is LDAP supported on VIOS?

- Yes and not changed with VIOS 3.1

- You have to save the LDAP configuration and recover that yourself but that is like what you do for each new server and new pair of VIOSs.

- The "flash" version has the full mksysb?

- Correct

- If installing from an HMC, can you use the "flash" .iso?

- Yes, you can use the flash .iso using an HMC

- I can't think of a reason why that would not work. BTW - if you plug a USB device into an HMC, you can use the lsmediadev CLI command to see where it is mounted.

- Is it possible to do "alternative disk" installation on and more disk; so I can quickly boot back to 2.2.x?

- Correct. Done by hand or by using any of the three viosupgrade options.

- Don't get confused by the NIM viosupgrade -t altdisk = that -t option name is confusing.

- Before upgrading the VIOS to 3.1, is there any compatibility check we need to do with respect for the SAN?

- VIOS 2.2 and VIOS 3.1 use the SAN in the same way.

- Does this alternative disk installation use a caching disk on the NIM server or NFS - or do we have the choice

- We are not familiar with the "caching disk" method.

- We use the standard NIM method of pushing an mksysb and spot to the client LPAR.

- If I choose NIM viosupgrade -t bosinst mode, is "Rollback" possible?

- Yes, you have an alternative disk fallback. Using viosupgrade -t bosinst with the -a hdiskX option saves the original VIOS disk as a fallback option.

- Apparently, viosupgrade command is in 2.2.6.<latest-version> and running it upgrades the VIOS to 3.1 and AIX to 7.2. So somehow those ~5G of mksysb for fresh installation is in fact inside 2.2.6.<latest-version> itself? Is my question clear?

- Your question is as clear as mud. VIOS 3.1 installation image downloaded from ESS includes AIX 7.2. Just like VIOS 2.2 installation image includes AIX 6.1.

- In VIOS 2.2.6.<latest-version>, you have the viosupgrade command binary but no VIOS 3.1 installation image.

- You have to download the VIOS 3.1 .iso image, extract the mksysb and hand the mksysb file to the viosupgrade command.

- Based on the list of files Nigel's example copied with the -g, I'm expecting that NTP configuration wouldn't be preserved by default either?

- Correct. It is exactly like overwriting the rootvg and recovering the VIOS metadata - that is exactly what it did. Everyone needs to have clear documentation on the additional setup they use . . . because you have to do that for every new POWER server.

- I read in a doc that after upgrade to VIOS 3.1 through "viosupgrade" tool, disk attributes like "reserve_policy" will be set to "no_reserve" and "queue_depth" will be set to "1". If "viosupgrade" tool reboots VIOS at this situation, disk SCSI reservations affects client LPARs and client LPAR ends up in a hung state. How to overcome this situation?

- UPDATE: We checked - the viosbr backup of the metadata seems to include these settings.

- The first boot does not have the settings. Later the backup is restored - including the settings

- The Second boot means that the settings are active.

- So VIOS IP on VLAN is OK, even if VLAN is on the SEA?

- We are not encouraging IP addresses on the SEA.

- VIOS best practice is to have 2 virtual Ethernet adapters per Virtual network in the VIOS.

- One is used to create the SEA (bridge)

- The other one has the IP address on it (for access).

- This configuration increases performance.

- As for the iSCSI on VIOS - wouldn't it be better to use iSCSI LUNs on LPARs directly (AIX/Linux) rather than iSCSI-VIOS-vSCSI-Client? vSCSI would introduce more code layers and could impact performance IMHO. It is like vSCSI vs NPIV.

- iSCSI on the VIOS makes the VIOS client a regular, generic, simpler vSCSI client with no iSCSI hardware requirements.

- IMHO vSCSI has minimal impact.

- You sound like an iSCSI guru - we need to learn more about iSCSI ourselves.

- Is there any way to install automatically any 3rd party MPIO during viosupgrade

- Nope. I guess NIM gurus with a NIM viosupgrade could supply a script to do that.

- The trend for years is moving to AIX MPIO.

- Do we need to download and extract anything (other than the VIOS 2.2.6.<latest-version>) to run the viosupgrade?

- The VIOS viosupgrade command arrives in the VIOS 2.2.6.<latest-version> update.

- There is nothing else to download.

- How to get from the VIOS installation ISO to mksysb 3.1

- See the section "Script to extract the two DVDs .iso to make a single mksysb" - which includes extracting it from the Flash VIOS installation images

- Password for padmin user is reset when upgrade? And reset when restored?

- The padmin password is not in the VIOS metadata backup.

- I just set it on the first login prompt to the previously used password.

- Is the VIOS 3.1 default SMT8?

- Can't imagine why you need to know.

- It is set by the rules for VIOS 3.1. It is set to SMT=8 on my POWER9 server.

- Older POWER hardware that does not support SMT=8 is set depending on the hardware.

- What are the IVM replacement options?

- HMC or a virtual HMC.

- Is there is any compatibility with PowerVC?

- PowerVC communicates with the VIOS using the HMC - all current HMC versions are supported with PowerVC and VIOS.

- Is the IBM Tivoli Monitoring config preserved?

- The viosbr backup command does not include IBM Tivoli Monitoring configuration or data

- Save that data and restore it yourself. This is the same as installation of a new server and new pair of VIOS.

- Or if you are not worried about missing data, follow your fresh installation of VIOS procedure.

- Maybe the command rulescfgset set rules after installation to 3.1

- Yes it sure does - just like on VIOS 2.2

- Is this mksysb image created from latest VIOS3.1 image

- Yes it is extracted from the VIOS 3.1 installation .iso image

- Does it have any HMC minimal version?

- Nope.

- The HMC is dependent much more on the Server system firmware.

- Is there a system firmware minimum level requirements before upgrading to VIOS 3.1?

- Nope, but always use the latest firmware, or the n-1 version if you are conservative.

Additional Information

Other places to find content from Nigel Griffiths IBM (retired)

Document Location

Worldwide

Document Information

Modified date:

07 July 2023

UID

ibm11114113