Technical Blog Post

Abstract

This report describes the test configuration, scenarios, and results, measured as throughput in rows per second, for replicating data from a Db2 for z/OS database into a Kafka landing zone. The source engine runs CDC for z/OS on z/OS, and the target engine runs CDC for Kafka on x86_64 RHEL.

Body

Test Configuration

14aLite test parameters

-DROWS=10000000 -DSUBSCRIPTIONS=1 -DTABLES_PER_SUB=1 -DCOMMIT=100 -DPHASES=I -DMANIPULATE_AND_MIRROR=SEQUENTIAL -DTABLEDEF=ZKAFKA -DCONCURRENCY=65 -DFAST_APPLY_THRESHOLD=10000 -DFAST_APPLY_CONNECTIONS=10

Target engine system parameters

global_max_batch_size=100000

target_queue_max_unit_of_work=1024

target_queue_max_unit_of_work_refresh=1024

target_num_image_builder_threads=16

14aLite is a CDC workload generator that creates the subscriptions and tables and then runs replication. For this test, 10 million rows were inserted, split evenly across the subscriptions, with one table per subscription. Sixty-five transactions ran concurrently, each inserting 100 rows.
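The workload shape implied by these parameters can be worked out with a little arithmetic. The sketch below is illustrative only: the `WorkloadShape` class and its field names are hypothetical, and it assumes that `-DCOMMIT` is the number of rows per transaction and `-DCONCURRENCY` is the number of concurrent transactions, as the description above suggests. How 14aLite actually schedules its transactions is not covered by this report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadShape:
    """Hypothetical helper mirroring the 14aLite parameters listed above."""
    rows: int            # -DROWS
    subscriptions: int   # -DSUBSCRIPTIONS
    tables_per_sub: int  # -DTABLES_PER_SUB
    commit_size: int     # -DCOMMIT (assumed: rows per transaction)
    concurrency: int     # -DCONCURRENCY (assumed: concurrent transactions)

    @property
    def rows_per_table(self) -> int:
        # Rows are split evenly across all tables in all subscriptions.
        return self.rows // (self.subscriptions * self.tables_per_sub)

    @property
    def total_transactions(self) -> int:
        # Each transaction commits commit_size rows.
        return self.rows // self.commit_size

    @property
    def transactions_per_stream(self) -> float:
        # Average number of transactions each concurrent stream would run,
        # assuming the work is spread evenly (an assumption).
        return self.total_transactions / self.concurrency


shape = WorkloadShape(rows=10_000_000, subscriptions=1, tables_per_sub=1,
                      commit_size=100, concurrency=65)
print(shape.rows_per_table, shape.total_transactions,
      round(shape.transactions_per_stream))
```

Under these assumptions, scenario one amounts to 100,000 transactions of 100 rows each, or roughly 1,538 transactions per concurrent stream.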

The following create statement was used for the source table with 10 million rows.

create table LEMON.TB29132PD0 ( CUSTNO decimal(10) not null, STATE char(2), CUST_INSIGHT_ID char(20) not null, SEQ_NO integer not null, ROLEPLAYER char(20) not null, ROLEPLAYER_TYPE char(4) not null, ROLEPLAYER_PTY_ID char(20) not null, ROLEPLAYE_PTY_TYPE char(4) not null, ZONENO decimal(5, 0) not null, EXTERNALSYSTEMID char(10) not null, INSIGHT_END_DATE date not null, APPRAISE_FLAG1 decimal(3, 0) not null, APPRAISE_FLAG2 decimal(3, 0) not null, APPRAISE_FLAG3 decimal(3, 0) not null, APPRAISE_FLAG4 decimal(3, 0) not null, APPRAISE_FLAG5 decimal(3, 0) not null, APPRAISE_FLAG6 decimal(3, 0) not null, APPRAISE_FLAG7 decimal(3, 0) not null, APPRAISE_FLAG8 decimal(3, 0) not null, APPRAISE_FLAG9 decimal(3, 0) not null, APPRAISE_FLAG10 decimal(3, 0) not null, APPRAISE_FLAG11 decimal(3, 0) not null, APPRAISE_FLAG12 decimal(3, 0) not null, APPRAISE_FLAG13 decimal(3, 0) not null, APPRAISE_FLAG14 decimal(3, 0) not null, APPRAISE_FLAG15 decimal(3, 0) not null, APPRAISE_FLAG16 decimal(3, 0) not null, APPRAISE_FLAG17 decimal(3, 0) not null, APPRAISE_FLAG18 decimal(3, 0) not null, APPRAISE_FLAG19 decimal(3, 0) not null, APPRAISE_FLAG20 decimal(3, 0) not null, APPRAISE_VALUE1 char(10) not null, APPRAISE_VALUE2 char(10) not null, APPRAISE_VALUE3 char(10) not null, APPRAISE_VALUE4 char(10) not null, APPRAISE_VALUE5 char(10) not null, APPRAISE_VALUE6 char(10) not null, APPRAISE_VALUE7 char(10) not null, APPRAISE_VALUE8 char(10) not null, APPRAISE_VALUE9 char(10) not null, APPRAISE_VALUE10 char(10) not null, APPRAISE_DATA1 decimal(17, 0) not null, APPRAISE_DATA2 decimal(17, 0) not null, APPRAISE_DATA3 decimal(17, 0) not null, APPRAISE_DATA4 decimal(17, 0) not null, APPRAISE_DATA5 decimal(17, 0) not null, APPRAISE_DATA6 decimal(17, 0) not null, APPRAISE_DATA7 decimal(17, 0) not null, APPRAISE_DATA8 decimal(17, 0) not null, APPRAISE_DATA9 decimal(17, 0) not null, APPRAISE_DATA10 decimal(17, 0) not null, APPRAISE_DATA11 decimal(17, 0) not null, APPRAISE_DATA12 decimal(17, 0) not null, 
APPRAISE_DATA13 decimal(17, 0) not null, APPRAISE_DATA14 decimal(17, 0) not null, APPRAISE_DATA15 decimal(17, 0) not null, APPRAISE_DATA16 decimal(17, 0) not null, APPRAISE_DATA17 decimal(17, 0) not null, APPRAISE_DATA18 decimal(17, 0) not null, APPRAISE_DATA19 decimal(17, 0) not null, APPRAISE_DATA20 decimal(17, 0) not null, APPRAISE_DATA21 decimal(17, 0) not null, APPRAISE_DATA22 decimal(17, 0) not null, APPRAISE_DATA23 decimal(17, 0) not null, APPRAISE_DATA24 decimal(17, 0) not null, APPRAISE_DATA25 decimal(17, 0) not null, APPRAISE_DATA26 decimal(17, 0) not null, APPRAISE_DATA27 decimal(17, 0) not null, APPRAISE_DATA28 decimal(17, 0) not null, APPRAISE_DATA29 decimal(17, 0) not null, APPRAISE_DATA30 decimal(17, 0) not null, APPRAISE_DATA31 decimal(17, 0) not null, APPRAISE_DATA32 decimal(17, 0) not null, APPRAISE_DATA33 decimal(17, 0) not null, APPRAISE_DATA34 decimal(17, 0) not null, APPRAISE_DATA35 decimal(17, 0) not null, APPRAISE_NOTES1 varchar(60) not null, APPRAISE_NOTES2 char(60) not null, APPRAISE_DATE1 date not null, APPRAISE_DATE2 date not null, APPRAISE_DATE3 date not null, APPRAISE_DATE4 date not null, APPRAISE_DATE5 date not null, APPRAISE_DATE6 date not null, APPRAISE_DATE7 date not null, APPRAISE_DATE8 date not null, APPRAISE_DATE9 date not null, APPRAISE_DATE10 date not null, APPRAISE_DATE11 date not null, APPRAISE_DATE12 date not null, APPRAISE_DATE13 date not null, APPRAISE_DATE14 date not null, APPRAISE_DATE15 date not null, CREATED_TS timestamp not null, UPDATED_TS timestamp not null, CREATED_DATE date not null, UPDATED_DATE date not null, CREATED_ZONENO decimal(5, 0) not null, CREATED_BRNO decimal(5, 0) not null, UPDATED_ZONENO decimal(5, 0) not null, UPDATED_BRNO decimal(5, 0) not null, CREATED_BY char(16) not null, UPDATED_BY char(16) not null, CREATED_PGM char(8) not null, UPDATED_PGM char(8) not null, CREATED_SYSTEM_ID integer not null, UPDATED_SYSTEM_ID integer not null, TEST1 char(30) not null, primary key( CUSTNO )) in 
DBGW01.TBSPGW01 data capture changes

Test scenarios

Scenario one: one subscription, one table

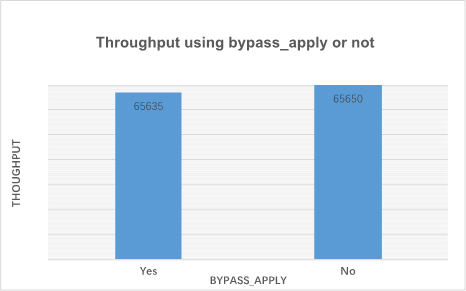

BYPASS_APPLY=false (no) vs. BYPASS_APPLY=true (yes)

Note: BYPASS_APPLY controls whether data is written to Kafka. When it is set to true, no data is applied to Kafka.

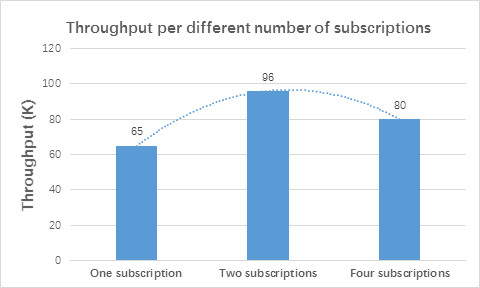

Scenario two: two subscriptions, one table per subscription

Average throughput per subscription: 47,841 and 48,212 rows per second (RPS).

Scenario three: four subscriptions, one table per subscription

Average throughput per subscription: 20,559 rows per second (RPS).

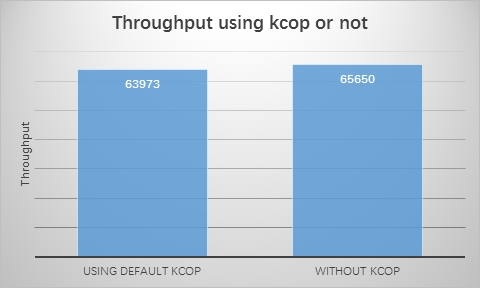

Scenario four: one subscription, one table using KcopDefaultBehaviorSample

-DROWS=10000000 -DSUBSCRIPTIONS=1 -DTABLES_PER_SUB=1 -DCOMMIT=100 -DPHASES=I -DMANIPULATE_AND_MIRROR=SEQUENTIAL -DCONCURRENCY=65 -DFAST_APPLY_THRESHOLD=10000 -DFAST_APPLY_CONNECTIONS=10 -DTABLEDEF=ZKAFKA -DKcopClass=com.datamirror.ts.target.publication.userexit.sample.kafka.KcopDefaultBehaviorSample -DKcopParameters=<server ip>

Kafka engine system parameters:

global_max_batch_size=100000

target_queue_max_unit_of_work=1024

target_queue_max_unit_of_work_refresh=1024

target_num_image_builder_threads=16

Average throughput per subscription is 63,973 rows per second (RPS).

Note: Throughput was recalculated after removing the performance statistics from the beginning and the end of the test, where the number of concurrent transactions is lower than in the rest of the run.
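One possible way to do this kind of recalculation is to average only the samples taken while the workload was running at full concurrency. The sketch below is an assumption about the approach, not the procedure used for this report: the `steady_state_rps` function, the `(rows_per_second, active_transactions)` sample layout, and the sample values are all hypothetical and are not measurements from the test.

```python
from statistics import mean


def steady_state_rps(samples, full_concurrency):
    """Average throughput over samples taken at full concurrency.

    samples: iterable of (rows_per_second, active_transactions) tuples.
    Samples with fewer active transactions (ramp-up and ramp-down)
    are excluded from the average.
    """
    steady = [rps for rps, active in samples if active >= full_concurrency]
    if not steady:
        raise ValueError("no samples were taken at full concurrency")
    return mean(steady)


# Made-up illustrative samples: ramp-up, steady state, ramp-down.
samples = [
    (20_000, 10),
    (60_000, 65),
    (64_000, 65),
    (68_000, 65),
    (30_000, 20),
]

raw_average = mean(rps for rps, _ in samples)
trimmed_average = steady_state_rps(samples, full_concurrency=65)
print(raw_average, trimmed_average)
```

With these made-up values, the untrimmed average is pulled down by the first and last samples, while the trimmed average reflects only the period with 65 concurrent transactions.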

Summary

- Table row size greatly impacts CDC for Kafka performance. For a 35-column table, throughput is 125K rows/s. For a table with 100+ columns, throughput is 65K rows/s with one subscription. With two subscriptions, the replication engine can achieve 96K rows/s (48K rows/s per subscription). With four subscriptions, it can achieve 80K rows/s (20K rows/s per subscription).

- With KcopDefaultBehaviorSample, we did not observe any obvious performance degradation.

- The target machine’s CPU, network, and I/O, together with the system parameter target_num_image_builder_threads, greatly impacted target throughput.

- For the scenario with two subscriptions, the bottleneck analysis leads us to suspect that faster hardware on the target machine could improve performance even further.
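The aggregate figures in the summary follow from the per-subscription averages reported for scenarios two and three. The short sketch below shows the arithmetic. It assumes the two values reported for scenario two are the averages of its two subscriptions, and that all four subscriptions in scenario three ran at the single reported average. The `aggregate_rps` helper is hypothetical.

```python
def aggregate_rps(per_subscription_rps):
    """Total throughput when all subscriptions run at the same time."""
    return sum(per_subscription_rps)


# Per-subscription averages taken from the scenario results above.
scenario_two = [47_841, 48_212]   # assumed: one value per subscription
scenario_three = [20_559] * 4     # assumed: same average for all four

for name, rates in (("two subscriptions", scenario_two),
                    ("four subscriptions", scenario_three)):
    total = aggregate_rps(rates)
    print(f"{name}: {total:,} RPS total, "
          f"{total / len(rates):,.0f} RPS per subscription")
```

Under these assumptions the totals come to about 96K rows/s for two subscriptions and about 82K rows/s for four. The 80K figure in the summary corresponds to rounding the per-subscription rate down to 20K.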

Source Hardware

10 cp

Target Hardware

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 80

On-line CPU(s) list: 0-79

Thread(s) per core: 2

Core(s) per socket: 10

Socket(s): 4

NUMA node(s): 4

Vendor ID: GenuineIntel

CPU family: 6

Model: 47

Model name: Intel(R) Xeon(R) CPU E7- 4850 @ 2.00GHz

Stepping: 2

CPU MHz: 1996.000

BogoMIPS: 3989.93

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 24576K

NUMA node0 CPU(s): 0-9,40-49

NUMA node1 CPU(s): 10-19,50-59

NUMA node2 CPU(s): 20-29,60-69

NUMA node3 CPU(s): 30-39,70-79

Memory:

KiB Mem : 49513830+total, 44100451+free, 49954388 used, 4179396 buff/cache

KiB Swap: 4194300 total, 4194300 free, 0 used. 44349008+avail Mem

Confluent version: confluent-4.0.0

CDC version:

$ ./dmshowversion

VERSION INFORMATION

Product: IBM Data Replication (Kafka)

Version: 11.4.0

Build: Release_5063

SYSTEM INFORMATION

Number of CPUs: 80

OS: Red Hat Enterprise Linux Server 7.2 (Maipo)

Ethernet controller: QLogic Corp. 10GbE Converged Network Adapter (TCP/IP Networking) (rev 02)

Target CDC instance memory: 30G
