White Papers

Abstract

The accelerator needs transient (temporary) storage to write transient data during various tasks. "Transient" means that the data is deleted immediately after the task has finished. If a large amount of transient data must be written, the writing can degrade the performance of the executing task or cause resource shortages in the environment. With Accelerator on Z maintenance level 7.5.12 or later, you can configure dedicated storage for transient data.

This document describes these options in detail, including the advantages of each option, sizing considerations, setup details, and operational aspects.

Content

1 Overview of dedicated storage options for transient data

1.1 Define a transient storage pool on external storage (Option A)

1.2 Use local NVMe (non-volatile memory express) storage (Option B)

2 Advantages and recommendations for using dedicated storage for transient data

3 Configuring and using a transient storage pool on external storage (Option A)

3.1 Estimating the size of transient storage pool

3.2 Configuring a transient storage pool on external storage

3.3 Operational considerations when using a transient storage pool

4 Configuring and using local NVMe storage (Option B)

4.1 Estimating the size of local NVMe storage

4.2 Configuring local NVMe storage

4.3 Operational considerations when using local NVMe storage

1 Overview of dedicated storage options for transient data

The accelerator writes transient data during tasks such as the following:

- Writing temporary results of extensive sort operations during query processing that cannot be executed exclusively in the system memory.

- Writing replication spill queues when a replication-enabled table is loaded to the accelerator and, at the same time, new data changes on this table are replicated to the accelerator.

- Writing query results that tend to arrive faster than they can be picked up by the receiving client.

With Accelerator on Z maintenance level 7.5.12 or later, it is possible to define dedicated storage for transient data using one of the following options:

- Define a dedicated transient storage pool on external storage (Option A)

- Use local NVMe (non-volatile memory express) storage, LinuxONE only (Option B)

Note that you can use only one of the two options, not a combination of both. If you define both options, Option B takes precedence over Option A.

1.1 Define a transient storage pool on external storage (Option A)

A separate storage pool for transient data can be defined on FCP- or FICON-attached storage.

Note: Different storage pools can be deployed on different storage types. For example, the data pool could be deployed on FICON-attached storage and the transient pool on FCP-attached storage.

1.2 Use local NVMe (non-volatile memory express) storage (Option B)

This option:

- Is only available for accelerator deployments on LinuxONE machines.

- Is highly recommended for multi-node accelerator deployments. It should be configured directly during the initial installation of each new multi-node accelerator.

- Was introduced as a technical preview with maintenance level 7.5.8 and is fully supported with maintenance level 7.5.12 or later.

2 Advantages and recommendations for using dedicated storage for transient data

- Separation of user data (loaded or replicated) from transient data

  This allows a more precise calculation of the storage needs of each pool, especially of the data pool.

- Improved query performance for queries that write transient data

  This is achieved by using faster storage for transient data than for user data.

- Improved load and replication performance

  Loads, replication, and queries that run at the same time perform better if their storage needs are separated from each other. Queries and replication use transient storage as needed for their temporary files, while loads use the data pool storage.

- Improved stability

  Transient data files can become very large, up to multiple terabytes (TB). Correspondingly high temporary storage use can lead to an out-of-space condition in the data pool. When NVMe storage is used for transient data, this exceptional situation can be prevented.

- Reduced cost

  In accelerator environments that have storage mirroring implemented (for example, in active/passive accelerator environments), the storage for transient data can and should be excluded from any storage mirroring procedures. This reduces the amount of storage that needs to be mirrored and therefore reduces cost.

Local NVMe storage (Option B) provides the following additional advantages:

- Protection of the storage system of the data pool (located on FCP- or ECKD-storage) from heavy I/O activity

  - I/O for a transient data file of multiple TB can take several minutes.

  - The storage bandwidth used during this time might affect other systems that use the same storage system.

- Further improved query performance (compared to transient storage on external storage)

  First tests have shown an improvement of a high double-digit percentage compared to using FCP-attached flash storage.

Use a transient storage pool on external storage (Option A) if one of the following applies:

- Your accelerator is or will be installed on IBM zSystems (and not on LinuxONE), either as a single-node or a multi-node deployment.

- Your accelerator is a single-node deployment and you want to improve performance and stability further by isolating workloads and their storage usage.

- Your accelerator is a multi-node deployment on LinuxONE, but your workload does not require the additional advantages (listed above) that local NVMe storage provides.

Use local NVMe storage (Option B) if one of the following applies:

- Your accelerator is a multi-node deployment on LinuxONE and your workload requires the additional advantages (listed above) that local NVMe storage provides.

  Note: For new multi-node deployments on LinuxONE, it is highly recommended to use local NVMe storage from the initial installation onward.

- Your accelerator is a single-node deployment on LinuxONE and you have complex queries with high temporary storage demands whose performance you want to improve significantly.

3 Configuring and using a transient storage pool on external storage (Option A)

- Estimating the size of a transient storage pool

- Configuring a transient storage pool on external storage

- Operational considerations when using a transient storage pool

3.1 Estimating the size of transient storage pool

3.1.1 Estimating the size for a new accelerator installation

3.1.2 Estimating the size for an existing accelerator installation

- "temp_working_space" = <Fixed Size>

  Use the amount specified in <Fixed Size>, for example 500 GB, as the initial size of the transient storage pool.

- "temp_working_space" = "automatic"

  This is the default setting with 7.5.11 or later if temp_working_space is not explicitly set in the JSON configuration file. With this setting, the initial size of the temporary tablespace in the data pool was the smaller of two values: 80% of the LPAR memory or 50% of the free space of the data pool. However, the accelerator might have reduced the size over time if the free space in the data pool was low. To derive the initial size of the transient storage pool from this setting, choose one of the following options:

  - Use 80% of the configured LPAR memory as the initial size per accelerator LPAR. The amount of configured LPAR memory can be determined from the LPAR configuration, for example in the HMC.

  - Open a support case with IBM Support to determine the current size of the configured temporary tablespace and use this as the minimum initial size of the transient storage pool.

- "temp_working_space" = "unlimited"

  This is the default setting for Accelerator maintenance levels lower than 7.5.11 if temp_working_space is not explicitly set in the JSON configuration file. If your accelerator installation uses the "unlimited" setting (either as the default or explicitly set), choose one of the following options:

  - Use 80% of the configured LPAR memory as the initial size per accelerator LPAR.

  - Open a support case with IBM Support to monitor the temporary storage requirements of your workload and determine the initial size of the transient storage pool based on the results.
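The sizing rules above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not an IBM tool: the function names are hypothetical, sizes are assumed to be given in GB, and a fixed temp_working_space value is modeled as a plain number rather than in the format the JSON configuration file actually uses.

```python
from typing import Union


def temp_tablespace_initial_gb(lpar_memory_gb: float, data_pool_free_gb: float) -> float:
    """Initial temporary tablespace size under "automatic": the smaller of
    80% of the LPAR memory and 50% of the free space of the data pool."""
    return min(lpar_memory_gb * 80 / 100, data_pool_free_gb * 50 / 100)


def transient_pool_initial_gb(temp_working_space: Union[str, float],
                              lpar_memory_gb: float) -> float:
    """Suggested starting size of the transient storage pool per accelerator LPAR.

    temp_working_space is "automatic", "unlimited", or a fixed size in GB
    (assumption: the fixed size has already been converted to a number).
    """
    if isinstance(temp_working_space, (int, float)):
        # Fixed size: reuse the configured amount.
        return float(temp_working_space)
    if temp_working_space in ("automatic", "unlimited"):
        # Without measurements from IBM Support, start with 80% of LPAR memory.
        return lpar_memory_gb * 80 / 100
    raise ValueError(f"unknown temp_working_space setting: {temp_working_space!r}")
```

For example, `transient_pool_initial_gb("automatic", 1000)` suggests a starting size of 800 GB for an LPAR with 1000 GB of memory. If IBM Support has determined the actual temporary tablespace size or the workload's requirements, that value should be preferred over this estimate.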

3.2 Configuring a transient storage pool on external storage

- FCP- or FICON-attached storage is required.

- The use of all-flash storage is strongly recommended.

- For FICON-attached storage devices the use of HyperPAV and zHPF is mandatory.

- For FCP-attached storage devices a connection to a Fibre Channel SAN using a switched fabric is required.

    "transient_devices": {
        "type": "dasd",
        "devices": [
            "0.0.9b12"
        ]
    }
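Before uploading a changed configuration, it can help to sanity-check the fragment locally. The following Python sketch is an illustration only: the function name is hypothetical, and the device ID pattern is an assumption modeled on the "0.0.9b12" example above, not an official schema. It does not check which "type" values are valid.

```python
import re
from typing import Any, Dict, List

# Assumption: device IDs look like the "0.0.9b12" example
# (two short hex fields followed by a four-digit hex device number).
DEVICE_ID = re.compile(r"^[0-9a-f]{1,2}\.[0-9a-f]\.[0-9a-f]{4}$", re.IGNORECASE)


def check_transient_devices(fragment: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a "transient_devices" object."""
    problems: List[str] = []
    if not fragment.get("type"):
        problems.append('missing "type"')
    devices = fragment.get("devices")
    if not isinstance(devices, list) or not devices:
        problems.append('"devices" must be a non-empty list')
        return problems
    for dev in devices:
        if not isinstance(dev, str) or not DEVICE_ID.match(dev):
            problems.append(f"unexpected device ID: {dev!r}")
    return problems
```

An empty result means only that the fragment has the same shape as the example; the accelerator performs its own validation when the file is uploaded.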

See the installation documentation for your type of deployment:

- Single-node setup: https://www.ibm.com/docs/en/daafz/7.5?topic=z-installing-starting-appliance-single-node-setup

- Multi-node setup: https://www.ibm.com/docs/en/daafz/7.5?topic=z-installing-starting-appliance-multi-node-setup

If both "transient_devices" and "transient_storage" are defined in the JSON configuration file, the setting of "transient_storage" takes precedence and "transient_devices" is ignored.

After you have completed the changes in the JSON configuration file, upload it to the accelerator as described in this chapter: https://www.ibm.com/docs/en/daafz/7.5?topic=z-updating-existing-configuration
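The precedence rule between the two keywords can be expressed as a small decision function. This is a sketch for reasoning about a configuration, not accelerator code: the function name and return strings are hypothetical, and because this document does not show where each keyword sits in the full JSON file, the two settings are passed in as plain arguments.

```python
from typing import Optional


def effective_transient_option(has_transient_devices: bool,
                               transient_storage: Optional[str]) -> str:
    """Report which dedicated-storage option takes effect.

    has_transient_devices: True if "transient_devices" is defined.
    transient_storage: value of "transient_storage" (for example "NVMe"),
    or None if the keyword is not defined.
    """
    if transient_storage is not None:
        # "transient_storage" wins; "transient_devices" is ignored.
        return "Option B (local NVMe storage)"
    if has_transient_devices:
        return "Option A (transient storage pool on external storage)"
    return "no dedicated transient storage"
```

With both keywords present, for example `effective_transient_option(True, "NVMe")`, the result is Option B, which matches the rule that the two options cannot be combined.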

3.3 Operational considerations when using a transient storage pool

In addition, this chapter provides considerations for setting or unsetting the WLM threshold MAXTEMPSPACECONSUME in combination with a transient storage pool.

3.3.1 Monitoring transient storage pool usage

- The SMF counter Q8STTSA provides the current amount of disk space used by all paired Db2 subsystems for transient data. Note that this value is the current usage, not a high-water mark. The counter must therefore be monitored regularly to get a good understanding of the transient storage usage over time.

- With Accelerator maintenance level 7.5.12, the accelerator-internal monitoring has been improved to track the high-water mark of temp space usage in the accelerator trace file. IBM Support can therefore help to determine the current high-water mark from the trace file.
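Because Q8STTSA reports only the current usage, a high-water mark has to be derived from samples collected over time. The sketch below shows that derivation in Python. It assumes the counter values have already been extracted from SMF into (timestamp, value) pairs; the extraction step, the `Sample` type, and the function name are hypothetical, and the value is in whatever unit the counter reports.

```python
from typing import Iterable, Optional, Tuple

# (timestamp, Q8STTSA value) as collected by your own SMF reporting (hypothetical shape).
Sample = Tuple[str, int]


def high_water_mark(samples: Iterable[Sample]) -> Optional[Sample]:
    """Return the sample with the highest transient storage usage, or None."""
    peak: Optional[Sample] = None
    for timestamp, used in samples:
        if peak is None or used > peak[1]:
            peak = (timestamp, used)
    return peak
```

The result is only as good as the sampling interval: a short usage spike between two samples is not visible, which is why the high-water mark recorded in the accelerator trace file is the more reliable source.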

- Increase the transient storage pool size

  Only additional storage can be used to increase the transient storage pool. You cannot move storage from the data pool to the transient storage pool.

- Set the Db2 Warehouse WLM threshold MAXTEMPSPACECONSUME

  This threshold is used to automatically cancel queries that require more transient storage than the configured threshold value. Open a support case with IBM Support to set this threshold on the accelerator.

3.3.2 Considerations for setting or unsetting WLM threshold MAXTEMPSPACECONSUME

4 Configuring and using local NVMe storage (Option B)

- Estimating the size of local NVMe storage

- Configuring local NVMe storage

- Operational considerations when using local NVMe storage

4.1 Estimating the size of local NVMe storage

As a general sizing guideline, use two NVMe cards (Samsung PM1733A) of 15 TB each per accelerator LPAR.

If local NVMe storage is to be used for a single-node accelerator installation and the LPAR memory is less than 4 TB, consider using just one 15 TB NVMe card and one carrier.

If, for any reason, different NVMe card sizes (for example, smaller ones) are under consideration, open a support case with IBM Support to monitor the transient storage requirements of your workload and determine the required size based on the results. Note, however, that NVMe cards of a different size or from a different vendor cannot be acquired from IBM. Only the 15 TB Samsung card mentioned above can be acquired from IBM.
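The sizing guideline can be written down as a short Python sketch. It only encodes the rule of thumb stated above; the function names are hypothetical, and the single-card case is a suggestion to consider rather than a fixed rule.

```python
CARD_SIZE_TB = 15  # nominal size of the Samsung PM1733A card named in the guideline


def nvme_cards_per_lpar(single_node: bool, lpar_memory_tb: float) -> int:
    """Number of NVMe cards per accelerator LPAR according to the guideline."""
    if single_node and lpar_memory_tb < 4:
        # Single-node installation with less than 4 TB of LPAR memory:
        # one card (and one carrier) may be sufficient.
        return 1
    return 2


def nvme_capacity_per_lpar_tb(single_node: bool, lpar_memory_tb: float) -> int:
    """Nominal local NVMe capacity per LPAR that results from the card count."""
    return nvme_cards_per_lpar(single_node, lpar_memory_tb) * CARD_SIZE_TB
```

For a single-node accelerator with 2 TB of LPAR memory, this yields one card and 15 TB of nominal capacity. For each LPAR of a multi-node deployment, it yields two cards and 30 TB.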

4.2 Configuring local NVMe storage

- One of the following IBM LinuxONE systems:

  - IBM LinuxONE™ Emperor 4 (LA1 - 3931)

  - IBM LinuxONE™ Rockhopper 4 (LA2 and AGL - 3932)

  - IBM LinuxONE™ III LT1 (8561)

  - IBM LinuxONE™ III LT2 (8562)

- IBM Adapter for NVMe 1.1 with FC0448

  - IBM part number: P/N 02WN273

  - The number of adapters must be equal to the number of carriers calculated in the sizing process.

- NVMe card(s) "Samsung PM1733A MZWLR15THBLA-00A07 (15.36TB)"

  - IBM part number: P/N 01CM547

  - Contact your IBM zHW representative to acquire the cards from IBM.

  - The number of cards must be equal to the number of carriers calculated during the sizing process.

  - IBM provides support for this NVMe card and adapter FC0448 when used for an accelerator deployment. If a different NVMe card is used for your accelerator deployment, IBM only provides support for the adapter, but not for the NVMe card.

  - For NVMe card specification see this Link

- Minimum MCL level S84a for z15-based LinuxONE

- Minimum MCL level S27 for z16-based LinuxONE

- z15-based LinuxONE: https://www.ibm.com/docs/en/module_1687296212988/pdf/GC28-7002-00m.pdf

- z16-based LinuxONE: https://www.ibm.com/docs/en/module_1676385617650/pdf/GC28-7035-00.pdf

For example:

    "runtime_environments": [
        {
            "cpc_name": "Z16_4",
            "lpar_name": "LPAR1",
            "transient_storage": "NVMe",
            "network_interfaces": [
    ...

See the installation documentation for your type of deployment:

- Single-node setup: https://www.ibm.com/docs/en/daafz/7.5?topic=z-installing-starting-appliance-single-node-setup

- Multi-node setup: https://www.ibm.com/docs/en/daafz/7.5?topic=z-installing-starting-appliance-multi-node-setup

If both "transient_devices" and "transient_storage" are defined in the JSON configuration file, the setting of "transient_storage" takes precedence and "transient_devices" is ignored.

After you have completed the changes in the JSON configuration file, upload it to the accelerator as described in this chapter: https://www.ibm.com/docs/en/daafz/7.5?topic=z-updating-existing-configuration

4.3 Operational considerations when using local NVMe storage

In addition, this chapter provides considerations for setting or unsetting the WLM threshold MAXTEMPSPACECONSUME in combination with using local NVMe storage.

4.3.1 Monitoring the lifetime of a NVMe card

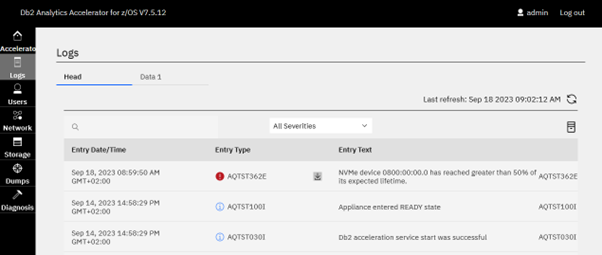

The following screenshot shows an example of this message:

4.3.2 NVMe card failures

- The accelerator hangs in the system state "starting". After several hours, when a timeout is reached, the system state changes to "failed".

- In the Admin UI storage panel, no storage information is displayed for the LPAR with the failed NVMe card, or the storage panel is not shown at all. For other LPARs (in case of a multi-node deployment), the storage and NVMe card information is displayed correctly.

- In the Admin UI logs panel, no log messages are shown for the LPAR with the failed NVMe card after the messages related to the network connection. For other LPARs (in case of a multi-node deployment), further log messages are written.

- Running queries that write transient data hang but do not fail, because internally they wait indefinitely for the transient storage to become available.

- In the Admin UI, no storage information is displayed for the LPAR with the failed NVMe card. The display hangs. For other LPARs (in case of a multi-node deployment), the storage and NVMe card information is displayed correctly.

As a temporary workaround, proceed as follows:

- Change the JSON configuration file: remove the "transient_storage": "NVMe" keyword for the LPAR with the failed card.

- Upload the JSON configuration file to the accelerator.

- Reactivate the accelerator SSC LPARs.

As a final resolution, replace the failing NVMe card and then reactivate the accelerator SSC LPARs. Remember to revert any temporary changes in the JSON configuration file and upload the file again so that local NVMe storage is used for all LPARs.
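The configuration change for the workaround can be prepared with a short script so that the original file stays available for the later revert. The following Python sketch assumes that "runtime_environments" is a top-level list in the JSON configuration file and that each entry carries "lpar_name", as in the example in section 4.2. The function name is hypothetical, and uploading the file is still done as described in the product documentation.

```python
import copy
from typing import Any, Dict


def without_nvme_for_lpar(config: Dict[str, Any], lpar_name: str) -> Dict[str, Any]:
    """Return a copy of the configuration in which "transient_storage"
    is removed for the given LPAR. The input is left unchanged so that
    it can be uploaded again after the NVMe card has been replaced."""
    patched = copy.deepcopy(config)
    for env in patched.get("runtime_environments", []):
        if env.get("lpar_name") == lpar_name:
            # Dropping the keyword stops this LPAR from using local NVMe storage.
            env.pop("transient_storage", None)
    return patched
```

A typical use is to load the current file with `json.load`, call `without_nvme_for_lpar(config, "LPAR1")`, and write the result to a separate file with `json.dump`. Keeping the unmodified file makes the revert step a simple re-upload.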

4.3.3 Considerations for setting or unsetting WLM threshold MAXTEMPSPACECONSUME


Document Information

Modified date: 28 November 2024

UID: ibm17138629