Troubleshooting

Problem

Restoring a backup on a management subsystem that uses S3 might fail, leaving the Management Cluster CR in Warning status.

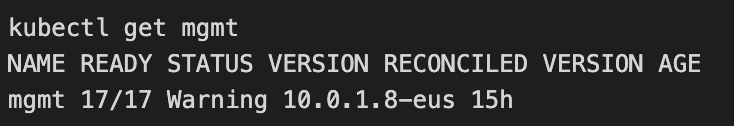

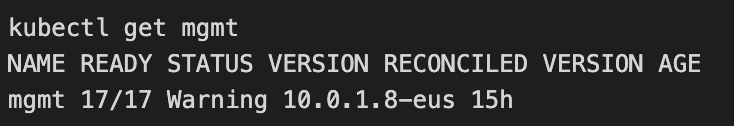

Observed behavior

The management CR shows that WAL is not working, and the restore fails.

Resolving The Problem

This issue affects fix packs 10.0.1.8 and 10.0.5.1 only and is resolved by APAR LI82783. Users on the affected fix packs can do the following to work around this issue:

Workaround for Kubernetes, Red Hat OpenShift, and CP4I:

• If the management subsystem is already in a state where the management CR shows Warning and the upgrade CR does not complete, delete the management CR (or the API Connect Cluster CR on Red Hat OpenShift or CP4I) and its resources.

• Prepare for DR again and create the necessary secrets.

• As part of DR preparation, connect the DR cluster to a brand-new bucket instead of the old cluster's bucket.

• Install the Management or API Connect cluster subsystem.

• The system is now treated as a brand-new system with a custom encryption secret and application credentials. Its backups go to a different bucket, and the upgrade CR is already marked as completed.

• Change the S3 configuration back to the old cluster's bucket.

• Wait for all the pods to go down and come back up.

• The management CR remains in the Warning state. This is expected, and there is no lock issue because the upgrade is already marked complete.

• Perform the restore by using one of the mgmtb backups.
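The kubectl side of the steps above can be sketched as follows. This is a minimal, print-only sketch: it echoes the commands instead of running them, because the DR preparation and installation steps must happen in between. The namespace `apic`, the CR name `management`, and the resource name `managementcluster` are assumptions, not values from this document. On Red Hat OpenShift or CP4I the top-level resource would be the API Connect Cluster CR instead. Confirm the resource names in your cluster with `kubectl api-resources` before running anything.

```shell
#!/bin/sh
# Print-only sketch: shows the kubectl commands, does not execute them.
# NAMESPACE and MGMT_CR_NAME are hypothetical placeholders; replace them.
NAMESPACE="${NAMESPACE:-apic}"
MGMT_CR_NAME="${MGMT_CR_NAME:-management}"

# show: print a command line so it can be reviewed and run by hand.
show() {
  echo "+ $*"
}

# Inspect the management CR; on affected systems it reports Warning.
check_mgmt_status() {
  show kubectl get managementcluster "$MGMT_CR_NAME" -n "$NAMESPACE"
}

# Delete the management CR before preparing for DR again.
delete_mgmt_cr() {
  show kubectl delete managementcluster "$MGMT_CR_NAME" -n "$NAMESPACE"
}

# Watch the pods go down and come back up after the S3 configuration
# is switched back to the old cluster's bucket.
watch_pods() {
  show kubectl get pods -n "$NAMESPACE" --watch
}

check_mgmt_status
delete_mgmt_cr
watch_pods
```

Run each printed command individually at the matching step of the workaround rather than back to back.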

Workaround for OVA

- Delete the subsystem with:

kubectl delete -f /var/lib/apiconnect/subsystem/manifests/25.<subsystem name>-cr.yaml

- Reapply the CR yaml with:

kubectl apply -f /var/lib/apiconnect/subsystem/manifests/25.<subsystem name>-cr.yaml

- Alternatively, you could also reinstall VMware.

- Use the v10 Disaster Recovery Python script from "Preparing the management subsystem for disaster recovery on VMware (10.0.1.2-eus)" to prepare for disaster recovery.

- Use the new apicup binary to create and set the secrets that were copied from the old cluster to the project directory.

- Change the backup configuration to use the new bucket:

apicup subsys set <subsys name> database-backup-path=<new bucket path>

- Follow the steps in https://www.ibm.com/docs/en/api-connect/10.0.1.x?topic=connect-deploying-management-subsystem to complete the fresh installation with the new bucket path.

- Change the S3 configuration back to the old bucket with:

apicup subsys set <subsys name> database-backup-path=<old bucket path>

- Wait for all pods to go down and come back up.

- The management subsystem is in the Warning state, so the health check fails. This is expected.

- Perform the restore by using the apicup restore command. For more information, see "Deploying the Management subsystem" in the IBM Documentation.

apicup subsys restore [subsystem_name] --name <backup_name> [flags]
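The order of the apicup steps above can be sketched as follows. This is a print-only sketch that echoes the commands rather than running them, since each step has to finish (and the pods have to restart) before the next one. The subsystem name `mgmt`, the bucket paths, and the backup name are hypothetical placeholders. `apicup subsys install` is assumed here as the installation command; follow the linked deployment documentation for the actual installation steps.

```shell
#!/bin/sh
# Print-only sketch: shows the apicup commands, does not execute them.
# All values below are hypothetical placeholders; replace them.
SUBSYS="${SUBSYS:-mgmt}"
NEW_BUCKET_PATH="${NEW_BUCKET_PATH:-new-bucket/path}"
OLD_BUCKET_PATH="${OLD_BUCKET_PATH:-old-bucket/path}"
BACKUP_NAME="${BACKUP_NAME:-example-backup}"

# show: print a command line so it can be reviewed and run by hand.
show() {
  echo "+ $*"
}

# Point the subsystem backups at the bucket path given as $1.
use_bucket() {
  show apicup subsys set "$SUBSYS" "database-backup-path=$1"
}

# Fresh installation against the currently configured bucket (assumed command).
install_subsys() {
  show apicup subsys install "$SUBSYS"
}

# Restore from a named backup once the old bucket is configured again.
restore_backup() {
  show apicup subsys restore "$SUBSYS" --name "$BACKUP_NAME"
}

use_bucket "$NEW_BUCKET_PATH"   # 1. install against a brand-new bucket
install_subsys                  # 2. complete the fresh installation
use_bucket "$OLD_BUCKET_PATH"   # 3. switch back, then wait for the pods to restart
restore_backup                  # 4. restore from the old cluster's backup
```

The point of the ordering is that the fresh installation never touches the old bucket, so the old backups are only read after the switch in step 3.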

Document Location

Worldwide

Document Information

Modified date:

03 November 2022

UID

ibm16833910