The High Performance Computing guys at IBM thought it would be good to add NVIDIA K80 GPU stats to my open source nmon for Linux tool, and who am I to complain about that? My new best friend :-) Manish Modani gave me remote access to an internal machine in Austin, Texas, from London over the IBM network, and once the IBM firewall allowed me onto the Austin lab network, I was logged in. Then the fun started, as I knew nothing about these GPU devices. First, we came across an NVIDIA command that outputs many stats on the GPUs. It was a good start, but this command has its quirks! The command is:

$ nvidia-smi --query

This outputs a load of information about the four K80 GPUs. What, four? The S822LC can have a maximum of two NVIDIA graphics cards inside the machine, but apparently each K80 card has two GPUs, making a total of four. The command outputs the following data for each GPU (only one is included here for brevity). I removed some stats whose meaning I frankly have no idea about, plus loads of ECC error counts that were all zero:

    GPU 0002:04:00.0
        Product Name : Tesla K80
        Product Brand : Tesla
        Accounting Mode Buffer Size : 1920
        Serial Number : XXXXXXXXXX
        GPU UUID : YYYYYYYYYYY
        Minor Number : 3
        VBIOS Version : 80.21.1B.00.02
        PCI
            GPU Link Info
                PCIe Generation
                    Max : 3
                    Current : 3
                Link Width
                    Max : 16x
                    Current : 16x
        Performance State : P0
        FB Memory Usage
            Total : 11519 MiB
            Used : 11273 MiB
            Free : 246 MiB
        BAR1 Memory Usage
            Total : 16384 MiB
            Used : 8 MiB
            Free : 16376 MiB
        Compute Mode : Default
        Utilization
            Gpu : 100 %
            Memory : 37 %
            Encoder : 0 %
            Decoder : 0 %
        Temperature
            GPU Current Temp : 53 C
            GPU Shutdown Temp : 93 C
            GPU Slowdown Temp : 88 C
        Power Readings
            Power Management : Supported
            Power Draw : 144.53 W
            Power Limit : 149.00 W
            Default Power Limit : 149.00 W
            Enforced Power Limit : 149.00 W
            Min Power Limit : 100.00 W
            Max Power Limit : 175.00 W
        Clocks
            Graphics : 562 MHz
            SM : 562 MHz
            Memory : 2505 MHz
        Applications Clocks
            Graphics : 562 MHz
            Memory : 2505 MHz
        Default Applications Clocks
            Graphics : 562 MHz
            Memory : 2505 MHz
        Max Clocks
            Graphics : 875 MHz
            SM : 875 MHz
            Memory : 2505 MHz
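One quirk is visible even in this trimmed output: the same labels repeat under different sections (Graphics and Memory appear under four clock sections; Max and Current appear under both PCIe Generation and Link Width), so simply grepping for a label is ambiguous. Here is a minimal sketch of picking one value per GPU out of the --query text. It assumes the layout above, where each GPU block starts with an unindented "GPU <bus id>" line and entries read "Label : value". The function name smi_field is hypothetical. It takes only the first matching label in each GPU block, which for Graphics happens to be the current clock because the Clocks section comes first.

```shell
# smi_field KEY: read `nvidia-smi --query` text on stdin and print the value
# of the first line labelled KEY inside each "GPU <bus id>" block.
# Hypothetical helper; assumes the "Label : value" layout shown above.
smi_field() {
    awk -v key="$1" '
        # An unindented "GPU 0002:04:00.0" header starts a new GPU block
        /^GPU [0-9A-Fa-f]+:/ { found = 0; next }
        !found {
            n = index($0, " : ")
            if (n == 0) next                # section titles have no value
            label = substr($0, 1, n - 1)
            sub(/^[ \t]+/, "", label)       # strip nesting indentation
            sub(/[ \t]+$/, "", label)       # strip alignment padding
            if (label == key) { print substr($0, n + 3); found = 1 }
        }'
}
```

For example, `nvidia-smi --query | smi_field 'Power Draw'` would print one power reading per GPU. Relying on section order like this is fragile, which is one reason the gpu_snap script uses the CSV `--query-gpu` form instead.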

From this, we selected the following stats of prime interest:

- GPU MHz - as this changes when the GPU gets busy

- GPU Utilisation - percentage

- GPU Memory Utilisation - percentage

- Temperature in degrees Centigrade, as the graphics cards can get hot

- Electrical power used in Watts - related to the above

- Plus the driver version and the NVML version (NVML is the NVIDIA Management Library)

Phase 1 - Collect the stats from the command using the nmon External Data Collector feature

I first implemented the GPU data capture using the nmon External Data Collector function. With this function, you can get nmon to run a script or command at the start (to output the data description header lines) and at each snapshot. Your scripts are responsible for getting the data and formatting it in the nmon "Comma Separated Value" format into a separate file. At the end of the data capture, you then append that data file to the end of the regular nmon file for analysis. The advantage is that anyone can do this for whatever data they like. It also allowed me to experiment with the data and its format. I had the data collected for post-run analysis in about an hour, once I got my shell scripting hat on.
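Because the mechanism is just "a start script writes header lines, a snap script appends data lines tagged with the snapshot number", it works for any data. As a hypothetical illustration not taken from the original, here is a collector pair for the Linux load averages in /proc/loadavg. The LOADAVG section name, the load.nmon file name and the function names are all assumptions; with NMONSTART and NMONSNAP, each function would become its own executable script, as with gpu_start and gpu_snap.

```shell
# Hypothetical collector pair for the 1, 5 and 15 minute load averages,
# written as two functions for compactness.
OUT=${OUT:-load.nmon}                         # external data file (assumed name)
LOADAVG_FILE=${LOADAVG_FILE:-/proc/loadavg}   # Linux load average source

load_start() {
    # One header line: section name, description, then the column names
    echo "LOADAVG,Load Average,1min,5min,15min" > "$OUT"
}

load_snap() {
    # $1 is the snapshot tag (e.g. T0001) that nmon passes to the snap script
    awk -v tag="$1" '{ printf "LOADAVG,%s,%s,%s,%s\n", tag, $1, $2, $3 }' \
        "$LOADAVG_FILE" >> "$OUT"
}
```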

For the record, the gpu_start script that adds the header lines looks like this:

    #!/bin/sh
    # gpu_start: write the nmon header lines for the GPU data
    OUT=gpu.nmon
    echo GPU_UTIL,NVidia GPU Utilisation Percent,GPU1,GPU2,GPU3,GPU4 >$OUT
    echo GPU_MEM,NVidia Memory Utilisation Percent,GPU1,GPU2,GPU3,GPU4 >>$OUT
    echo GPU_TEMP,NVidia Temperature C,GPU1,GPU2,GPU3,GPU4 >>$OUT
    echo GPU_WATTS,NVidia Power Draw Watts,GPU1,GPU2,GPU3,GPU4 >>$OUT
    echo GPU_MHZ,NVidia GPU MHz,GPU1,GPU2,GPU3,GPU4 >>$OUT
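gpu_start hard-codes four GPU columns, which matches a fully populated S822LC with two K80 cards. Here is a sketch of a generalised version, assuming you pass in the GPU count. gpu_header is a hypothetical name; counting the lines of `nvidia-smi -L` (one line per GPU) is one way to get the number.

```shell
# gpu_header N: print the five GPU header lines for N GPUs.
# Hypothetical generalisation of gpu_start, which hard-codes four GPUs.
gpu_header() {
    n=$(( $1 + 0 ))        # tolerate leading spaces, e.g. from wc -l
    cols=""
    i=1
    while [ "$i" -le "$n" ]; do
        cols="$cols,GPU$i"
        i=$(( i + 1 ))
    done
    printf 'GPU_UTIL,NVidia GPU Utilisation Percent%s\n' "$cols"
    printf 'GPU_MEM,NVidia Memory Utilisation Percent%s\n' "$cols"
    printf 'GPU_TEMP,NVidia Temperature C%s\n' "$cols"
    printf 'GPU_WATTS,NVidia Power Draw Watts%s\n' "$cols"
    printf 'GPU_MHZ,NVidia GPU MHz%s\n' "$cols"
}
```

Used as `gpu_header "$(nvidia-smi -L | wc -l)" > gpu.nmon`, it would produce the same five lines as gpu_start on a four-GPU machine.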

Then the gpu_snap script adds the data lines. Note that the $1 in the final sed inserts the snapshot number (in the format T0001), which nmon passes to the script, so the GPU data matches up with the rest of the nmon collected data:

    #!/bin/sh
    # gpu_snap: append one set of GPU data lines; $1 is the snapshot tag (e.g. T0001)
    OUT=gpu.nmon
    nvidia-smi --query-gpu=utilization.gpu,utilization.memory,temperature.gpu,power.draw,clocks.current.graphics --format=csv,noheader | \
    sed 's/, / /g' | sed 's/ /,/g' | \
    awk -F, '{
        # after the two seds a line reads 100,%,37,%,53,144.53,W,562,MHz
        cpu[NR]=$1
        mem[NR]=$3
        temp[NR]=$5
        watts[NR]=$6
        mhz[NR]=$8 }
    END {
        printf "GPU_UTIL,TTTT,%d,%d,%d,%d\n", cpu[1], cpu[2], cpu[3], cpu[4]
        printf "GPU_MEM,TTTT,%d,%d,%d,%d\n", mem[1], mem[2], mem[3], mem[4]
        printf "GPU_TEMP,TTTT,%d,%d,%d,%d\n", temp[1], temp[2], temp[3], temp[4]
        printf "GPU_WATTS,TTTT,%d,%d,%d,%d\n", watts[1], watts[2], watts[3], watts[4]
        printf "GPU_MHZ,TTTT,%d,%d,%d,%d\n", mhz[1], mhz[2], mhz[3], mhz[4]
    }' | \
    sed "s/TTTT/$1/" >>"$OUT"
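The two sed commands in gpu_snap exist only to split off the units ("100 %", "144.53 W", "562 MHz"), which is why the awk field numbers jump to $3, $5, $6 and $8. The `nounits` option of nvidia-smi's `--format` drops the units at source. Here is a minimal sketch of the formatting step under that assumption: it reads one CSV line per GPU and handles any number of GPUs. gpu_csv_to_nmon is a hypothetical name, and like the original %d it truncates 144.53 W to 144.

```shell
# gpu_csv_to_nmon TAG: turn one CSV line per GPU into the five nmon data lines.
# Hypothetical variant of gpu_snap. Expects input from --format=csv,noheader,nounits,
# e.g. "100, 37, 53, 144.53, 562" (util %, mem %, temp C, watts, MHz).
gpu_csv_to_nmon() {
    awk -F', *' -v tag="$1" '
        # Emit one nmon line: section name, snapshot tag, one value per GPU
        function emit(name, arr,    i, line) {
            line = name "," tag
            for (i = 1; i <= NR; i++)
                line = line "," int(arr[i])   # truncate like printf %d
            print line
        }
        { util[NR] = $1; mem[NR] = $2; temp[NR] = $3; watts[NR] = $4; mhz[NR] = $5 }
        END {
            emit("GPU_UTIL", util)
            emit("GPU_MEM", mem)
            emit("GPU_TEMP", temp)
            emit("GPU_WATTS", watts)
            emit("GPU_MHZ", mhz)
        }'
}
```

In a snap script it might be used as `nvidia-smi --query-gpu=utilization.gpu,utilization.memory,temperature.gpu,power.draw,clocks.current.graphics --format=csv,noheader,nounits | gpu_csv_to_nmon "$1" >> gpu.nmon`. A value that nvidia-smi reports as [N/A] would come out as 0 here, so it is worth eyeballing the raw CSV on a new machine first.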

Then define the two shell variables for nmon to pick up when it starts:

    export NMONSTART=gpu_start
    export NMONSNAP=gpu_snap

Next, run nmon in data capture mode (-f), wait for it to complete, and append (cat) the gpu.nmon file to the end of the regular nmon output file.
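Because nmon -f detaches from the terminal and keeps running in the background, "wait for it to complete" means waiting until all the requested snapshots have been taken. For example, `nmon -f -s 10 -c 30` takes 30 snapshots 10 seconds apart, so it runs for about five minutes. After that, the merge is a simple append, but it is cheap to also check that every snapshot tag in the GPU lines has a matching ZZZZ timestamp line (nmon writes one `ZZZZ,Tnnnn,time,date` line per snapshot), since that tag is what lines the data up. Here is a sketch, with merge_gpu_data as a hypothetical helper:

```shell
# merge_gpu_data NMON_FILE EXTRA_FILE: append the external data (e.g. gpu.nmon)
# to a finished nmon file, then check that every snapshot tag used by GPU_
# lines has a matching ZZZZ timestamp line. Hypothetical helper.
merge_gpu_data() {
    cat "$2" >> "$1" || return 1
    awk -F, '
        $1 == "ZZZZ"                      { seen[$2] = 1; next }
        $1 ~ /^GPU_/ && $2 ~ /^T[0-9]+$/ { used[$2] = 1 }
        END {
            bad = 0
            for (t in used)
                if (!(t in seen)) { print "no ZZZZ line for " t; bad = 1 }
            exit bad
        }' "$1"
}
```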

The gpu.nmon file looks like this (of course, the real file is a lot longer, but this gives you the idea):

    GPU_UTIL,NVidia GPU Utilisation Percent,GPU1,GPU2,GPU3,GPU4
    GPU_MEM,NVidia Memory Utilisation Percent,GPU1,GPU2,GPU3,GPU4
    GPU_TEMP,NVidia Temperature C,GPU1,GPU2,GPU3,GPU4
    GPU_WATTS,NVidia Power Draw Watts,GPU1,GPU2,GPU3,GPU4
    GPU_MHZ,NVidia GPU MHz,GPU1,GPU2,GPU3,GPU4
    GPU_UTIL,T0001,0,0,0,0
    GPU_MEM,T0001,0,0,0,0
    GPU_TEMP,T0001,47,42,47,41
    GPU_WATTS,T0001,58,69,62,73
    GPU_MHZ,T0001,324,562,562,562
    GPU_UTIL,T0002,0,0,0,0
    GPU_MEM,T0002,0,0,0,0
    GPU_TEMP,T0002,47,42,47,41
    GPU_WATTS,T0002,28,27,62,73
    GPU_MHZ,T0002,324,324,562,562
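Because every data line is just a section name, a snapshot tag and one value per GPU, the file is easy to post-process. As a sketch (gpu_totals is a hypothetical helper), this adds up the per-GPU columns of one section for each snapshot, for example to get the total GPU power draw:

```shell
# gpu_totals SECTION FILE: for each snapshot print "Tnnnn,sum", adding up the
# per-GPU values of SECTION (e.g. GPU_WATTS). Header lines are skipped because
# their second field is a description rather than a Tnnnn tag.
gpu_totals() {
    awk -F, -v sec="$1" '
        $1 == sec && $2 ~ /^T[0-9]+$/ {
            sum = 0
            for (i = 3; i <= NF; i++) sum += $i
            print $2 "," sum
        }' "$2"
}
```

On the sample above, `gpu_totals GPU_WATTS gpu.nmon` prints T0001,262 and T0002,190.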

This is automatically graphed by the nmon Analyser, but other downstream tools would need updating to graph the new GPU data.

Phase 2 - Direct support via the NVIDIA C API coded into nmon

As I knew what I wanted, I knocked up a small test program that gets the data via NVML library function calls. Once that worked, I added the GPU stats code to nmon itself, so it calls the NVIDIA Management Library directly to get the same data.

To be blunt, this was pretty complex, as I suspect the NVML library was either not fully installed or a work-in-progress beta version. I could not find a C header file that matched the documentation, and it was equally hard to find where the API library was installed. However, I worked around these problems and got it running. Then I found a problem: if the library is initialised and the process then calls fork(), the NVML library only returns errors from then on. And guess what? nmon does just this. In data capture mode it forks to break the relationship with the controlling terminal, so that nmon does not stop when you log out. I had to change the code to postpone the initialisation until after the fork().

Next, we compile the new version of nmon with NVIDIA GPU support by adding a makefile target that defines NVIDIA_GPU (to include the new code) and links in the NVML library. I included enough data and function definitions from the documentation in the nmon code itself that I did not need the NVML header file I could not find. Not a neat solution, but it works. Here is the makefile addition:

    nmon_power_ubuntu140403_gpu: $(FILE)
    	gcc -o nmon_power_ubuntu140403_gpu $(FILE) $(CFLAGS) $(LDFLAGS) \
    	-D POWER -D KERNEL_2_6_18 \
    	-D NVIDIA_GPU /usr/lib/nvidia-352/libnvidia-ml.so

- Note: This was developed on Ubuntu 14.04.3; we still need to test it on RHEL 7.2, which is also supported on the S822LC with NVIDIA GPU accelerators.

- Note: Because of the extra code and library, an nmon binary compiled like this cannot run on a machine without the NVML library. It fails at start-up with a "missing library" message.
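Given that the library was hard to locate and that a GPU-enabled binary will not start without it, two quick checks can save time. `ldd ./nmon_power_ubuntu140403_gpu | grep 'not found'` shows whether the dynamic linker can resolve the NVML library on a target machine. To find where the library actually lives, a sketch like the one below searches a few directories. The default directory list (including the Ubuntu multiarch path) is an assumption, and find_nvml is a hypothetical name.

```shell
# find_nvml [DIR...]: print the first libnvidia-ml.so* found up to two levels
# below the given directories; return 1 if none is found.
# The default directory list is an assumption; adjust it for your distribution.
find_nvml() {
    if [ "$#" -eq 0 ]; then
        set -- /usr/lib /usr/lib64 /usr/lib/powerpc64le-linux-gnu /usr/local/cuda/lib64
    fi
    for dir in "$@"; do
        [ -d "$dir" ] || continue
        # -maxdepth is a GNU/BSD find extension, not POSIX
        hit=$(find "$dir" -maxdepth 2 -name 'libnvidia-ml.so*' 2>/dev/null | sort | head -n 1)
        if [ -n "$hit" ]; then
            printf '%s\n' "$hit"
            return 0
        fi
    done
    return 1
}
```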

In data capture mode, the GPU stats go straight into the regular nmon file: no messing about with the NMONSTART and NMONSNAP shell variables and scripts, or merging data files. As I used the External Data Collector version as the model, the output is in exactly the same format.

The on-screen mode works nicely too. We had an online debate about a suitable letter to toggle the display of the GPU stats. Unfortunately, "g", "G", "n" and "N" (I was thinking GPU or NVIDIA) are already used, but most thought "a" for Accelerator was a good choice.

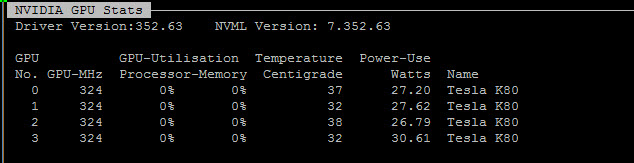

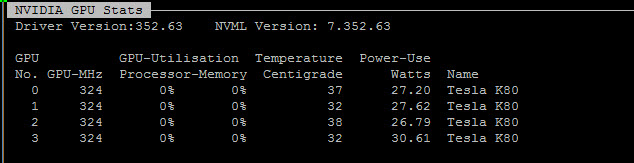

So what does it look like on-screen:

And then when running under heavy load it looks like this:

Notes:

- When the HPC workload stopped, it was interesting to watch the temperatures drop by 1 degree C every few seconds until they were back at the lower temperatures again.

- The GPUs certainly use a lot of electrical power: in this case about 550 Watts in total, which can change depending on the nature of the calculations and parallel maths they are running.

- The CPUs were at ~100%, but there was spare GPU memory for more optimisation.

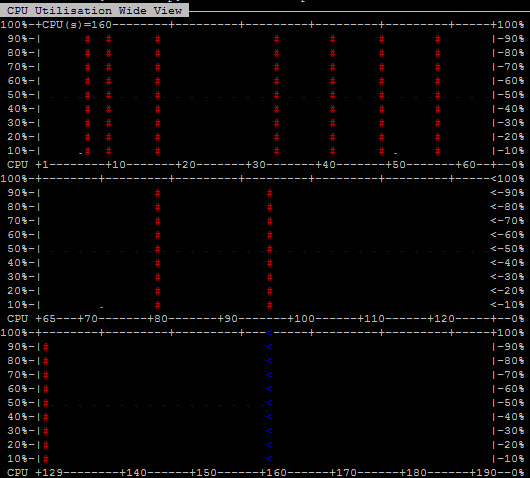

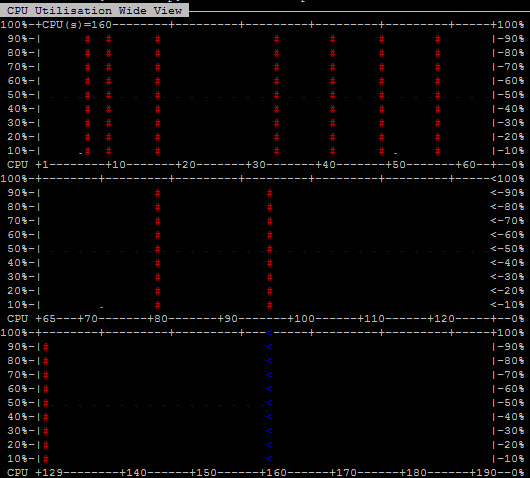

Another feature of the new nmon version 16a is the compact (or wide) CPU view. Below are the 160 logical CPUs (that is, threads) on this S822LC machine. This allows monitoring of all 160 CPU threads at the same time as the GPUs; not many of us have a screen capable of showing 160+ lines of output. In this case, the S822LC has 20 POWER8 cores spread across 2 POWER8 chips (10 cores per chip), and by default 8 threads per core are switched on. So 20 cores with SMT=8 gives 160 concurrent SMT threads:

Above, I started two handfuls of "yes" processes, and we can see the Linux kernel rightly running one "yes" on each core; over time they do wander around a little. We have seen this optimisation on AIX for years, and it's good to check that Linux matches it.
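The 160 figure is just chips × cores per chip × threads per core: 2 × 10 × 8 = 160. To check the same arithmetic on another machine, lscpu reports the three factors. Here is a sketch that multiplies them. logical_cpus is a hypothetical helper, and it assumes the English lscpu labels. lscpu's split between sockets and cores may not match the physical chip layout on every system, so compare the product with `nproc` rather than trusting each factor.

```shell
# logical_cpus: read `lscpu` output on stdin and print
# sockets x cores per socket x threads per core.
# Hypothetical helper; assumes English labels, tolerates indented lines.
logical_cpus() {
    awk -F: '
        { k = $1; sub(/^[ \t]+/, "", k); v = $2 + 0 }
        k == "Socket(s)"          { s = v }
        k == "Core(s) per socket" { c = v }
        k == "Thread(s) per core" { t = v }
        END { print s * c * t }'
}
```

For example, `lscpu | logical_cpus` would print 160 on the machine described above if lscpu reports 2 sockets, 10 cores per socket and 8 threads per core.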

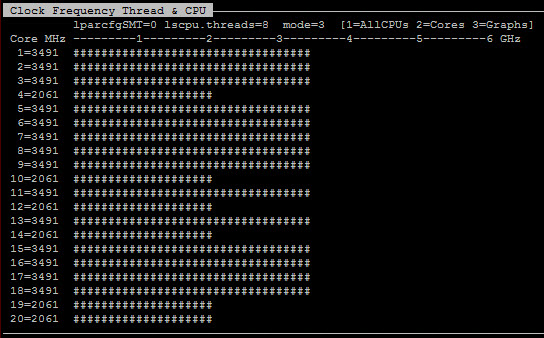

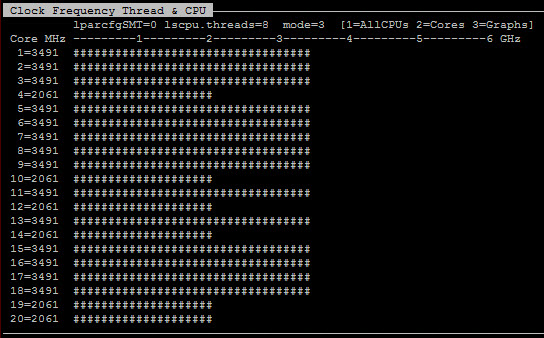

Below, we noticed that the GHz of the main POWER8 CPUs also varies over time; I first noticed that on my S812LC. The picture was captured when only some of the CPUs were busy. The idle POWER8 cores are running at ~2 GHz and the busy POWER8 cores at ~3.5 GHz.
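The article does not say where nmon reads its GHz figure from. If the kernel's cpufreq driver is active on the machine (an assumption), each logical CPU's current frequency is also visible in sysfs as scaling_cur_freq, in kHz, which makes it easy to spot the idle/busy spread from a shell. cpu_mhz_range is a hypothetical helper that reports the lowest and highest current frequency across all CPUs.

```shell
# cpu_mhz_range [ROOT]: print the lowest and highest current CPU frequency,
# read from cpufreq's per-CPU scaling_cur_freq files (values in kHz).
# ROOT defaults to the standard sysfs location; override it for testing.
cpu_mhz_range() {
    base=${1:-/sys/devices/system/cpu}
    cat "$base"/cpu[0-9]*/cpufreq/scaling_cur_freq 2>/dev/null |
        awk '{ v = $1 + 0
               if (NR == 1 || v < min) min = v
               if (NR == 1 || v > max) max = v }
             END { if (NR > 0) printf "min %d MHz, max %d MHz\n", min / 1000, max / 1000 }'
}
```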

It is a great day to be monitoring POWER8 based machines with NVIDIA GPU turbo chargers.

Update

Since this article, I have released nmon 16 at http://nmon.sourceforge.net

If you give it a try, please give me feedback: both the bad news and the "It works" good news.

I will do my best to fix problems quickly.

The on-screen numbers are in colour now too:

- - -The End - - -