AIX 7, VIOS and SSP - SSD/Flash Cache Best Practice

Is it worth implementing Flash Cache, and what difference does it make? This question is often asked, and the answers that spring to mind are:

- Ha ha ha ha ha ha! Oh, you are serious!

- Consultant standard answer number 1: "It depends"

- Hmm! Tricky!

I guess the problem is that the questioner really wants a prediction of future performance and whether it is going to be cost-effective. These questions have no answer.

If you look the topic up on Google, you find hits on the Official Announcement and a few cut-and-pastes of it, which amount to only a paragraph. The following section is my collection of information that might help you.

If you come across other information and recommendations, then email me (see the end of this web page) so that we all benefit.

Best Practice

- Don't try Flash cache on a small virtual machine

If you have, say, 1 or 2 CPUs, 4 GB of memory and 20 GB of disk, you get instant performance gains by adding memory and letting AIX cache the data there. It is simple to try, low risk and relatively inexpensive, with no need for SSD/Flash.

- Have more warm disk data than you can fit in RAM

If you have more data in regular I/O (not cold archive data sitting on the disk) than you could possibly afford to hold in memory, that is, multiple TB of warm data, then the SSD/Flash cache can boost data access.

- Don't Cache a Flash

If you are already using SAN-based Flash for your data, then the SSD/Flash cache is unlikely to help further - IMHO.

- Add 4 GB extra RAM.

- The cache management algorithm needs to keep a history of read accesses, and that needs memory, so don't starve your kernel.

The documents state a 4 GB minimum virtual machine size; running Flash Cache in a virtual machine that small would be bonkers!

That 4 GB minimum size means the developers did not test this feature with tiny virtual machines. My laptop has 16 GB of RAM!

- No TIPping = no Testing In Production

Test it before production use with a reproducible workload (benchmark), so you know the performance gain with certainty.

- Set up your SSD for high performance.

Don't add the SSD (or Flash) to an adapter that is already swamped with I/O. If the cache is not super fast, there is not much point.

- There are unexpected limitations on the number of disks and caches. I hope the limitations are reduced in later releases.

Only one cache pool.

Only one cache partition.

The command syntax suggests more could be possible but in practice, it gives you an error.

- Don't ask for a prediction of future performance levels.

- Cache Size recommendation - ha ha ha ha ha ha ha ha!

This setting creates a read cache.

You can imagine how that operates as well as I can.

It is unlikely that you can measure how often your workloads reread disk blocks or the total size of the data that has been reread.

If you can, then you can estimate how much disk I/O speeds will improve.

- Example from Jaqui Lynch (the friendly performance guru who sparked off the need for this blog). Jaqui is thinking of using four SSDs (700 GB each) with 20 TB of data on the file systems (unknown reread rate or working set size).

Cache to data ratio = (4 x 700) / 20,000 x 100 = 14%

My guess is that this is healthy and they have a good chance of a large performance gain.

If the ratio is only a handful of percent, it might still be OK if the working set is a lot less than the full 20 TB.

For example, one SSD at 700 GB = 3.5% of 20 TB, but if the hot part of the data used in any one day is 5 TB, then we get back to 14% (700 / 5,000 x 100).
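This ratio arithmetic is easy to get wrong, so here is a minimal Python sketch of it. It assumes decimal units (1 TB = 1,000 GB), as the example does. The function name `cache_to_data_ratio` is purely illustrative. The ratio says nothing about the reread rate, so treat it only as a rough sizing hint.

```python
def cache_to_data_ratio(cache_gb: float, data_gb: float) -> float:
    """Cache size as a percentage of the data (or hot working set) behind it."""
    if data_gb <= 0:
        raise ValueError("data_gb must be positive")
    # Multiply before dividing so the example values come out exact.
    return cache_gb * 100.0 / data_gb


if __name__ == "__main__":
    examples = [
        ("Four 700 GB SSDs vs 20 TB of data", 4 * 700, 20_000),
        ("One 700 GB SSD vs 20 TB of data", 700, 20_000),
        ("One 700 GB SSD vs 5 TB hot working set", 700, 5_000),
    ]
    # prints 14.0%, 3.5% and 14.0%
    for label, cache_gb, data_gb in examples:
        print(f"{label}: {cache_to_data_ratio(cache_gb, data_gb):.1f}%")
```

The last example shows why you should divide by the hot working set rather than the full data size. Measured against 5 TB of daily hot data, the same 700 GB SSD looks four times more generous.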

- The IBM Docs manual pages are here: https://www.ibm.com/docs/en/aix/7.1?topic=management-caching-storage-data

The cache_mgt AIX/VIOS command can be found here: https://www.ibm.com/docs/en/aix/7.2?topic=c-cache-mgt-command

Oldest AIX versions supported

- AIX 7.1 TL4 SP2

- AIX 7.2 TL0 SP0

Running the most up-to-date AIX, firmware, VIOS and HMC possible is good practice for best performance.
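You may want to screen many LPARs against these minimums. The usual source is `oslevel -s`, which reports a level such as 7100-04-02-1614 (AIX 7.1, TL 04, SP 02, build 1614). The Python sketch below compares that string with the two minimums listed above. The function names are hypothetical. Treating every release after 7.2 as qualifying is an assumption, not something stated here. The sketch does not check firmware, VIOS or HMC levels.

```python
import re

# Minimum levels for AIX Flash Cache quoted above: (version, release) -> (TL, SP).
MINIMUM_TL_SP = {(7, 1): (4, 2), (7, 2): (0, 0)}

# `oslevel -s` prints e.g. "7100-04-02-1614": AIX 7.1, TL 04, SP 02, build 1614.
OSLEVEL_S = re.compile(r"(\d)(\d)00-(\d{2})-(\d{2})-\d{4}")


def parse_oslevel_s(text: str) -> tuple[int, int, int, int]:
    """Return (version, release, TL, SP) parsed from `oslevel -s` output."""
    match = OSLEVEL_S.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"unrecognised oslevel -s output: {text!r}")
    version, release, tl, sp = (int(g) for g in match.groups())
    return version, release, tl, sp


def meets_flash_cache_minimum(text: str) -> bool:
    version, release, tl, sp = parse_oslevel_s(text)
    minimum = MINIMUM_TL_SP.get((version, release))
    if minimum is not None:
        return (tl, sp) >= minimum
    # ASSUMPTION: any release later than 7.2 qualifies; anything before 7.1 does not.
    return (version, release) > (7, 2)


if __name__ == "__main__":
    # Illustrative level strings only.
    for level in ("7100-04-01-1543", "7100-04-02-1614", "7200-01-01-1642"):
        print(level, meets_flash_cache_minimum(level))
```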

- SAN Offload

If your SAN is already busy, you get a double win: the cache gives a performance boost AND puts less pressure on the SAN, which means the non-cached disk I/O goes faster.

- RAS: Cache Failure

Some people are concerned that the SSD/Flash is not redundant and AIX does not mirror the cache to two devices to handle the case of a device failure.

This statement is true. Think of the cache as a turbocharger and the server sized to survive the workload without the cache.

One option is to provide hardware-based mirroring or RAID5 in the SAS RAID adapter (assuming that is in the configuration). Feedback is welcome.

The cache is read-only, which means it can be switched off instantly, either on your command or when a problem occurs. The next disk read I/O goes back to the real disks, which always hold the primary copy. The cache is never used to stage a write I/O, so there are no cache-flushing issues. On a write, the old copy in the cache is invalidated and the I/O goes to the disks. This design makes an instant cache stop 100% safe.
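The read-only behaviour described above can be modelled in a few lines of Python. This is a hypothetical toy: the class and method names are illustrative, and it promotes every missed block instead of using read history as the real AIX cache does. It shows why switching the cache off is always safe. A write invalidates the cached copy and goes straight to disk, so the cache never holds the only copy of any data.

```python
class ReadOnlyCacheModel:
    """Toy model of a read-only cache in front of a disk.

    HYPOTHETICAL illustration of the behaviour described above, not the AIX
    cache_mgt implementation: it promotes on every miss, with no history.
    """

    def __init__(self, disk: dict[int, bytes]) -> None:
        self.disk = disk                     # block -> data; always the primary copy
        self.cache: dict[int, bytes] = {}    # block -> clean copy of disk data
        self.enabled = True
        self.hits = 0

    def read(self, block: int) -> bytes:
        if self.enabled and block in self.cache:
            self.hits += 1
            return self.cache[block]
        data = self.disk[block]              # miss (or cache off): go to the real disk
        if self.enabled:
            self.cache[block] = data         # naive promote-on-miss
        return data

    def write(self, block: int, data: bytes) -> None:
        # Writes are never staged in the cache: drop any old copy, write the disk.
        self.cache.pop(block, None)
        self.disk[block] = data

    def switch_off(self) -> None:
        # Safe at any instant: nothing in the cache is newer than the disk.
        self.enabled = False
        self.cache.clear()

    def is_consistent(self) -> bool:
        return all(self.disk[b] == d for b, d in self.cache.items())


if __name__ == "__main__":
    model = ReadOnlyCacheModel({0: b"old", 1: b"other"})
    model.read(0)                       # miss: promoted into the cache
    model.read(0)                       # hit
    model.write(0, b"new")              # cached copy invalidated, disk updated
    assert model.read(0) == b"new" and model.is_consistent()
    model.switch_off()
    assert model.read(1) == b"other"    # reads carry on from the disk
    print("hits:", model.hits)          # prints "hits: 1"
```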

- RAS: Cache Offline

Design your server to provide a satisfactory service without the cache. Think of a car with a turbocharger. When the turbo is working, I can cruise along at 150 MPH (ignoring any legal issues). If the turbo stops, I am reduced to 70 MPH, but I can still get home at the end of the day. The turbo is a "great to have" feature, but I can survive without it.

- RAS: Cache Redundancy

I have system designers saying that the cache must be available 100% of the time or they will not meet their Service Level Agreements. So they demand that it is fully redundant and that IBM addresses this issue immediately. All I can suggest is that they call their IBM representative and submit a Request for Enhancement (RFE); we need client demand to make this a high priority.

- RAS: Machine Evacuation - LPM is IMHO Mandatory

You can use Live Partition Mobility only if you are using the virtual I/O on the VIOS to the SSD/Flash.

If you have direct SSD/Flash access on a physical adapter, LPM is not available, obviously. But in an emergency you could switch off the cache by removing the cache devices from AIX, removing the adapter from the LPAR and then using LPM. You then have no cache, unless the LPM target machine has the same hardware.

- VIOS-based Cache

Good news: VIOS-based Flash cache allows LPM (by cache removal). The bad news is that it is slower, due to the virtual I/O layer between the VM and the VIOS (although that layer is in normal daily use on most servers). I have no indicative numbers, so don't ask.

- RAS VIOS-based Cache

The cache is on one VIOS, and there is no dual path to an SSD on one VIOS. If the cache SSD is on a VIOS and you have to shut down that VIOS, the cache is switched off.

- As the cache I/O is mostly reads, it does not benefit much from SSDs attached via SAS adapters with write cache.

- On Scale-out POWER8 servers, you can use the SSD slots in the System Unit or SSDs placed in an EXP24S drawer.

- As the AIX SSD/Flash cache algorithm decides what to cache based on history, it takes time to select the blocks. In my simple tests, it took 5 minutes or more for performance to ramp up. With larger, more complex disk I/O patterns that change with the time of day, for example RDBMS access patterns, let it run for an hour. Larger caches could take 2 hours, or even over 24 hours, when the workload changes through the day, for example morning analysis, afternoon order processing and various batch runs overnight.
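The toy Python simulation below shows why a history-based cache needs warm-up time. It is NOT the AIX algorithm; the real promotion rules are not described in this article. Every name and parameter is a hypothetical assumption, including the "promote after 3 reads" rule and the workload that sends 80% of reads to a hot set. Interval 1 always shows 0% hits because no block has any history yet. The hit ratio then climbs as blocks accumulate enough reads to be promoted.

```python
import random
from collections import Counter


def simulate_warmup(intervals=8, reads_per_interval=2000, hot_blocks=2000,
                    total_blocks=20000, cache_blocks=2000, promote_after=3,
                    hot_fraction=0.8, seed=1):
    """Return the cache hit ratio seen in each interval of a synthetic workload.

    HYPOTHETICAL model: a block is promoted only after it has been read
    `promote_after` times (its "history"); promotion runs between intervals.
    """
    rng = random.Random(seed)
    heat = Counter()       # read count per block not yet in the cache
    cache = set()
    hit_ratios = []
    for _ in range(intervals):
        hits = 0
        for _ in range(reads_per_interval):
            if rng.random() < hot_fraction:
                block = rng.randrange(hot_blocks)       # hot working set
            else:
                block = rng.randrange(total_blocks)     # everything else
            if block in cache:
                hits += 1
            else:
                heat[block] += 1
        # Promote the hottest qualifying blocks while there is free cache space.
        free = cache_blocks - len(cache)
        hottest = [b for b, n in heat.most_common() if n >= promote_after]
        for block in hottest[:free]:
            cache.add(block)
            del heat[block]
        hit_ratios.append(hits / reads_per_interval)
    return hit_ratios


if __name__ == "__main__":
    for i, ratio in enumerate(simulate_warmup(), start=1):
        print(f"interval {i}: {ratio:.0%} hits")
```

In this toy model, a larger `promote_after` value or a wider hot set (as with mixed daytime and overnight workloads) stretches the warm-up period.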

At the Power Technical University in Rome, Nicolas Sapin (Oracle & AIX IT Specialist, IBM) presented his results of AIX caching with an Oracle RDBMS. The team achieved well over 3 times the transaction rate, with SQL statements taking a quarter of the time. The team pointed out that with a cache of around 30%+ of the database size, the AIX cache was approaching the performance of the whole database on a Flash Disk Unit, which costs more. Note that the hot data was roughly 30% of the database. Sessions and shared experience like this are typical of the Technical Universities, so don't miss them.

My thanks to the various AIX performance developers and designers for taking questions, delivering presentations and supporting testing:

- You know who you are; developers are typically reluctant to be named publicly.

Flash Cache Statistics documentation

In indirect conversations with the developers, they pointed out that the Flash Cache algorithm is similar to the algorithms used in IBM Easy Tier.

Here is a draft version of the statistics documentation, pending its addition to IBM Docs:

cache_mgt monitor get -hs

ETS Device I/O Statistics -- hdisk1

Start time of Statistics -- Mon Mar 27 07:10:41 2017

-----------------------------------------------------

Read Count: 152125803

Write Count: 79353626

Read Hit Count: 871

Partial Read Hit Count: 63

Read Bytes Xfer: 10963365477376

Write Bytes Xfer: 4506245999616

Read Hit Bytes Xfer: 48398336

Partial Read Hit Bytes Xfer: 5768192

Promote Read Count: 2033078104

Promote Read Bytes Xfer: 532959226494976

Explanation of fields with "cache_mgt monitor get":

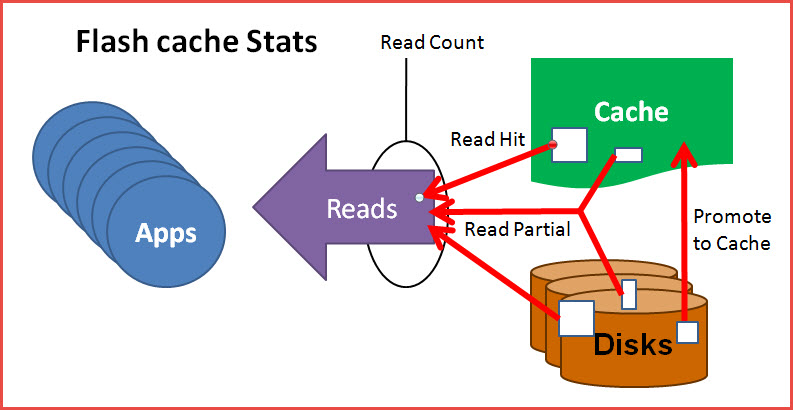

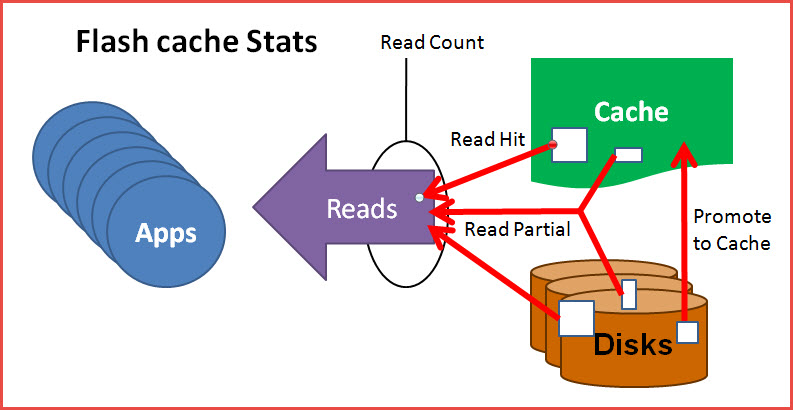

Read Count

The total number of read operations that were issued to the target device. It is the count for all applications that issued read commands that were sent to the SAN device or to cache. This number has no relation to the size of the requests. It is the count of separate read requests.

Read Hit Count

The total number of read operations that were issued to the target device that are full cache read hits. The read hit count is the total number of instances in which a read request is satisfied entirely by the cache. This number has no relation to the size of the requests. It is the actual count of separate read hit requests, and the value is a portion of the "Read Count."

Partial Read Hit Count

The total number of read operations that were issued, which are partial cache read hits. The partial read hit count is the total number of instances in which a read request had part, but not all, of the data requested in the cache. The remainder of the data not available in cache must be acquired from the SAN device. This number has no relation to the size of the requests. It is the actual count of separate read requests, and the value is a portion of the "Read Count."

Promote Read Count

This counter is the total number of read commands that were issued to the SAN as part of the promote into cache. This number is not tied to the number of promotes because a 1 MB read promote might be divided into multiple read requests, if the maximum transfer size to the SAN disk is less than the 1 MB fragment size.

Read Bytes Xfer

The total number of bytes that were read for the target device. It is the total bytes transferred for read commands that were issued from the applications into the driver, and represents the total byte count of read hits, partial read hits, and read misses.

Read Hit Bytes Xfer

The total number of bytes that were read through the target device that were full cache read hits.

Partial Read Hit Bytes Xfer

The total number of bytes that were read through the target device that were partial cache read hits.

Promote Read Bytes Xfer

This statistic is the total number of bytes that were read from the SAN for promotes.

Write Count

The total number of write operations that were issued to the target device. This number has no relation to the size of the requests. It is the actual count of separate write requests.

Write Bytes Xfer

The total number of bytes that were written to the target device. It is the total number of bytes transferred from all write commands that were issued from the applications into the device.
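"Read Hit Count" and "Partial Read Hit Count" are portions of "Read Count", so the most useful numbers are ratios. The Python sketch below parses output in the format of the draft sample above and computes hit percentages. It assumes every counter appears as "Label: integer" on its own line, exactly as in that sample. Real cache_mgt output on your AIX or VIOS level may differ, and the function names are hypothetical. In the sample above, full read hits are well under 0.01% of reads.

```python
import re

# Sample copied from the draft "cache_mgt monitor get -hs" output above.
SAMPLE = """\
ETS Device I/O Statistics -- hdisk1
Start time of Statistics -- Mon Mar 27 07:10:41 2017
-----------------------------------------------------
Read Count: 152125803
Write Count: 79353626
Read Hit Count: 871
Partial Read Hit Count: 63
Read Bytes Xfer: 10963365477376
Write Bytes Xfer: 4506245999616
Read Hit Bytes Xfer: 48398336
Partial Read Hit Bytes Xfer: 5768192
Promote Read Count: 2033078104
Promote Read Bytes Xfer: 532959226494976
"""

# ASSUMPTION: each counter is printed as "<Label>: <integer>" on its own line.
COUNTER_LINE = re.compile(r"\s*([A-Za-z][A-Za-z ]*?)\s*:\s*(\d+)\s*")


def parse_counters(text: str) -> dict[str, int]:
    counters = {}
    for line in text.splitlines():
        match = COUNTER_LINE.fullmatch(line)
        if match:
            counters[match.group(1)] = int(match.group(2))
    return counters


def percent(part: int, whole: int) -> float:
    return 100.0 * part / whole if whole else 0.0


def summarise(c: dict[str, int]) -> dict[str, float]:
    reads, writes = c["Read Count"], c["Write Count"]
    return {
        "Full read hits (% of reads)": percent(c["Read Hit Count"], reads),
        "Partial read hits (% of reads)": percent(c["Partial Read Hit Count"], reads),
        "Bytes from full hits (% of read bytes)":
            percent(c["Read Hit Bytes Xfer"], c["Read Bytes Xfer"]),
        "Reads (% of all I/Os)": percent(reads, reads + writes),
        "Average read size (KiB)": c["Read Bytes Xfer"] / reads / 1024 if reads else 0.0,
    }


if __name__ == "__main__":
    for name, value in summarise(parse_counters(SAMPLE)).items():
        print(f"{name}: {value:.4f}")
```

Count ratios and byte ratios can differ because request sizes vary, so it is worth watching both.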

This diagram might be helpful:

Finally:

- If you are giving AIX 7 SSD/Flash Cache a try, let me know how it went.

- Please also include the basic configuration, a summary of the workload type and the results of your tests.

Want more information?

1) IBM Docs:

2) Jaqui Lynch Articles in IBM Magazine