AI Explainability 360 is a comprehensive toolkit that offers a unified API to bring together:

- state-of-the-art algorithms that help people understand how machine learning makes predictions

- guides, tutorials, and demos in one interface

The initial release of AI Explainability 360 contains eight different algorithms, created by IBM Research. We invite the broader research community to contribute their own algorithms.

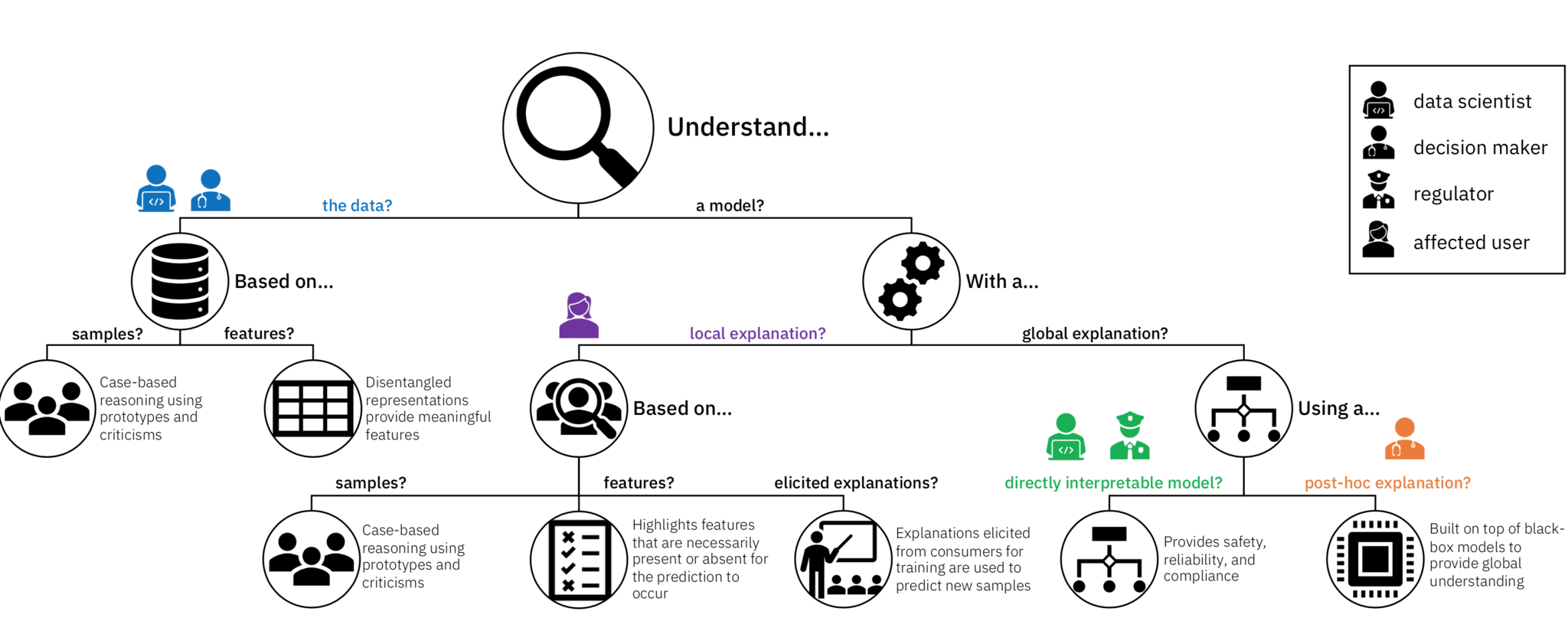

Algorithms include methods for understanding both data and models. Some algorithms directly learn models that people can interpret and understand. Other algorithms first train an inscrutable black box model and then explain it afterwards with another model. Some algorithms explain decisions on individual samples, whereas others explain the model as a whole.
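To make the distinction between explaining a whole model and explaining one decision concrete, here is a minimal sketch of a directly interpretable model: a hand-written rule in disjunctive normal form. It is not the AI Explainability 360 API, and the rule, feature names, thresholds, and helper functions are all hypothetical, chosen only for illustration.

```python
# Hypothetical loan-approval rule written as an OR of AND-clauses.
# Feature names and thresholds are invented for illustration only.
RULE = [
    [("income", ">=", 50_000), ("debt_ratio", "<", 0.4)],
    [("years_employed", ">=", 10)],
]

OPS = {">=": lambda a, b: a >= b, "<": lambda a, b: a < b}


def clause_holds(clause, sample):
    """True when every condition in the clause is satisfied by the sample."""
    return all(OPS[op](sample[feat], thr) for feat, op, thr in clause)


def predict(sample):
    """The model itself: approve when at least one clause holds."""
    return any(clause_holds(clause, sample) for clause in RULE)


def explain_global():
    """Global explanation: the entire model, readable as a single rule."""
    clauses = [" AND ".join(f"{f} {op} {t}" for f, op, t in c) for c in RULE]
    return "approve IF " + " OR ".join(f"({c})" for c in clauses)


def explain_local(sample):
    """Local explanation: indices of the clauses that fired for this sample."""
    return [i for i, clause in enumerate(RULE) if clause_holds(clause, sample)]


applicant = {"income": 62_000, "debt_ratio": 0.25, "years_employed": 3}
print(explain_global())
print(predict(applicant), explain_local(applicant))
```

Because the model is the rule, no second model is needed to explain it: the global explanation prints the rule, and the local explanation reports which clause was responsible for a given applicant.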

Why AI Explainability 360?

Black box machine learning models that cannot be understood by people (such as deep neural networks and large ensembles) are achieving impressive accuracy on various tasks and gaining widespread adoption. As they grow in popularity, explainability and interpretability, which permit human understanding of the machine’s decision-making process, are becoming more essential. In fact, according to an IBM Institute for Business Value survey, 68% of the global executives surveyed believe that customers will demand more explainability in the next three years.

Explainability is not a singular approach. There are many ways to explain how machine learning makes predictions, including:

- data vs. model

- directly interpretable vs. post hoc explanation

- local vs. global

- static vs. interactive

The appropriate choice depends on the persona of the consumer and the requirements of the machine learning pipeline. AI Explainability 360 differs from other open source explainability offerings through the diversity of its methods, its focus on educating a variety of stakeholders, and its extensibility via a common framework.

Example of two algorithms

Let’s look at two algorithms as an example.

- Boolean Classification Rules via Column Generation is an accurate, scalable method of directly interpretable machine learning that won the inaugural FICO Explainable Machine Learning Challenge.

- The Contrastive Explanations Method is a local post hoc method that addresses an important consideration of explainable AI that researchers and practitioners have often overlooked: explaining why a certain event happened instead of some other event.
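The contrastive question, "why this outcome instead of that one?", can be illustrated with a small brute-force sketch. This is not the Contrastive Explanations Method itself and does not use the toolkit's API. The scoring function, feature names, and candidate grids below are hypothetical stand-ins for a black box model, and the search simply tries nearby values of one feature at a time until the prediction flips.

```python
def black_box(sample):
    """Hypothetical opaque model: approve when an integer score reaches 100."""
    score = (2 * sample["income_k"]
             - 3 * sample["debt_pct"]
             + 5 * sample["years_employed"])
    return "approve" if score >= 100 else "deny"


def contrast(sample, feature, candidates, target="approve"):
    """Return the candidate value closest to the current one that yields
    the target outcome when only this feature changes, or None."""
    for value in sorted(candidates, key=lambda v: abs(v - sample[feature])):
        if black_box({**sample, feature: value}) == target:
            return value
    return None


applicant = {"income_k": 60, "debt_pct": 30, "years_employed": 2}

# Hypothetical grids of values to try for each feature.
grids = {
    "income_k": range(60, 121, 5),
    "debt_pct": range(0, 31, 5),
    "years_employed": range(2, 21),
}

contrasts = {f: contrast(applicant, f, grid) for f, grid in grids.items()}

print("prediction:", black_box(applicant))
for feature, value in contrasts.items():
    if value is not None:
        print(f"denied rather than approved because {feature} "
              f"is {applicant[feature]}, not {value}")
```

Each printed line answers the contrastive question for one feature by naming the nearest value that would have produced the other outcome. The explanation is local because it applies only to this applicant, and post hoc because it treats the model purely as a function to query.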

The AI Explainability 360 toolkit interactive experience provides a gentle introduction to explainability concepts and capabilities. The tutorials and other notebooks offer a deeper, data scientist-oriented introduction. The complete API documentation is also available. Because the toolkit is a comprehensive set of capabilities, it can be hard to figure out which algorithms are most appropriate for a given use case. To help, we created some guidance material that you can consult.

Why should I contribute?

As an open source project, the AI Explainability 360 toolkit benefits from a vibrant ecosystem of contributors, both from the technology industry and academia.

This is the first explainability toolbox that gives you a unified API, coupled with industry-relevant policy specifications and tutorials, to cover the many different ways of explaining machine learning. Bringing together the top explainability algorithms and metrics in the field will help accelerate both the scientific advancement of the field and the adoption of these techniques in real-world deployments.

We encourage you to contribute your metrics and algorithms. Please join the community, and read our contribution guidelines to get started as a contributor.