The Adversarial Robustness 360 Toolbox (ART), an open source software library, supports both researchers and developers in defending deep neural networks against adversarial attacks, making AI systems more secure. Its purpose is to allow rapid crafting and analysis of attack and defense methods for machine learning models.

The Adversarial Robustness 360 Toolbox provides implementations of many state-of-the-art methods for attacking and defending classifiers. It is designed to support researchers and AI developers in creating novel defense techniques and in deploying practical defenses for real-world AI systems. For AI developers, the library provides interfaces that support the composition of comprehensive defense systems using individual methods as building blocks.

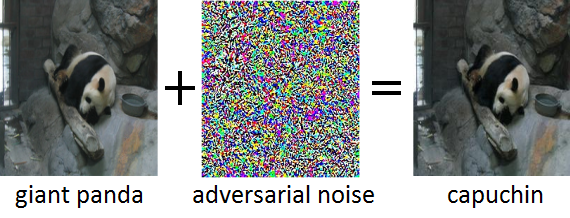

The adversarial example on the right was obtained by adding adversarial noise (middle) to a clean input image (left). While the added noise in the adversarial example is undetectable to a human, it leads the deep neural network to misclassify the image as “capuchin” instead of “giant panda.”
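
To make the idea of "adding adversarial noise" concrete, here is a minimal sketch of a sign-of-gradient perturbation (in the style of the fast gradient sign method, which is a swapped-in example and not named in this text) against a toy logistic classifier. The weights, input, and `eps` value are hypothetical, and the code uses plain Python rather than ART's API.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(x, w, b):
    """Probability of class 1 under a logistic model with weights w and bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def sign_gradient_perturb(x, y, w, b, eps):
    """Shift every feature by +/- eps in the direction that increases the loss."""
    p = predict_proba(x, w, b)
    # Gradient of the cross-entropy loss with respect to the input x
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad_x)]

# Hypothetical toy model and clean input (assumptions for illustration only)
w = [1.0, -2.0, 0.5]
b = 0.0
x_clean = [0.2, -0.1, 0.4]
y_true = 1.0

x_adv = sign_gradient_perturb(x_clean, y_true, w, b, eps=0.3)
p_clean = predict_proba(x_clean, w, b)  # above 0.5: classified as class 1
p_adv = predict_proba(x_adv, w, b)      # below 0.5: classified as class 0
print(p_clean, p_adv)
```

No feature moves by more than `eps`, yet the predicted class flips. In an image classifier the same principle applies per pixel, which is why the perturbation can stay visually negligible.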

ART is being developed to support multiple frameworks. This first release supports deep neural networks (DNNs) implemented in the TensorFlow and Keras deep learning frameworks. Future releases will extend support to other popular frameworks such as PyTorch and MXNet.

Why ART?

Adversarial examples pose an asymmetric challenge for attackers and defenders. An attacker needs only one successful attack that does not raise suspicion to collect a reward. A defender, however, has to develop strategies that guard the model against all known attacks and, ideally, for all possible inputs.

ART is developed with the goal of helping developers better understand and carry out:

- Measuring model robustness

- Model hardening

- Runtime detection
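
As a rough illustration of the first item, the sketch below computes one simple robustness measure: the smallest L-infinity perturbation that moves an input onto the decision boundary of a linear classifier, which equals |w·x + b| divided by the L1 norm of w. The model, the inputs, and the function name `linf_margin` are hypothetical, and this is not ART's API; the metrics ART itself provides are not described in this text.

```python
def linf_margin(x, w, b):
    """Smallest L-infinity perturbation that puts x on the boundary of sign(w.x + b)."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    l1_norm = sum(abs(wi) for wi in w)
    return abs(score) / l1_norm

# Hypothetical linear model and evaluation inputs (assumptions for illustration)
w = [1.0, -2.0, 0.5]
b = 0.0
inputs = [
    [0.2, -0.1, 0.4],
    [1.0, -1.0, 1.0],
    [0.1, 0.0, 0.0],
]

margins = [linf_margin(x, w, b) for x in inputs]
mean_margin = sum(margins) / len(margins)
print(margins, mean_margin)
```

A larger margin means an attacker needs a larger perturbation to change the prediction. For deep networks no such closed form exists, so robustness is usually estimated empirically, for example by running attacks and recording the perturbation size at which they succeed.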

Why should I contribute?

The goal of the open source Adversarial Robustness 360 Toolbox project is to create a vibrant ecosystem of contributors from both industry and academia. The main difference from similar ongoing efforts is the focus on defense methods and on the composability of practical defense systems. We hope the project stimulates research and development around the adversarial robustness of DNNs and advances the deployment of secure AI in real-world applications. Please share your experience working with the Adversarial Robustness 360 Toolbox, as well as any suggestions for future enhancements.