Installing on Airflow cluster

To provide observability over your Airflow DAGs, Databand integrates with different Apache Airflow deployment types. For the steps specific to your type of Airflow deployment, use one of the following links:

- Integrating standard Apache Airflow cluster

- Integrating Astronomer

- Integrating Amazon Managed Workflows Airflow

- Integrating Google Cloud Composer

Before you proceed with the integration, make sure you have network connectivity between Apache Airflow and Databand (from Apache Airflow to the Databand Server).
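A quick way to confirm that connectivity is to attempt a TCP connection from an Airflow host to the Databand server. The following is a minimal sketch using only the Python standard library; the function names and the example URL are hypothetical, and a successful TCP connection only shows that the port is reachable, not that the integration is configured correctly.

```python
import socket
from typing import Tuple
from urllib.parse import urlsplit


def host_and_port(url: str) -> Tuple[str, int]:
    """Extract the host and TCP port from a URL, defaulting the port by scheme."""
    parts = urlsplit(url)
    if parts.hostname is None:
        raise ValueError(f"no host in URL: {url!r}")
    default_port = 443 if parts.scheme == "https" else 80
    return parts.hostname, parts.port or default_port


def can_reach(url: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    host, port = host_and_port(url)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, DNS failures, and timeouts.
        return False
```

Run it on the machine where the Airflow scheduler runs, for example `can_reach("https://databand.example.com")`, replacing the placeholder URL with the address of your own Databand server.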

Standard Apache Airflow cluster

Installing new Python packages on managed Airflow environments triggers an automatic restart of the Airflow scheduler.

Follow the Installing Python SDK manual to install the Databand dbnd-airflow-auto-tracking Python package into your cluster's Python environment. To keep package updates from silently moving you to a different version, pin the version of the Python package by running the following command:

pip install dbnd-airflow-auto-tracking==<version number>

Check the Python repository for the most recent version of the Python package.

You might have to change your Dockerfile or requirements.txt.
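After installation, you may want to confirm that the version in the environment is the one you pinned. The sketch below uses `importlib.metadata` from the Python standard library (Python 3.8 or later); the helper names and the `lookup` parameter are illustrative assumptions, not part of the Databand SDK.

```python
from importlib import metadata
from typing import Callable, Optional

PACKAGE = "dbnd-airflow-auto-tracking"


def installed_version(
    package: str = PACKAGE,
    lookup: Callable[[str], str] = metadata.version,
) -> Optional[str]:
    """Return the installed version of the package, or None if it is missing."""
    try:
        return lookup(package)
    except metadata.PackageNotFoundError:
        return None


def matches_pin(
    pinned: str,
    package: str = PACKAGE,
    lookup: Callable[[str], str] = metadata.version,
) -> bool:
    """Return True only if the installed version equals the pinned version."""
    return installed_version(package, lookup) == pinned
```

Call `matches_pin("<version number>")` with the same version string that you passed to `pip install`. The `lookup` parameter exists only so that the check can be exercised without the package being installed.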

Airflow 2.0+ support

For Databand tracking to work properly with Airflow 2.0+, you need to disable lazily loaded plug-ins. To do so, set the core.lazy_load_plugins configuration option to False, for example by setting the environment variable AIRFLOW__CORE__LAZY_LOAD_PLUGINS=False.

For more information, see the Airflow plug-ins documentation.
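To check the setting from inside the Airflow environment, you can inspect the environment variable directly. This is a minimal sketch: it looks only at the environment variable (not at airflow.cfg), the function name is hypothetical, and the set of accepted spellings for "false" is an assumption about how Airflow parses boolean options.

```python
import os
from typing import Mapping, Optional

ENV_VAR = "AIRFLOW__CORE__LAZY_LOAD_PLUGINS"


def lazy_load_disabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if the environment variable turns lazy plug-in loading off."""
    env = os.environ if env is None else env
    # An unset variable counts as "not disabled": lazy loading is on by default.
    value = env.get(ENV_VAR, "").strip().lower()
    return value in {"false", "f", "0"}
```

Calling `lazy_load_disabled()` with no arguments reads the current process environment. If it returns False and you set the option in airflow.cfg instead, check that file separately.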

Airflow 2.1.0, 2.1.1, 2.1.2 - Airflow HTTP communication

If you use Airflow 2.1.0, 2.1.1, or 2.1.2, verify that the apache-airflow-providers-http package is installed, or consider upgrading your Airflow.
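The check described above can be sketched in Python. The helper names are hypothetical; the version list and the package name come from this section, and `importlib.metadata` requires Python 3.8 or later.

```python
from importlib import metadata
from typing import Callable

AFFECTED_AIRFLOW_VERSIONS = {"2.1.0", "2.1.1", "2.1.2"}
HTTP_PROVIDER = "apache-airflow-providers-http"


def needs_http_provider_check(airflow_version: str) -> bool:
    """Return True for the Airflow versions that this section calls out."""
    return airflow_version.strip() in AFFECTED_AIRFLOW_VERSIONS


def http_provider_installed(
    lookup: Callable[[str], str] = metadata.version,
) -> bool:
    """Return True if the HTTP provider package is installed."""
    try:
        lookup(HTTP_PROVIDER)
        return True
    except metadata.PackageNotFoundError:
        return False
```

In an Airflow environment, you could pass `metadata.version("apache-airflow")` to `needs_http_provider_check` and call `http_provider_installed()` only when it returns True.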

Astronomer

With Astronomer you can build, run, and manage data pipelines-as-code at enterprise scale.

You can install the dbnd-airflow-auto-tracking library by customizing the Astronomer Docker image, rebuilding it, and deploying it. Check the manual for more details on how to do that.

In your Astronomer folder, add the following line to your requirements.txt file:

dbnd-airflow-auto-tracking==REPLACE_WITH_DATABAND_VERSION

Redeploying the Airflow image triggers a restart of your Airflow scheduler.
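If you maintain requirements.txt from a script, the pinned entry can be added or updated programmatically. The following is a minimal sketch, not an Astronomer or Databand tool: `pin_requirement` is a hypothetical helper that works on the text of the file and replaces any existing entry for the package with a single pinned line.

```python
import re

PACKAGE = "dbnd-airflow-auto-tracking"


def pin_requirement(requirements_text: str, version: str, package: str = PACKAGE) -> str:
    """Return requirements text with exactly one `package==version` entry."""
    pinned = f"{package}=={version}"
    kept = []
    replaced = False
    for line in requirements_text.splitlines():
        # The requirement name is everything before the first specifier character.
        name = re.split(r"[=<>!~\[; ]", line.strip(), maxsplit=1)[0]
        if name.lower() == package.lower():
            if not replaced:
                kept.append(pinned)  # replace the first existing entry in place
                replaced = True
            # any further duplicate entries are dropped
        else:
            kept.append(line)
    if not replaced:
        kept.append(pinned)
    return "\n".join(kept) + "\n"
```

Read the file, pass its contents and your Databand version to `pin_requirement`, and write the result back before you rebuild the image.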

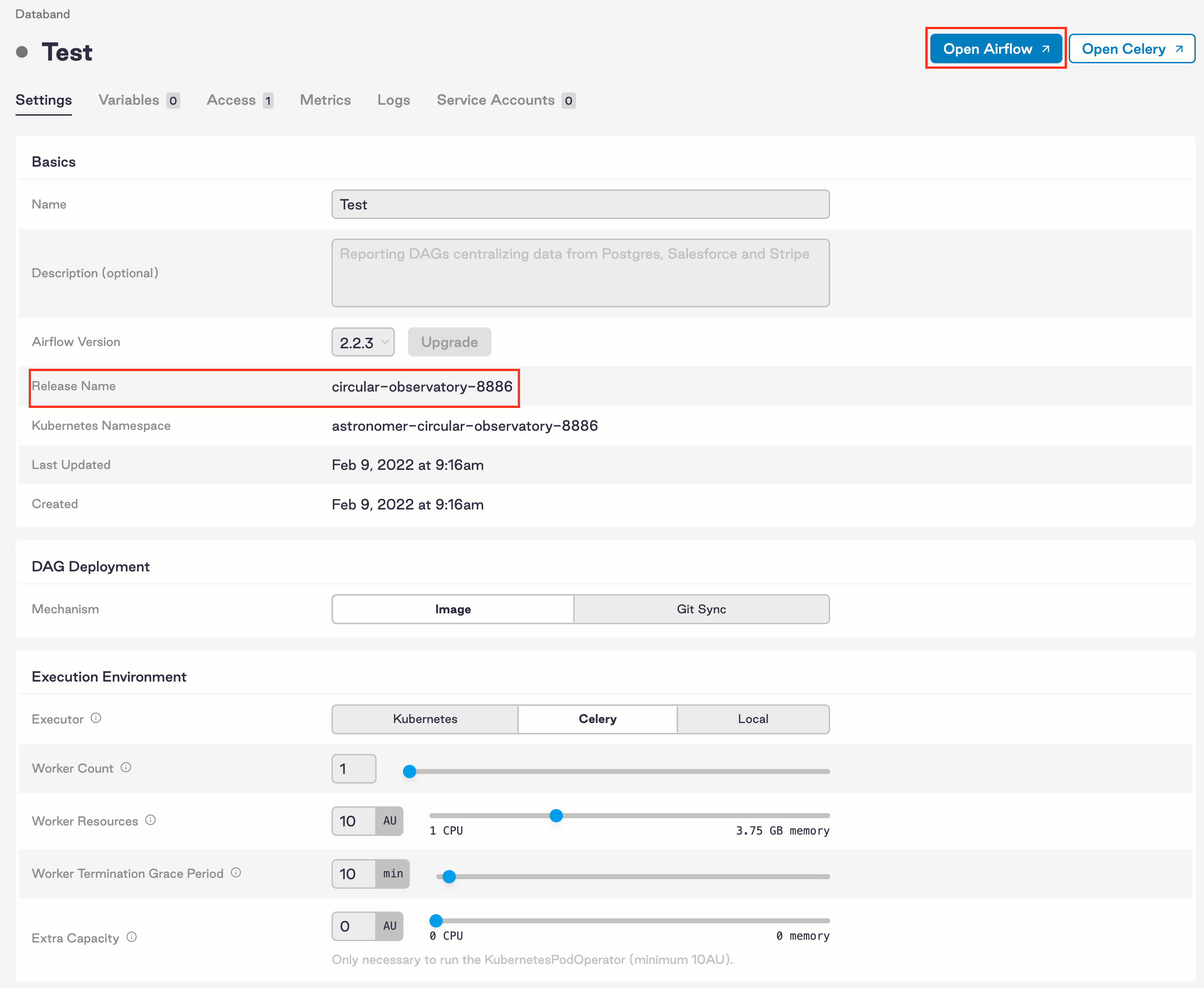

Astronomer Airflow URL

To get the Airflow URL:

- Go to the Astronomer control page and select the Airflow deployment.

- Click Open Airflow and copy the URL without the /home suffix. The URL is provided in the following format:

http://deployments.{your_domain}.com/{deployment-name}/airflow

The Astronomer UI shows your {deployment-name} as Release Name.
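If you copy the URL straight from the browser, the /home suffix can be removed with a small helper. This is a sketch using the Python standard library; the function name and the example domain are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def airflow_base_url(copied_url: str) -> str:
    """Strip a trailing /home (and any query or fragment) from an Airflow UI URL."""
    parts = urlsplit(copied_url.strip())
    path = parts.path.rstrip("/")
    if path.endswith("/home"):
        path = path[: -len("/home")]
    # Rebuild the URL from scheme, host, and cleaned path only.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))
```

For example, `airflow_base_url("http://deployments.example.com/my-release/airflow/home")` returns `http://deployments.example.com/my-release/airflow`, which matches the format shown above.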

When you create a Databand Airflow integration for Airflow deployed on Astronomer:


Amazon Managed Workflows

Amazon Managed Workflows is a managed Apache Airflow service that enables setting up and operating end-to-end data pipelines in the AWS cloud at scale. To integrate with AWS:

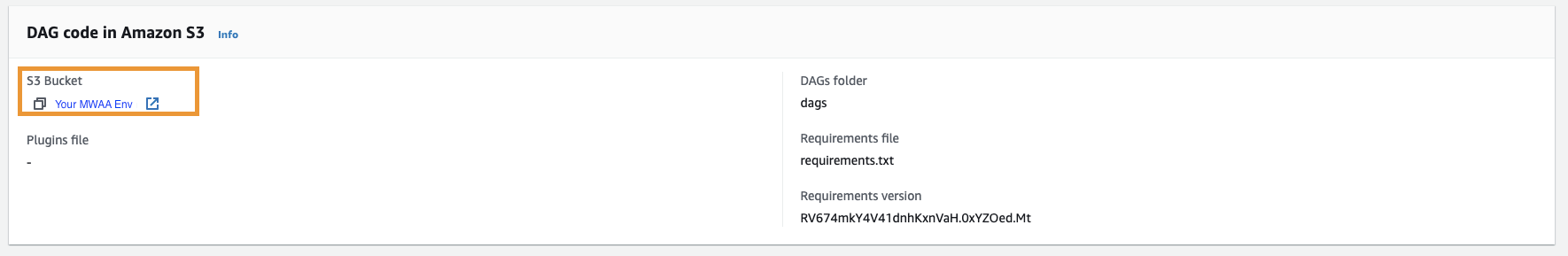

- Go to AWS MWAA > Environments > {mwaa_env_name} > DAG code in Amazon S3 > S3 Bucket.

- In MWAA’s S3 bucket, update your requirements.txt file.

- Install the package by adding the following line to requirements.txt:

dbnd-airflow-auto-tracking==REPLACE_WITH_DATABAND_VERSION

- Update the requirements.txt version in the MWAA environment configuration. Check the table to see the list of supported platform versions.

Table 1. A list of supported platform versions for an integration with AWS

| Platform | Supported version | Notes |
|---|---|---|
| Apache Airflow | 1.10.15 | Supported only with Python 3.7 |
| Apache Airflow | 2.2.x to 2.7.x | |

Saving this change to your MWAA environment configuration triggers a restart of your Airflow scheduler.

For more information on integration, see Installing Python dependencies - Amazon Managed Workflows for Apache Airflow. For Databand installation details, check Installing DBND.

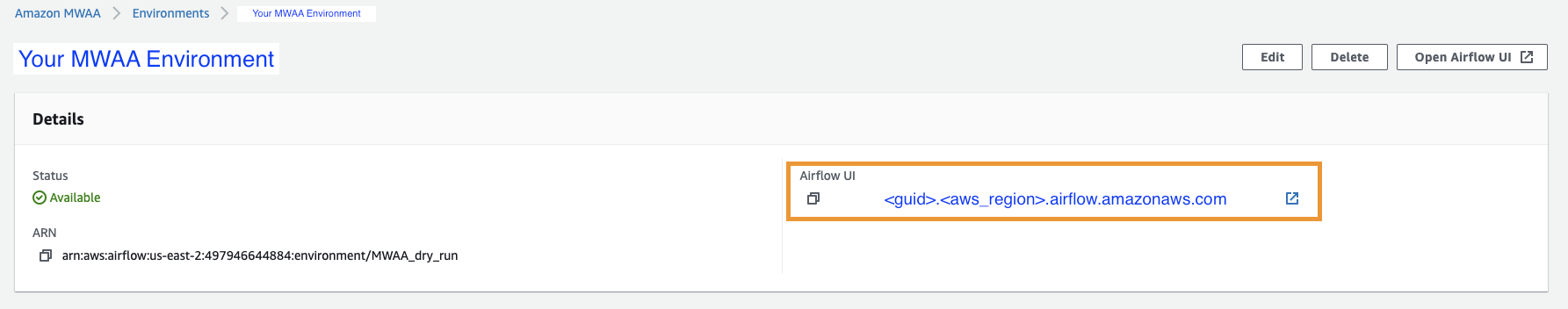

MWAA URL

The Airflow URL can be located in the AWS Console. To get the URL, go to AWS MWAA > Environments > {mwaa_env_name} > Details > Airflow UI.

The URL is provided in the following format:

https://<guid>.<aws_region>.airflow.amazonaws.com

Google Cloud Composer

With Google Cloud Composer, which is a fully managed data workflow orchestration service, you can author, schedule, and monitor pipelines.

Before you integrate Cloud Composer with Databand, make sure you have your Cloud Composer URL.

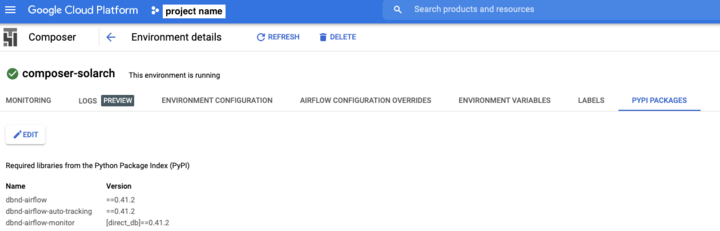

To integrate with Google Cloud Composer:

- Update your Cloud Composer environment's PyPI packages with the following entry:

dbnd-airflow-auto-tracking==REPLACE_WITH_DBND_VERSION

- Replace REPLACE_WITH_DBND_VERSION with the Databand version that you're using (for example, 0.61.0).

For that version, the entry looks like the following:

dbnd-airflow-auto-tracking==0.61.0

See Installing DBND for more details. Saving this change to your Cloud Composer environment configuration triggers a restart of your Airflow scheduler.

For more information, see Installing a Python dependency from PyPI.

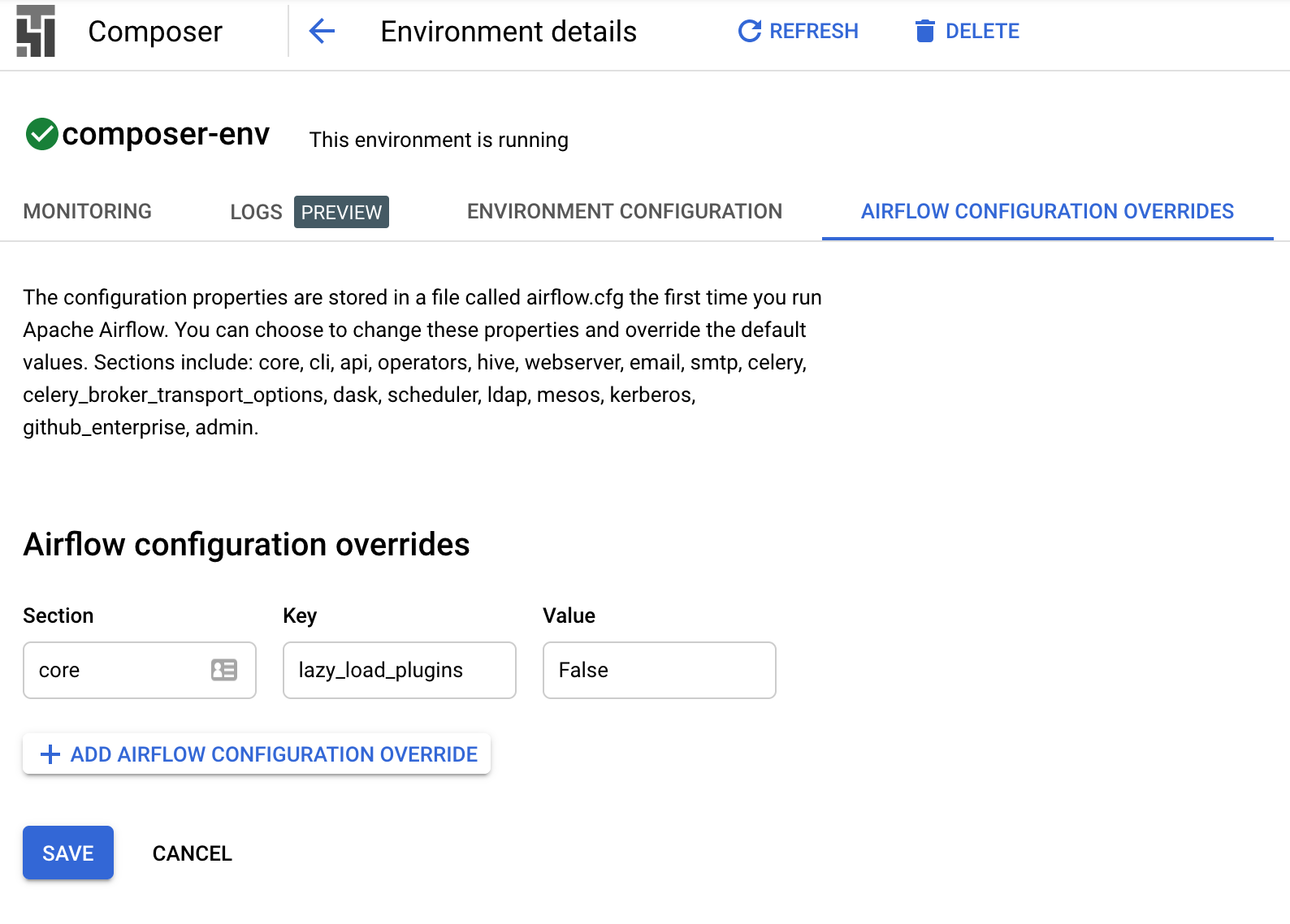

For Databand tracking to work properly with Airflow 2.0+, you need to disable lazily loaded plug-ins. To disable lazily loaded plug-ins, use the configuration setting: core.lazy_load_plugins=False.

You can also disable lazily loaded plug-ins in the Google Cloud Composer environment configuration, as the screenshot in this section shows.

Cloud Composer URL

The Cloud Composer URL can be found in the GCloud Console: Composer > {composer_env_name} > Environment Configuration > Airflow web UI.

The URL is provided in the following format:

https://<guid>.appspot.com