
Resilience in edge operations

27 January, 2022 | Written by: Chris Nott and Richard Davies

Categorized: Perspectives


As we discussed in our first blog post, defence is on the cusp of a paradigm shift with the development of Multi Domain Integration (MDI), in which many assets are connected, often via sensors, in a ‘network of networks’. In another post, we explored how digital engineering extends to support in-service assets and the digital twin.

This model implies heavy processing of data at a hub, where a command and control (C2) centre processes diverse data across a range of dimensions, both virtual and physical, to meet defence and intelligence needs. The same data can also support operational management of an asset: calibration, servicing and repairs.

Hub processing

At the hub, there might be a DevSecOps-enabled platform producing continuous products (e.g. functionality upgrades) and continuous insights (e.g. situation monitoring).

One could foresee such insights driving central decision making, for example through predictive models based on ‘deductive’ processing, or through inductive logic that ‘infers’ a probable conclusion.

- Inference might identify a potential threat from apparently unrelated information, bound together by experience that an artificial intelligence (AI) has ‘learnt’. For example: the last time this set of events happened, an asset like this failed and the solution was x.

- Deduction is perhaps simpler, using rules to deduce that if a part is old and getting hot, there is a known 80% chance it will need replacing soon.
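To make the distinction concrete, here is a minimal Python sketch. The deductive side encodes the ‘old and hot means an 80% chance of replacement’ rule from the text; the inductive side is approximated by a hypothetical 1-nearest-neighbour lookup over past cases, standing in for an AI’s learnt experience. The thresholds, feature names and history are illustrative assumptions, not taken from any real system.

```python
from dataclasses import dataclass
from math import dist
from typing import Optional, Tuple

# Hypothetical rule thresholds, chosen for illustration only.
AGE_LIMIT_YEARS = 10.0
TEMP_LIMIT_C = 80.0
KNOWN_FAILURE_PROBABILITY = 0.8  # the 'known 80% chance' in the text


@dataclass
class PartReading:
    age_years: float
    temperature_c: float


def deduce_replacement_risk(reading: PartReading) -> Optional[float]:
    """Deduction: an explicit rule. Old AND hot implies the known failure probability."""
    if reading.age_years > AGE_LIMIT_YEARS and reading.temperature_c > TEMP_LIMIT_C:
        return KNOWN_FAILURE_PROBABILITY
    return None  # the rule does not fire, so nothing can be deduced


# Inference: past situations (age, temperature, vibration) and what happened next.
# This hypothetical history stands in for the AI's 'learnt' experience.
HISTORY = [
    ((12.0, 85.0, 3.0), "bearing failure: replace bearing"),
    ((2.0, 60.0, 0.5), "no fault"),
    ((8.0, 75.0, 2.5), "seal degradation: replace seal"),
]


def infer_outcome(features: Tuple[float, float, float]) -> str:
    """Inference: return the outcome of the most similar past situation (1-nearest neighbour)."""
    _, outcome = min(HISTORY, key=lambda case: dist(case[0], features))
    return outcome


print(deduce_replacement_risk(PartReading(age_years=12, temperature_c=85)))  # 0.8
print(infer_outcome((11.0, 84.0, 2.8)))  # bearing failure: replace bearing
```

A real model would normalise its features (temperature dominates the unscaled distance here) and learn from far more history. The point is only that the rule is explicit, whereas the inference leans on similarity to past experience.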

This overall picture has merit. A military commander, or an operational executive of a complex commercial supply chain or of a manufacturer (supporting through-life management), would probably relish rich data that provides near real-time insights and recommendations to support decision making. AI could also make decisions itself, e.g. ordering a spare and booking a technician.

Edge processing

There is a further dimension, however: the emergence of ‘processing at the edge’, where insights are obtained and processed within an isolated asset, or ultimately a periodically disconnected asset.

In this way, a remote asset might, for example, capture rich information from a variety of its sensors and then apply the deductive or inductive reasoning described above to operational or intelligence scenarios. The advantage is an autonomous capability that is more resilient to threats.

But edge processing is more than this. A processor at the edge can exchange data with a hub under certain conditions: for example, only when secure communication is available, only when the asset is in a certain secure location, or both. The word ‘exchange’ is important because the connection works in both directions: it can be used to deliver code upgrades and to populate statistical models and insights. In the hub, this would be enabled by a DevSecOps platform. The two-way transfer of data and the use of common models also play to the digital twin concept.
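The conditional, two-way exchange described above can be sketched in a few lines of Python. Everything here is hypothetical: the policy names, the data classes and the single integer model version are simplifications chosen for illustration, not part of OpenShift or any IBM product. The key behaviour is that when the policy is not satisfied, the edge simply carries on autonomously and keeps its outbox.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class ExchangePolicy(Enum):
    SECURE_LINK = "secure_link"          # exchange only over secure communications
    SECURE_LOCATION = "secure_location"  # exchange only when in a secure location
    BOTH = "both"                        # require both conditions


@dataclass
class AssetState:
    secure_link_up: bool
    in_secure_location: bool


@dataclass
class EdgeNode:
    model_version: int
    outbox: List[str] = field(default_factory=list)  # insights awaiting the hub


@dataclass
class Hub:
    latest_model_version: int
    received: List[str] = field(default_factory=list)


def may_exchange(state: AssetState, policy: ExchangePolicy) -> bool:
    if policy is ExchangePolicy.SECURE_LINK:
        return state.secure_link_up
    if policy is ExchangePolicy.SECURE_LOCATION:
        return state.in_secure_location
    return state.secure_link_up and state.in_secure_location


def exchange(edge: EdgeNode, hub: Hub, state: AssetState, policy: ExchangePolicy) -> bool:
    """Two-way exchange, performed only when the policy allows it."""
    if not may_exchange(state, policy):
        return False  # stay autonomous and keep buffering locally
    hub.received.extend(edge.outbox)  # edge -> hub: data and insights
    edge.outbox.clear()
    # hub -> edge: pick up a newer code or model version, if there is one
    edge.model_version = max(edge.model_version, hub.latest_model_version)
    return True


edge = EdgeNode(model_version=3, outbox=["overheat trend on pump 2"])
hub = Hub(latest_model_version=5)
state = AssetState(secure_link_up=True, in_secure_location=False)
print(exchange(edge, hub, state, ExchangePolicy.BOTH))         # False: not in a secure location
print(exchange(edge, hub, state, ExchangePolicy.SECURE_LINK))  # True
print(edge.model_version, hub.received)  # 5 ['overheat trend on pump 2']
```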

Edge processing could also take the form of a mini hub connected to a limited number of assets, or of a discrete network of multiple assets, each with its own edge processor.

Overall, hub and edge processing has yet to mature, but it will change how complex information is handled, how assets are managed, and how and where decisions are made. In some future state, AI will identify, respond to and act on risks and issues, much like the human immune system. But the frequency of autoimmune disorders in humans might suggest that care will need to be taken!

Implementation patterns

IBM and Red Hat have been developing technologies that start to meet the demanding challenges faced in defence. Characteristics of the environment include disconnected, intermittent and high-latency networking, plus IT constraints on size, weight and power.

Red Hat OpenShift provides consistent management and operations for containerised applications across cloud providers. It is also a ubiquitous platform that embraces edge infrastructure, which matters because our defence clients want to extend what they’ve built out to the edge.

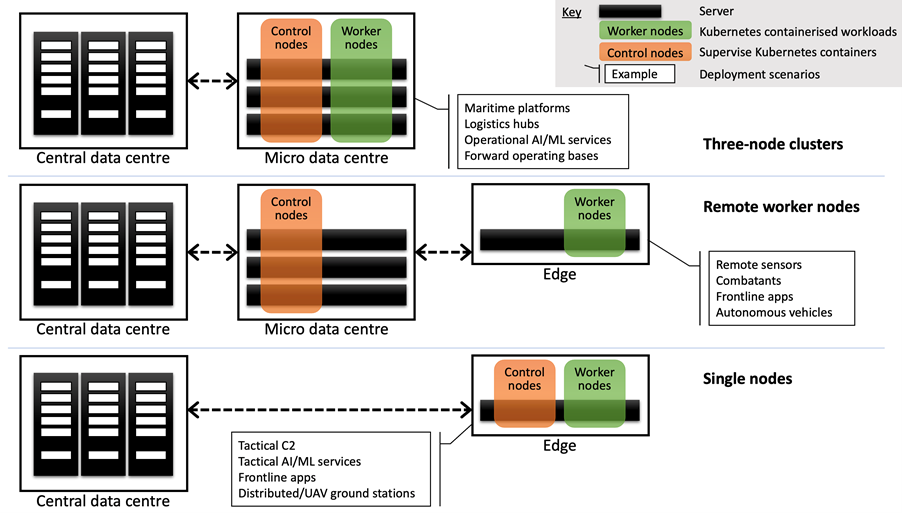

OpenShift gives defence organisations the ability to build, modify and mobilise functionality for managing assets quickly, for example in response to a threat. In figure 1 below, we illustrate three edge architectural patterns that can be assembled as part of a broader Red Hat OpenShift solution around a central hub. Each pattern is made up of some or all of the following:

- Central data centres running the main applications of a defence organisation, probably in-country

- Micro data centres, such as a base or a complex ship, where operational decisions are made

- Edge devices such as a camera, or platforms such as a vehicle where tactical decisions might be made

The central data centre or the micro data centre can perform the role of the hub.

Figure 1: Edge deployment patterns

The three patterns shown using Red Hat OpenShift are as follows:

- Three-node clusters, each comprising three servers, offer a smaller footprint while retaining high availability for OpenShift. Three servers offer greater availability, processing power and resilience than fewer servers would. This might suit multiple solutions aggregated for operational decisions on a ship.

- Remote worker nodes are individual servers supervised by a local OpenShift control cluster. This might be a control node supporting a remote device such as a camera or a sensor.

- Single-node deployments condense OpenShift onto one server without sacrificing features. This could suit a single application (e.g. tactical C2) linked to the hub.
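Why three servers rather than two? OpenShift keeps its cluster state in etcd, which only accepts writes while a majority (quorum) of its members is reachable. The arithmetic below is a small Python sketch of that majority rule, not OpenShift code: a single-node deployment tolerates no control-plane failure, two members are no better than one, and three members tolerate one failure. Remote worker nodes do not add etcd members, so their resilience depends on the control plane that supervises them.

```python
def quorum(members: int) -> int:
    """Smallest majority of etcd members needed to keep accepting writes."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many control-plane members can fail while a majority survives."""
    return members - quorum(members)


for members in (1, 2, 3):
    print(f"{members} member(s): quorum {quorum(members)}, "
          f"tolerates {tolerated_failures(members)} failure(s)")
# 1 member(s): quorum 1, tolerates 0 failure(s)
# 2 member(s): quorum 2, tolerates 0 failure(s)
# 3 member(s): quorum 2, tolerates 1 failure(s)
```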

IBM and Red Hat have also combined edge computing and AI, treating them as one, to provide increasing levels of autonomous intelligence that is calibrated and refreshed when linked to the hub. By examining edge and AI in tandem, solutions can be architected to handle network disruptions and the loss of data between the edge and the data centre.

One recent example is the KubeFrame for AI-Edge provided by Carahsoft. This is a three-node portable hardware and software cluster architected to handle unstable networks. It operates in a self-contained fashion, making it easier to move equipment from a vehicle onto a ship, for example.
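One common way to tolerate network disruption is store-and-forward: the edge keeps working, queues what it would have sent, and forwards it once the link returns. The Python class below is a minimal, hypothetical sketch of that idea; it is not how KubeFrame, OpenShift or IEAM implement it. The bounded buffer makes the trade-off explicit: if an outage outlasts the buffer, the oldest data is lost, and the loss is counted rather than hidden.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer edge messages while the link is down; forward them in order when it returns."""

    def __init__(self, capacity: int):
        # A bounded buffer: when full, the oldest message is discarded to make room.
        self._queue: Deque[dict] = deque(maxlen=capacity)
        self.dropped = 0  # messages lost to overflow, so the loss is visible

    def record(self, message: dict) -> None:
        if len(self._queue) == self._queue.maxlen:
            self.dropped += 1
        self._queue.append(message)

    def pending(self) -> int:
        return len(self._queue)

    def flush(self, send: Callable[[dict], bool]) -> int:
        """Send queued messages oldest-first; stop at the first failure and keep the rest."""
        sent = 0
        while self._queue:
            if not send(self._queue[0]):
                break  # link dropped mid-flush; retry on the next flush
            self._queue.popleft()  # remove only after a confirmed send
            sent += 1
        return sent


buffer = StoreAndForward(capacity=3)
for seq in range(4):  # four readings during an outage
    buffer.record({"seq": seq})
print(buffer.dropped, buffer.pending())  # 1 3: seq 0 was discarded
```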

Red Hat Advanced Cluster Management (ACM) and IBM Edge Application Manager (IEAM) combine to manage a deployed solution: ACM deploys and manages OpenShift clusters across multiple KubeFrames, and IEAM performs application management across the topology.

In the future, MicroShift will facilitate additional opportunities for portability and management at the edge.

Defence use cases

IBM and Red Hat are seeing several interesting use cases:

- Aircraft predictive maintenance: Real-time insight into the status of deployed and at-rest aircraft is derived from data integration and model development tooling running on top of OpenShift. Maintenance can be predicted from certain criteria, giving operators a heads-up rather than waiting for something to go wrong or for it to become an immediate need that disrupts missions. The three-node cluster pattern would apply to this example.

- Afloat systems: Hosting a simplified data centre on ships to run large numbers of applications in a disconnected state. Application development and cluster management would occur in a more traditional data centre, and the results would then be pushed to KubeFrame devices running OpenShift on ships. New capabilities can be quickly built and deployed in response to evolving situations, and providing AI/ML services in such environments supports autonomous systems at the tactical edge. The three-node cluster pattern would form the basis for this example, extended using the other two.

- Smaller footprint for increased technology capabilities: Single Node OpenShift is deployed on a platform such as a fighter jet or vehicle where space is at a premium. A set of core applications needs to be available to operators in real time without requiring any additional skill sets. Software updates can be quickly fielded to deployed forces to sustain advantage.

- Remote field operations centres: A hub-and-spoke model deployed in harsh environments, potentially using remote worker nodes. The operations centre would be the hub, enabling in-theatre decisions to be made closer to the warfighter, where data is collected. Various use cases are possible; essentially this is a portable solution, so that when field operations centres move, they can easily bring the required hardware and software with them from hub to hub. In addition, software capabilities can be updated in response to changing battlespace conditions.
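A very simple form of the predictive maintenance in the aircraft use case is trend extrapolation: fit a straight line to recent sensor readings and estimate when it will cross a maintenance limit. The Python sketch below is a hypothetical illustration of that ‘heads-up’ idea, not the data integration or model tooling mentioned above. The sensor, units and 80 °C limit are assumptions, and real models would account for noise, operating conditions and non-linear wear.

```python
from typing import Optional, Sequence


def hours_until_threshold(
    hours: Sequence[float], readings: Sequence[float], threshold: float
) -> Optional[float]:
    """Fit a least-squares line to the readings and estimate when it crosses the threshold.

    Returns None if the trend is flat or falling (no crossing predicted).
    """
    n = len(hours)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    mean_h = sum(hours) / n
    mean_r = sum(readings) / n
    sxx = sum((h - mean_h) ** 2 for h in hours)
    if sxx == 0:
        raise ValueError("readings must span more than one point in time")
    sxy = sum((h - mean_h) * (r - mean_r) for h, r in zip(hours, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_r - slope * mean_h
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])  # time remaining after the latest reading


# Hypothetical gearbox temperature logged every 10 operating hours, with an 80 °C limit.
print(hours_until_threshold([0, 10, 20, 30], [60.0, 62.0, 64.0, 66.0], threshold=80.0))
```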

A coherent approach to the management and operation of infrastructure and applications, infused with data and AI, is an essential theme of all these use cases. Furthermore, any implementation must allow the edge to operate autonomously and take over C2, for example after a loss of connectivity.
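Taking over C2 on loss of connectivity needs a clear trigger. A common pattern is a heartbeat timeout: the edge assumes local authority if it has not heard from the hub within a set period. The Python class below is a minimal, hypothetical sketch; the timeout value and the choice to hand authority back as soon as a heartbeat returns are assumptions, and a real system would need an agreed, auditable handover procedure.

```python
import time
from typing import Callable


class EdgeC2Authority:
    """Decide whether the edge should exercise C2 locally, based on hub heartbeats."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock  # injectable so the logic can be tested without waiting
        self._last_heartbeat = clock()
        self.local_authority = False

    def on_heartbeat(self) -> None:
        """The hub is reachable again: record it and (by assumption) hand authority back."""
        self._last_heartbeat = self._clock()
        self.local_authority = False

    def evaluate(self) -> bool:
        """Take over locally once the hub has been silent for longer than the timeout."""
        if self._clock() - self._last_heartbeat > self.timeout_s:
            self.local_authority = True
        return self.local_authority


# Demo with a fake clock (times in seconds), so no real waiting is needed.
fake_now = [0.0]
c2 = EdgeC2Authority(timeout_s=30.0, clock=lambda: fake_now[0])
fake_now[0] = 10.0
print(c2.evaluate())  # False: last heard from the hub 10 s ago
fake_now[0] = 45.0
print(c2.evaluate())  # True: 45 s of silence exceeds the 30 s timeout
c2.on_heartbeat()
print(c2.evaluate())  # False: authority handed back to the hub
```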

Security and resilience

The edge environment is exposed to particular types of threat, especially physical intervention, spoofing and tampering. The sensors, processing and communications must be designed with security and assurance in mind across these specific vectors, as well as for more traditional concerns such as encryption and verification.
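As one illustration of verification against spoofing and tampering, a sensor can attach a message authentication code that the processor checks before trusting a reading. The Python sketch below uses the standard library’s HMAC-SHA256; the shared key and message fields are hypothetical. HMAC detects messages altered or forged by anyone without the key, but it does not by itself provide confidentiality or replay protection, and it assumes the key has been provisioned and protected on the device, which is itself a physical-security concern at the edge.

```python
import hashlib
import hmac
import json


def _canonical(payload: dict) -> bytes:
    # Serialise deterministically so sender and receiver MAC exactly the same bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(payload: dict, key: bytes) -> dict:
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify(message: dict, key: bytes) -> bool:
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])  # constant-time comparison


key = b"hypothetical-shared-key"  # illustration only; never hard-code real keys
msg = sign({"sensor": "camera-7", "temperature_c": 41.5}, key)
tampered = {"payload": {**msg["payload"], "temperature_c": 20.0}, "tag": msg["tag"]}
print(verify(msg, key), verify(tampered, key))  # True False
```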

Security and resilience must be woven across the core and edge to command trust, not just within nations but across coalitions.

Call to action?

These enablers facilitate the interoperability that is necessary to build and operate a flexible system of systems. Processing can be optimised in the topology with data, insights and actions all presented and executed where best at the time. It is the basis for trusted decision making at the speed of relevance.

These technologies can be tested in an agile manner and should be evolved with reference to a clear strategy and a defined ‘Framework’. Explore more in the Manufacturing 4.0 meets Defence 4.0 blog post.

Global Technical Leader for Defence & Security

Enterprise Strategy - Defence Lead IBM
